Our research found that cycle time decreased by 5% while the time spent coding decreased by 11%, which suggests a shift in importance from writing code toward reviewing it. This has business and career implications for engineers: writing code well may matter less than being able to understand and review it.

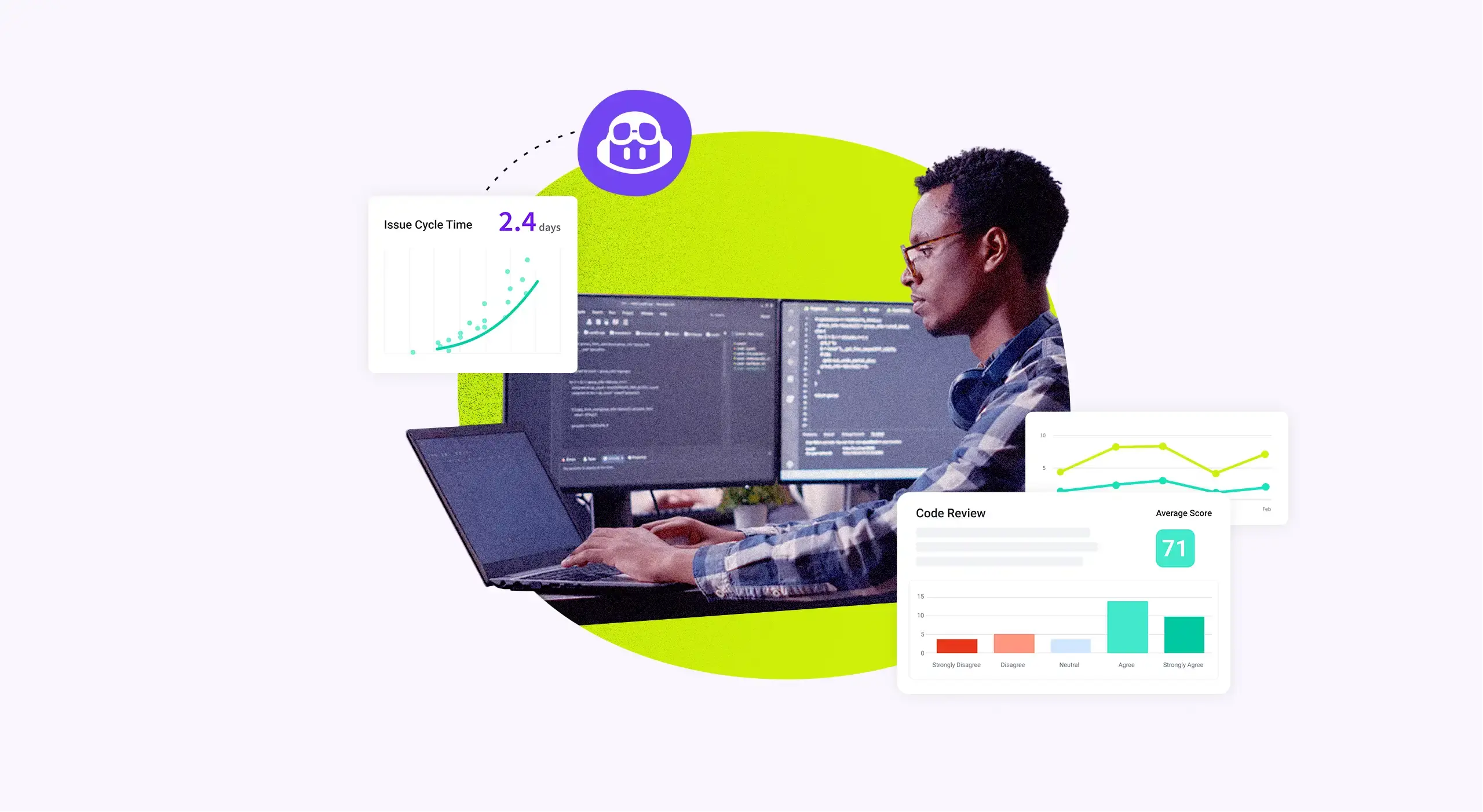

GenAI is redefining how we perceive productivity, and over the past year developers worldwide have continued to experiment with and adopt AI-powered coding tools.

In particular, GitHub Copilot offers real-time code suggestions and contextual guidance, making it easier for engineers to improve their productivity and efficiency. Tools like Copilot are likely to have a significant impact on the everyday work of software developers, not only by increasing coding speed but also by improving workflows and job satisfaction.

GitHub has already published surveys and studies on the impact of Copilot in controlled environments. However, there has been less research on how Copilot is changing engineering work based on real data from live deployments. Other perspectives, such as GitClear’s, point to the potential for “AI-induced tech debt.” Leading VCs are speculating about the implications of all this newly AI-generated code: “Larger surface area creates risk, more code review and more sloppy code.”

In Q4, we conducted a one-month study in collaboration with the engineers at Bench, an online bookkeeping service and Jellyfish customer. Using Jellyfish data, we investigated the changes Copilot brought about at both the individual and the organizational level.

For both individuals and the organization, we established four hypotheses about the changes we expected to see from integrating Copilot into engineering workflows. Using Jellyfish data (e.g., metrics, coding time, life cycle explorer), internal Jellyfish analysis (e.g., context switching, flow time), and our Jellyfish DevEx surveys, we investigated these hypotheses in the context of Bench’s engineering operations.

Granted, this is a single data point. Our early read is that developer happiness goes up, and that more strategic and creative work will have to happen at the front end of an initiative.

Bench’s Copilot trial

Bench rolled out Copilot to all active engineers, who were encouraged to use it for everyday tasks over a one-month period. We sent out a DevEx survey on the first day of the trial with questions about general satisfaction and workflow; these answers gave us a baseline against which to compare the post-trial results. At the end of the month, we sent a similar survey that also included questions about how engineers had used Copilot.

To test some of our hypotheses, we compared the month before the experiment to the month of the experiment.

Here are the biggest takeaways:

- The perceived impact was larger than what we measured in the data: Bench’s engineering team reported a wide range of positive feedback after the experiment, saying that their work was significantly easier and more enjoyable. However, the changes we observed in workflows and day-to-day signals were less pronounced than the survey responses suggested. Many of the changes we expected to see were more subtle in a real-life work environment than in GitHub’s controlled environments.

- Coding time generally decreased: Bench’s coding time (the amount of time spent writing code per ticket) decreased by 11% during the month of the experiment. However, the decrease was not uniform across types of work: coding time for tasks decreased by 40%, while coding time for bugs and defects did not change. This supports our hypothesis that AI coding tools will have a greater impact on repetitive tasks than on bug work, which is highly codebase-specific.

- Individual contributors found it easier to write code with Copilot and enjoyed their work more: Questions about developer experience drew overwhelmingly positive feedback. Overall, Bench engineers were 5% more satisfied with their work during the trial period. Engineers were particularly enthusiastic about how easy it was to write code in their current environment, a question whose survey scores increased by 9%.

- PR review time and number of comments decreased: One of the most common assumptions about AI and coding is that developers will spend less time generating code and more time reviewing it. The Bench experiment did not bear this out: both PR cycle time and the number of reviews decreased during the trial period. Additionally, reviews were 3% more likely to include positive comments than before Copilot was introduced.

- Effort spent in refinement increased: Software development can be divided into four phases: the definition and refinement period, in which work is planned and tickets are created; the work period, during which most of the code is written; the review period; and the deployment period. We expected to see a shift in the phases where developers spent most of their time, and we found that engineers were able to spend less time writing code and more time on creative work during the refinement period.
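Percentages like the coding-time changes reported above are relative changes between a baseline month and a trial month. The Python sketch below illustrates that arithmetic for an average-coding-time-per-ticket metric, both overall and split by ticket type. It is a minimal sketch under stated assumptions: the `(ticket_type, coding_hours)` record shape, the ticket-type labels, the toy numbers, and the helper names are all hypothetical, and this is not Jellyfish’s actual implementation.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Hypothetical record shape: (ticket_type, coding_hours) for one completed ticket.
# Names and values here are illustrative only, not Jellyfish or Bench data.
Ticket = Tuple[str, float]


def coding_time_per_ticket(tickets: List[Ticket], ticket_type: Optional[str] = None) -> float:
    """Average coding hours per ticket, optionally restricted to one ticket type."""
    hours = [h for kind, h in tickets if ticket_type is None or kind == ticket_type]
    if not hours:
        raise ValueError(f"no tickets of type {ticket_type!r}")
    return mean(hours)


def percent_change(before: float, after: float) -> float:
    """Relative change from the baseline value to the trial value, in percent."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before * 100


def compare_months(before: List[Ticket], after: List[Ticket],
                   ticket_types: List[str]) -> Dict[str, float]:
    """Percent change in coding time per ticket, overall and for each ticket type."""
    changes = {"all": percent_change(coding_time_per_ticket(before),
                                     coding_time_per_ticket(after))}
    for kind in ticket_types:
        changes[kind] = percent_change(coding_time_per_ticket(before, kind),
                                       coding_time_per_ticket(after, kind))
    return changes


if __name__ == "__main__":
    # Toy data for illustration only (hypothetical).
    baseline_month = [("task", 10.0), ("task", 6.0), ("bug", 4.0)]
    trial_month = [("task", 5.0), ("task", 4.0), ("bug", 4.0)]
    for metric, change in compare_months(baseline_month, trial_month, ["task", "bug"]).items():
        print(f"{metric}: {change:+.1f}%")
```

Averaging per ticket keeps the comparison fair when the two months close different numbers of tickets, and splitting by type is what surfaces a pattern like task work changing while bug work stays flat.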

New hypotheses for an AI-enabled future

It’s clear that Bench’s developers were more satisfied while using Copilot. But was the team more productive?

At Jellyfish, we don’t believe that productivity can be measured by simply counting PRs and lines of code. Instead, developer productivity is reflected in how well developers complete their responsibilities and how they contribute to the success of their team and their company as a whole. We can track this type of productivity by looking at context switching, focus/flow time, and perceived productivity on product roadmap work. The post-experiment survey showed a 5% increase in perceived productivity.

More importantly, working more effectively leaves more time for the important work that happens before any code is generated: developing creative ideas and refining work.

Will Copilot enable engineering teams to generate more lines of code? Maybe. But the Bench case study suggests that it can already free up engineers to spend more time on planning and refinement, which should in turn lead to more creative, innovative software solutions.