The DORA program aims to help software engineering organizations “get better at getting better.”

As part of its mission, DORA runs an annual survey to explore the capabilities and practices that drive high software delivery performance. Almost 5,000 respondents from global organizations in every industry contributed to this year’s survey. The findings are reported in the State of AI-assisted Software Development. It’s essential research, and Jellyfish was proud to be a gold sponsor.

In our latest Deep Dive webinar, Nathen Harvey, lead author, joined Jellyfish advisor Adam Ferrari, Engineering Manager Marilyn Cole, and Senior Sales Engineer Milan Thakker to discuss results from the report and what they mean for engineering teams and leadership.

AI Adoption is Almost Universal

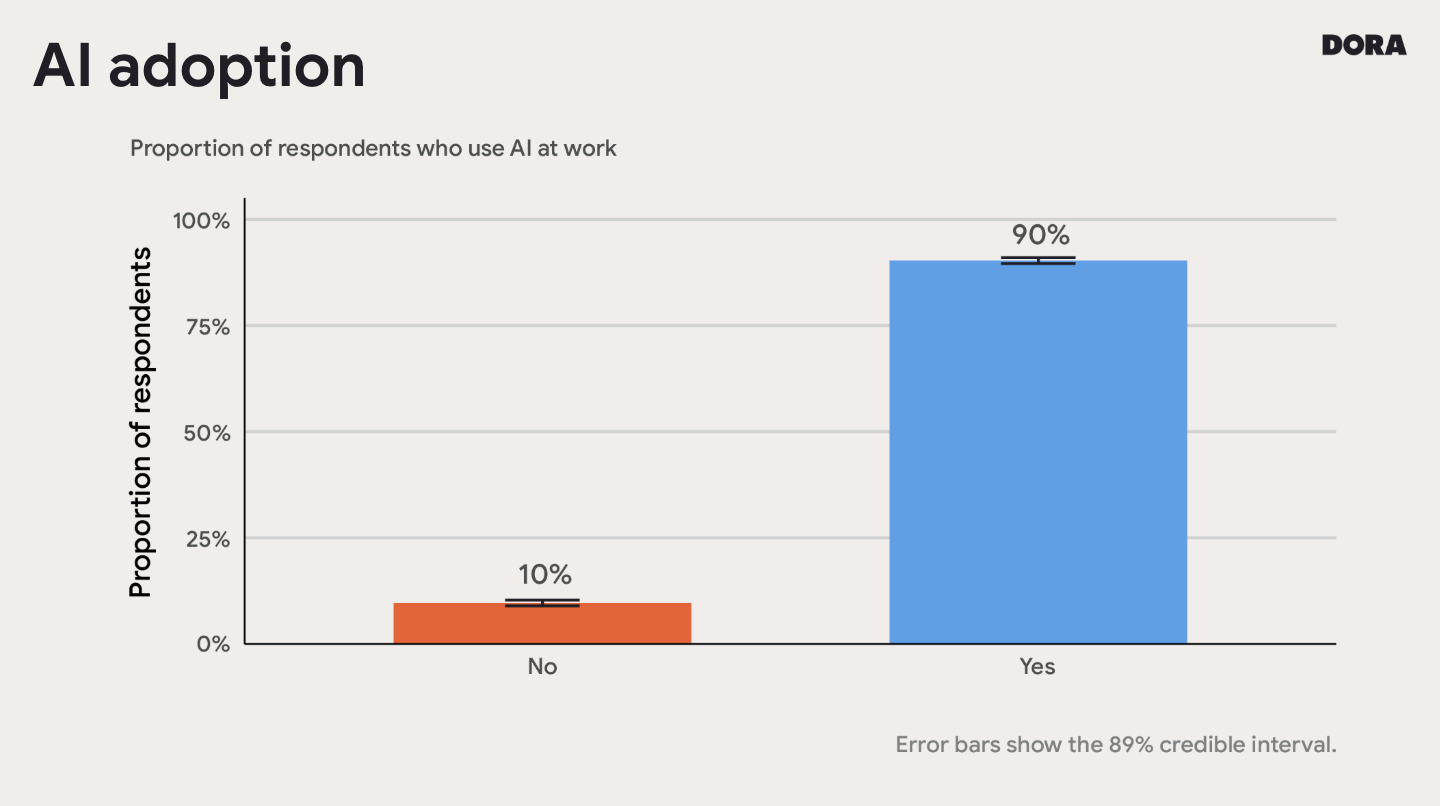

Around 90% of survey respondents said they used AI tools at work. Adoption is almost universal, and the number of engineers integrating AI into their workflows continues to grow. “I believe we’re at the point where we won’t have to ask this question next year,” said Harvey.

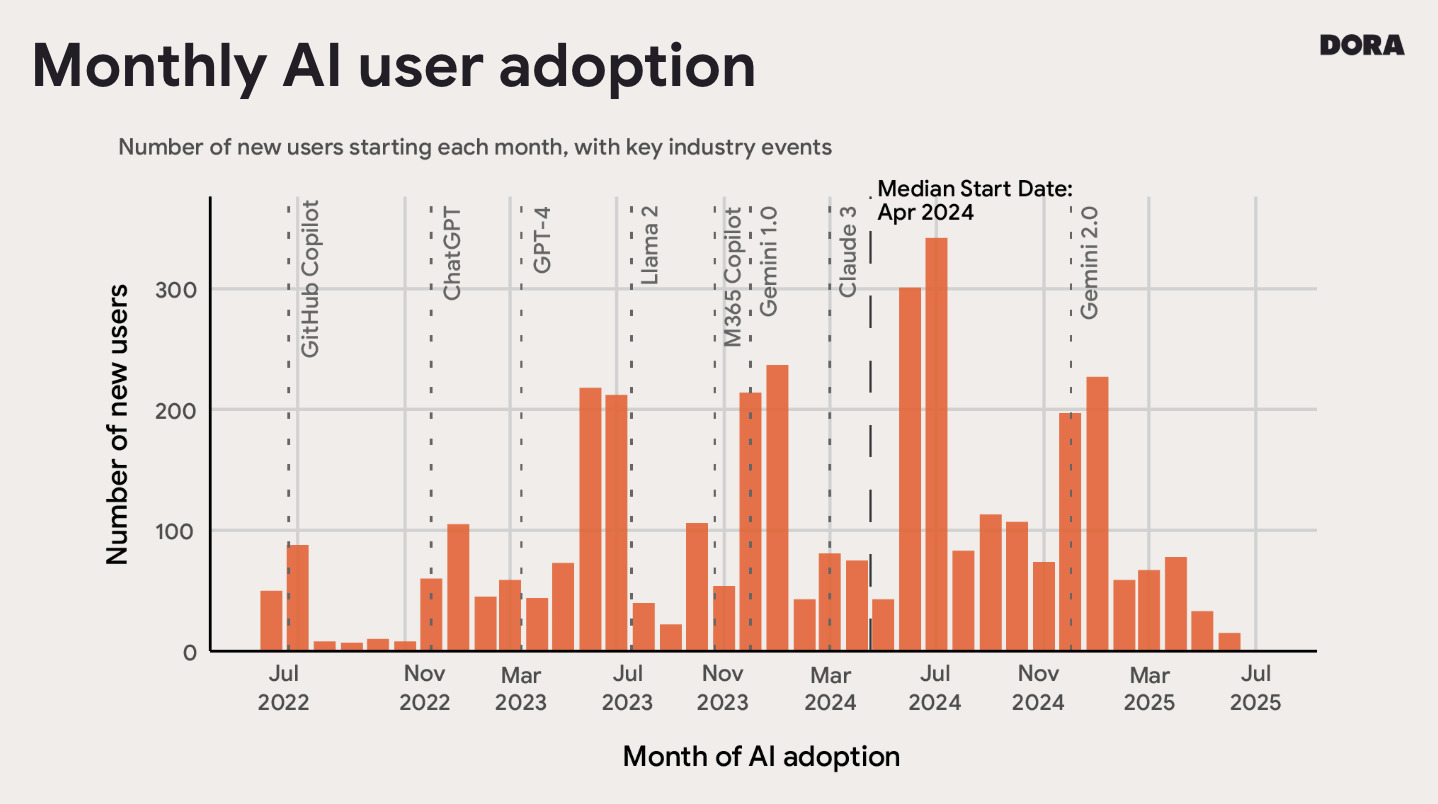

The DORA survey also provides insights into the rate of AI adoption. While there is a clear upward trend, AI use doesn’t always increase at the same pace. The report reveals bumps in adoption following the release of larger AI models, including Copilot, GPT-4, and Gemini 2.

With AI dominating conversations about software engineering, it’s easy to assume everyone else is racing ahead. However, the DORA survey shows that it’s still early days for most organizations. “April 2024 was the median start date of adoption,” said Harvey. “Even if you’re just getting started with AI in your organization, you’re not that far behind.”

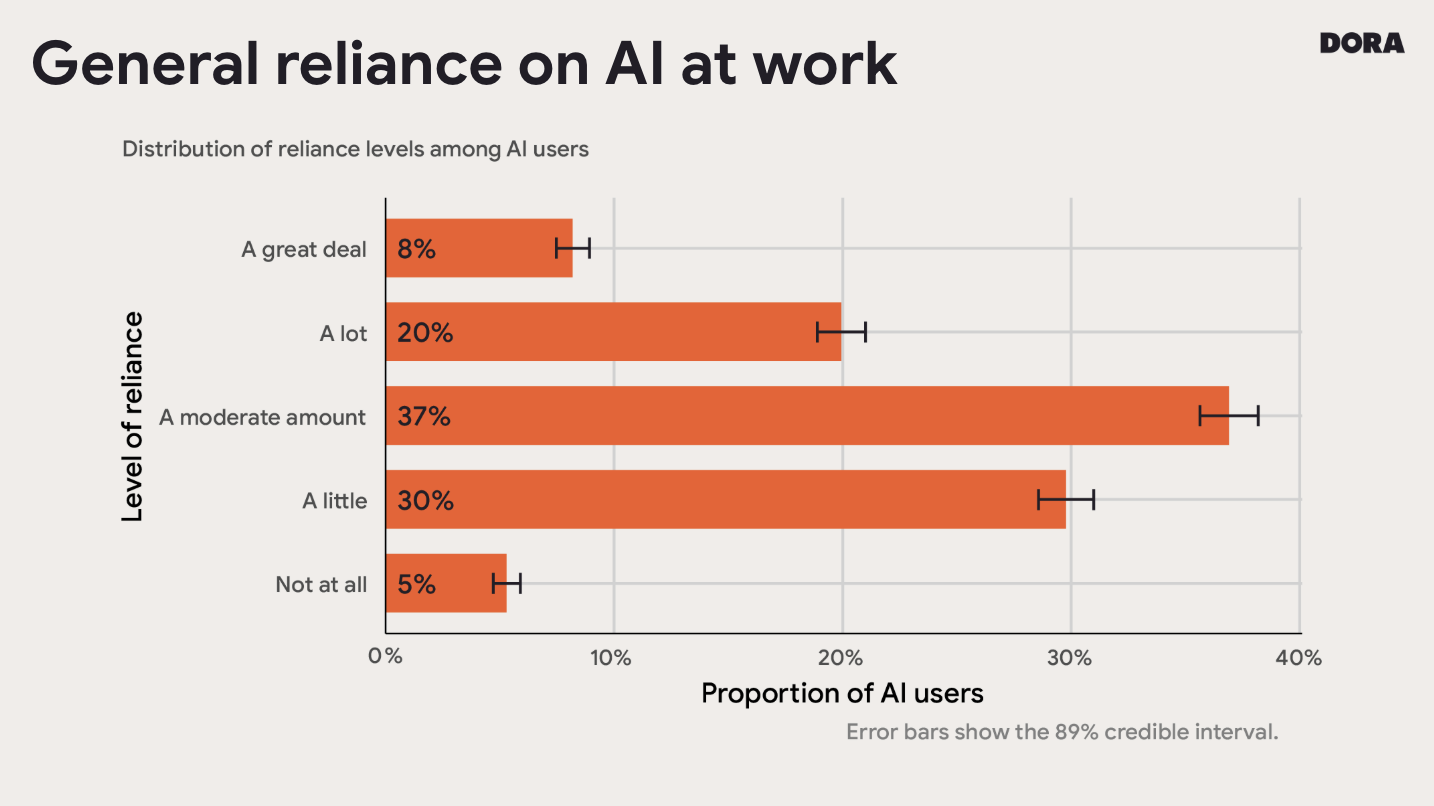

DORA isn’t just interested in the percentage of software engineers using AI; it also wants to understand how much those engineers depend on AI’s capabilities. According to the survey, 95% of AI users rely on the technology for at least one of their regular tasks.

At Jellyfish, we’re seeing similar adoption trends among our customers. “What I see out in the field with our customers is that AI adoption is skyrocketing,” said Thakker. But while usage is becoming increasingly widespread, gaps remain: “There’s still a significant population – around 40% of some of the companies we work with – where next to none of the code is assisted by AI,” Thakker added. “This doubles down on the point that it’s not too late to start.”

Individuals feel more productive with AI

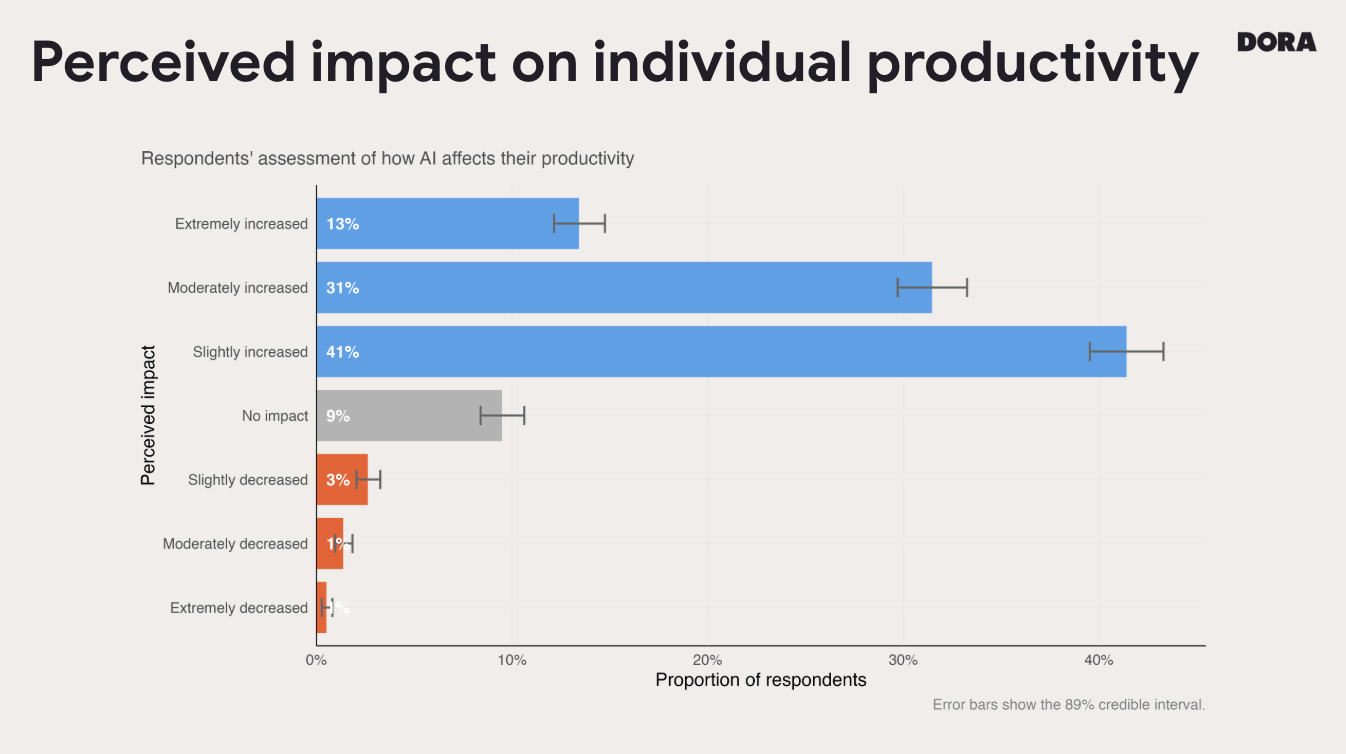

“A large majority of survey respondents feel like AI is positively impacting their productivity,” said Harvey. In fact, 13% said their productivity had increased extremely, 31% moderately, and 41% slightly.

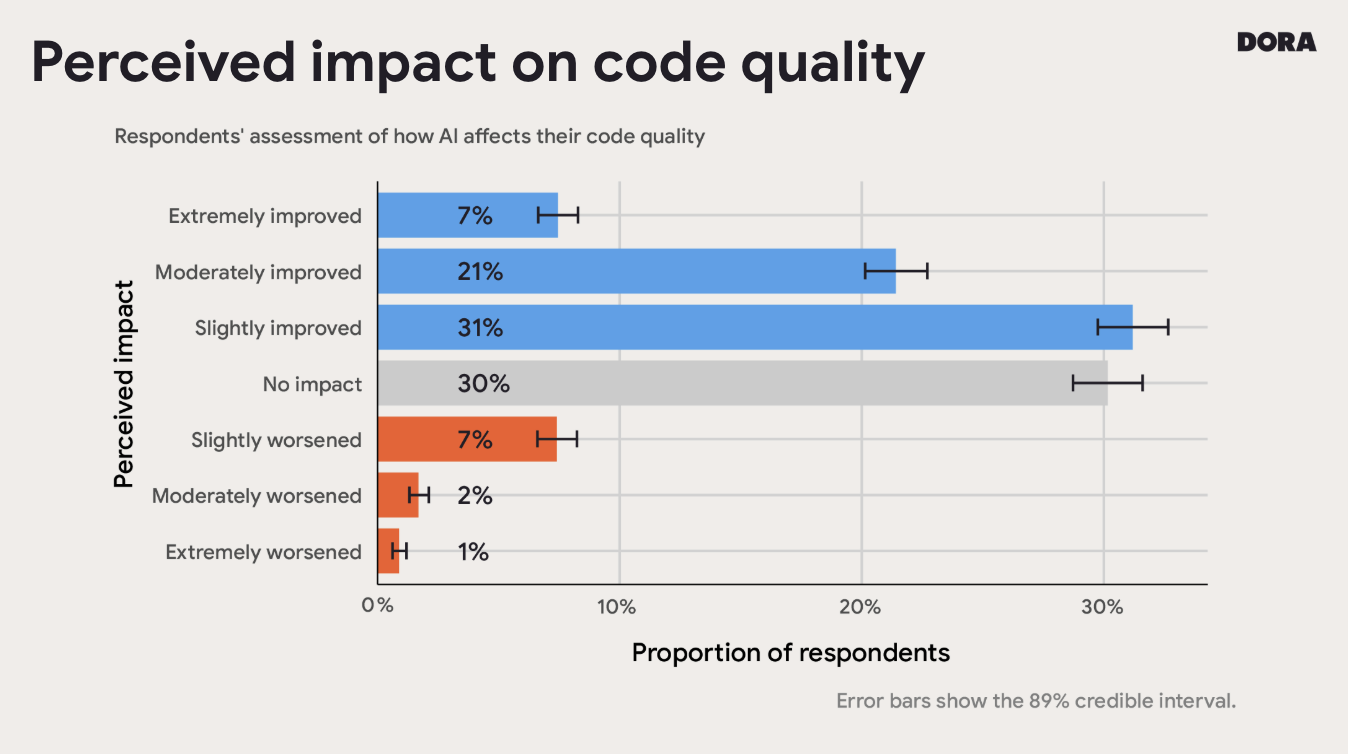

Understanding perceptions of AI and code quality is more challenging; a quality codebase means different things to different people. But in general, respondents do feel AI improves the quality of their code, with 52% perceiving a moderate or slight improvement.

Using Jellyfish data, we can assess the accuracy of those perceptions. “What we’ve found is that AI adoption doesn’t really have a strong influence on how many bugs you have to deal with, or how many bugs per PR that you actually have,” said Thakker. “At least from the customer perspective, it appears that you’re probably building things faster with AI, but you’re dealing with the same number of bugs, or the same percentage of bugs, as before.”
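
To make the “same percentage of bugs” point concrete, here is a minimal sketch of a bugs-per-PR comparison. The function name `bugs_per_pr` and all of the numbers are invented for illustration; they are not Jellyfish or DORA data, and this is not how Jellyfish computes the metric.

```python
def bugs_per_pr(bug_count: int, pr_count: int) -> float:
    """Return the average number of bugs attributed to each merged PR."""
    if pr_count <= 0:
        raise ValueError("pr_count must be positive")
    return bug_count / pr_count


# Invented numbers for illustration only, not Jellyfish or DORA data.
# The hypothetical team merges more PRs with AI, and bugs grow in proportion.
before_ai = bugs_per_pr(bug_count=20, pr_count=100)
with_ai = bugs_per_pr(bug_count=26, pr_count=130)

# Compare with a small tolerance rather than exact float equality.
same_rate = abs(before_ai - with_ai) < 1e-9
print(f"before: {before_ai:.2f}, with AI: {with_ai:.2f}, same rate: {same_rate}")
```

In this made-up case the team ships more PRs while the bug rate per PR stays flat, which is the pattern Thakker describes: faster delivery, but no change in the share of work that produces bugs.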

A healthy level of trust

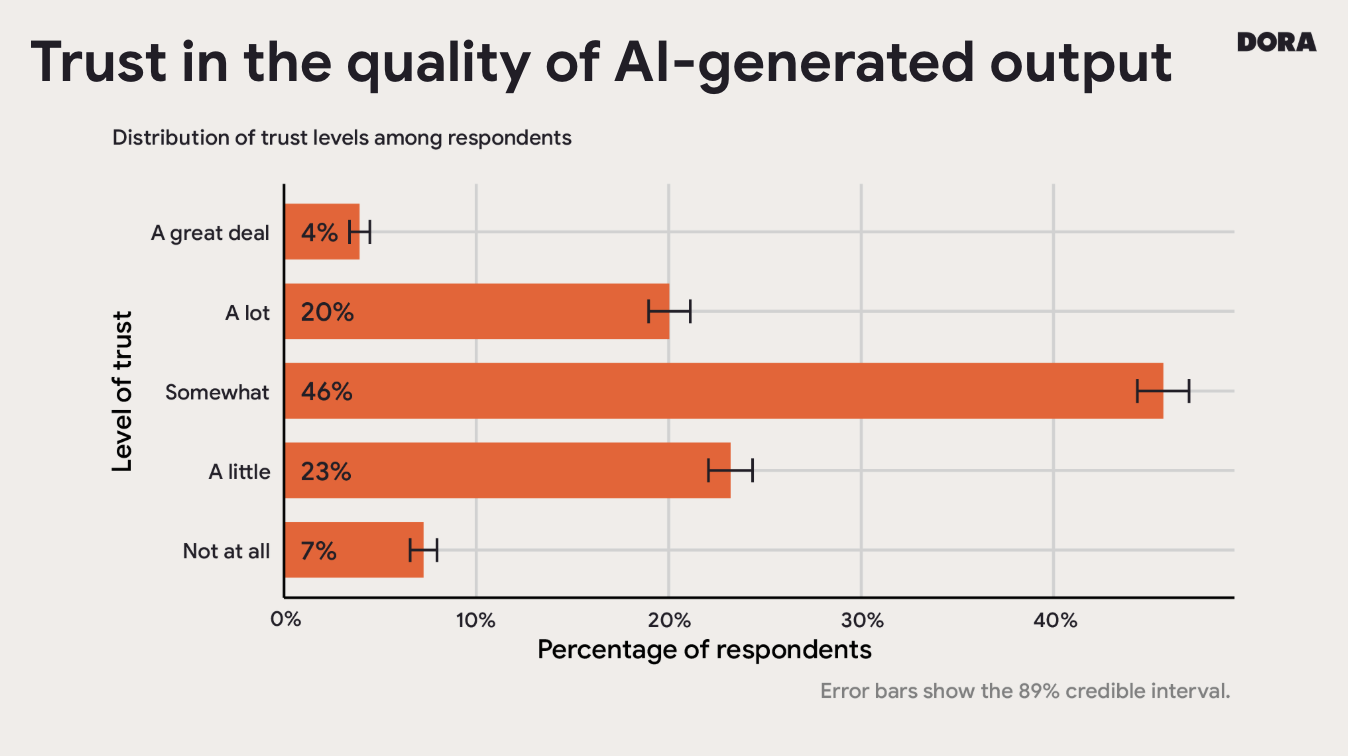

Engineering organizations need to approach AI with the right combination of trust and precaution. To understand whether teams are finding that balance, the DORA survey asked participants whether they trust AI outputs such as coding suggestions and emails.

“About 30% of our survey respondents trust the output a little or not at all,” said Harvey. “Frankly, I think this is a healthy level of trust. Like with all software changes, we should trust but verify. If I wrote the code, I would need some feedback mechanism, and that’s definitely true with AI.”

The rise of agentic AI

Agentic AI is the next major advancement for software engineering, and it’s quickly gaining traction. “We collected our information in June and July,” explained Harvey. “At that time, 61% of respondents said that they had never interacted with agentic workflows with AI. That number has likely already come down as we see more and more agentic use. It’s still very, very early in the game.”

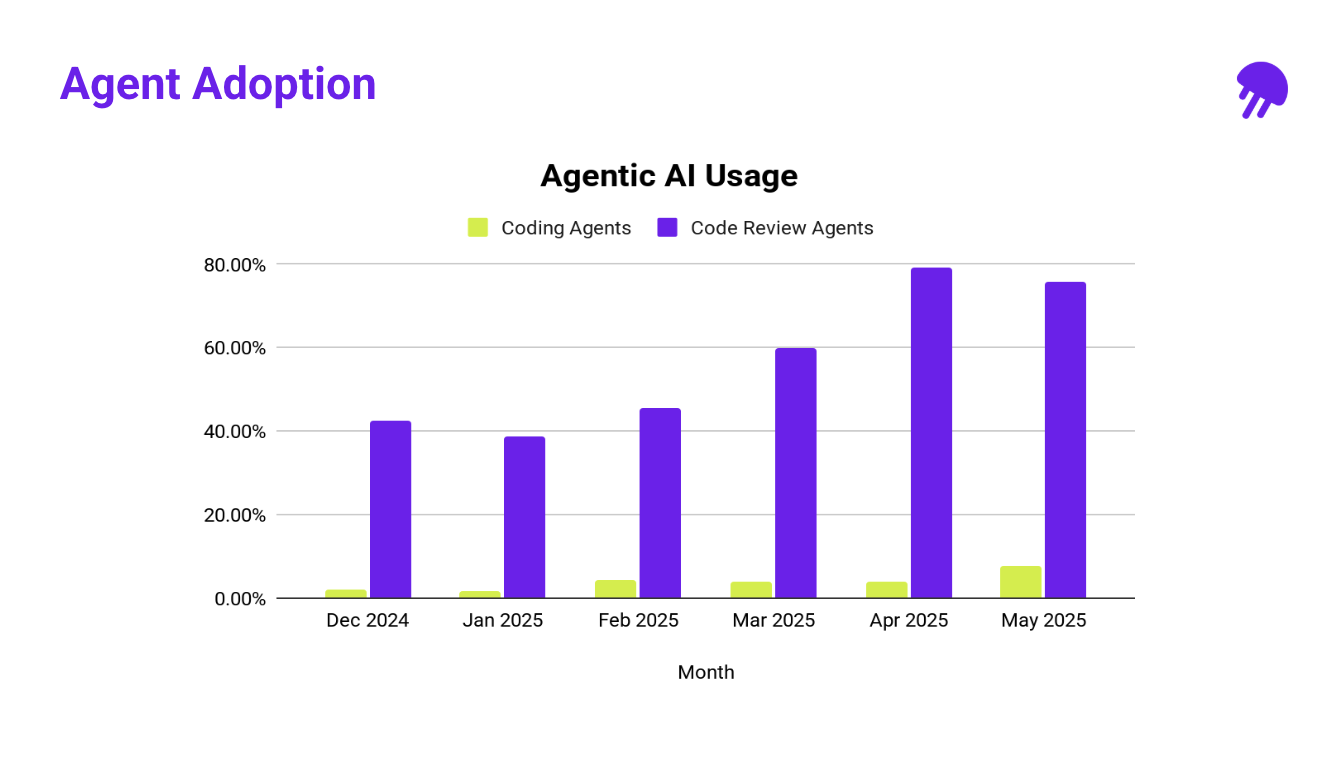

Across the industry, engineering leaders are envisioning a future in which AI agents can write code autonomously. But when we look at agentic AI usage today, coding work still makes up a tiny percentage. Most agentic AI use remains focused on code review: “A variety of different code review agents are being used across the board, including Copilot, CodeRabbit, Code Coverage, and CursorBugBot,” said Thakker. “And I guarantee you this list will change two or three weeks from now as people experiment with more and more agentic modes.”

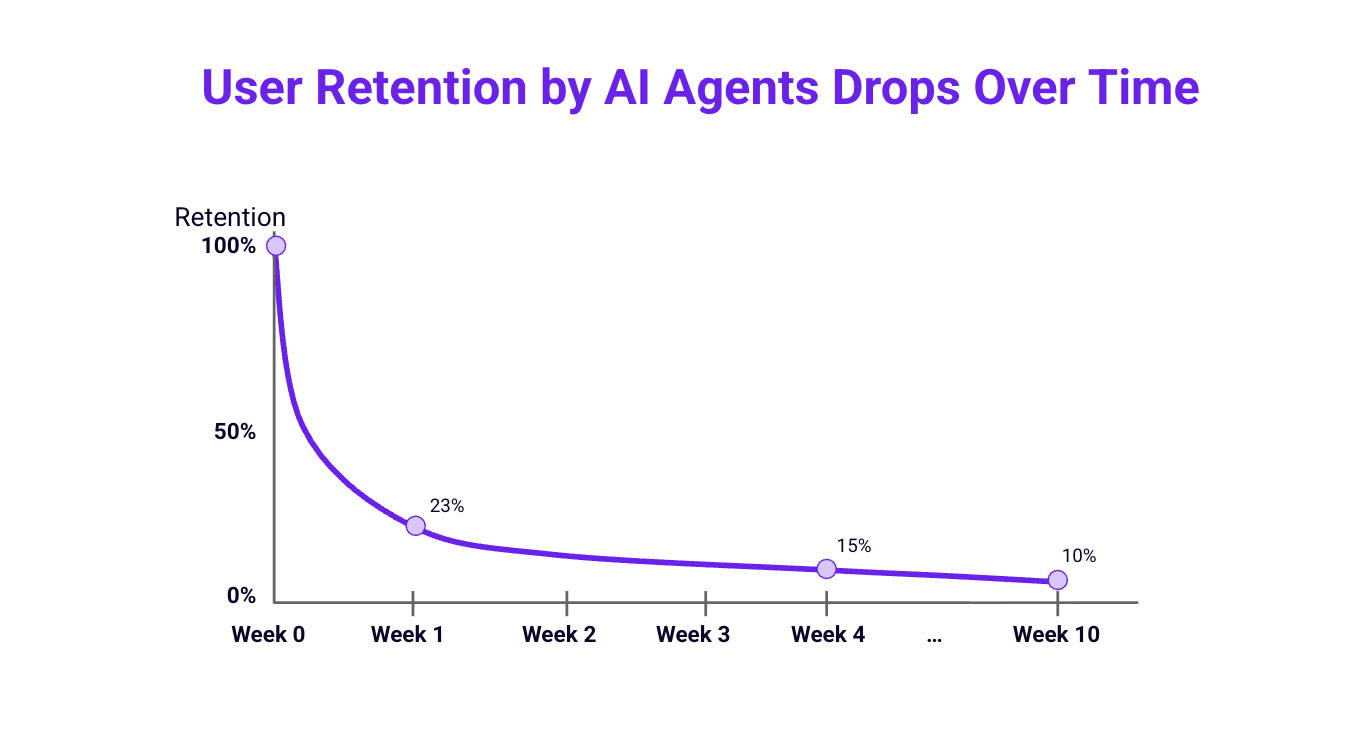

Low retention rates suggest we’re still in the experimental phase with agentic AI: “If people are using it in week one, less than 10% of those people are still using agents to write code ten weeks later,” said Thakker. “People are still figuring out how to govern it and exactly how to use it. The data reflects that.”
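
For teams that want to check this against their own usage logs, retention of this kind can be sketched as a simple cohort calculation. The function `agent_retention`, the `(user, week)` record shape, and the sample names below are all assumptions made for illustration, not the method used in the webinar.

```python
def agent_retention(usage, cohort_week=1, weeks_later=10):
    """Share of users active in cohort_week who are also active weeks_later on.

    usage is an iterable of (user, week_number) records, one per week in
    which that user had an agent write code.
    """
    records = list(usage)
    target_week = cohort_week + weeks_later
    cohort = {user for user, week in records if week == cohort_week}
    later = {user for user, week in records if week == target_week}
    if not cohort:
        return 0.0
    # Only count users who were in the original cohort.
    return len(cohort & later) / len(cohort)


# Hypothetical usage log: four engineers try agents in week 1,
# and only one of them is still using agents in week 11.
sample_usage = [
    ("ana", 1), ("ben", 1), ("cy", 1), ("dee", 1),
    ("ana", 11), ("eli", 11),
]
rate = agent_retention(sample_usage)
print(f"retention after 10 weeks: {rate:.0%}")
```

Note that users who first appear in the later week (like the hypothetical `eli`) are excluded, because retention only tracks the people who started in the cohort week.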

The Impact of AI in the SDLC

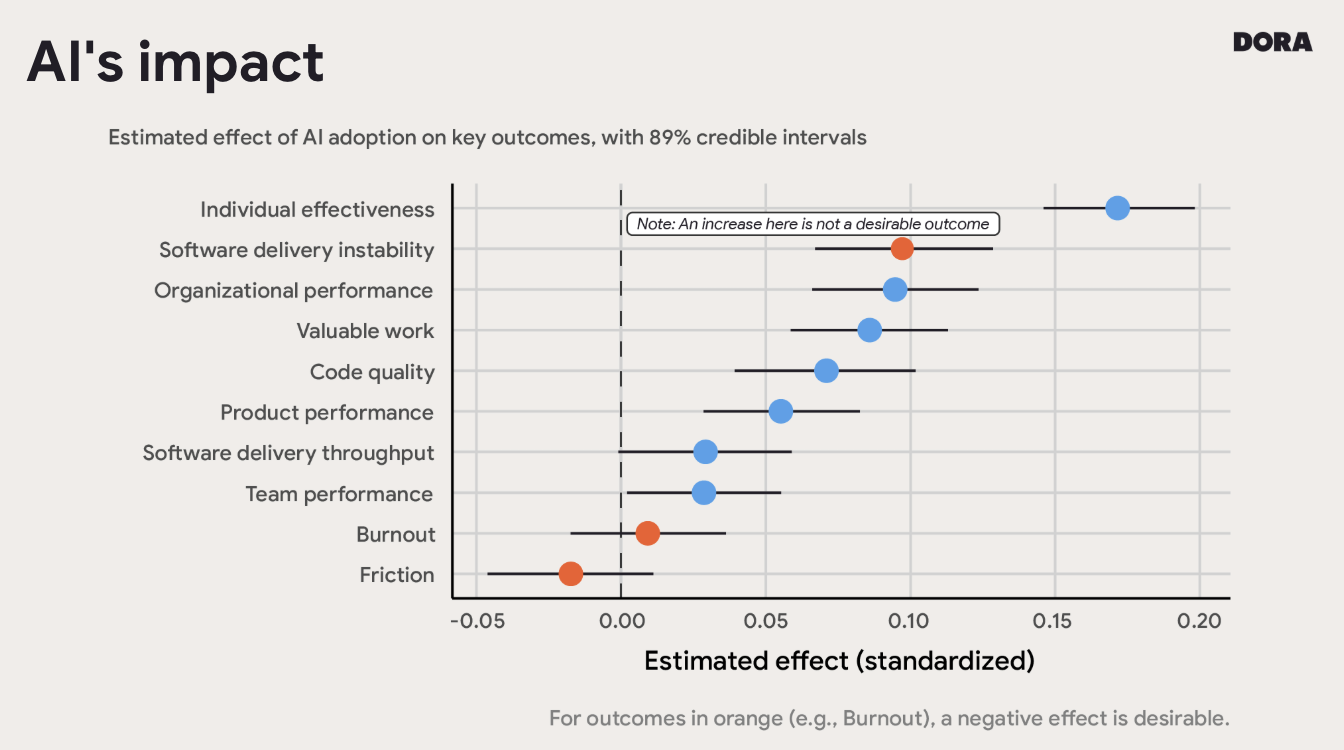

Organizations are going all in on AI, but many don’t understand how adoption impacts important markers like team and product performance. DORA built a data model to investigate what happens when you amp up AI. “You can’t do this in your team; it’s too complex, but we can do it in our data,” said Harvey. “We ran a bunch of simulations to see what the expected impact would be on the outcomes we’re interested in.”

Individual effectiveness increased the most with greater AI adoption. The simulations also showed improvements in organizational performance, team performance, and software delivery throughput.

But not every outcome changed for the better. Software delivery instability went up – an indication that critical issues are leading to unplanned deployments. Outcomes such as friction and burnout were unaffected by AI. “If you’re an engineer feeling burnt out, just throwing a new tool at you isn’t going to help,” said Harvey.

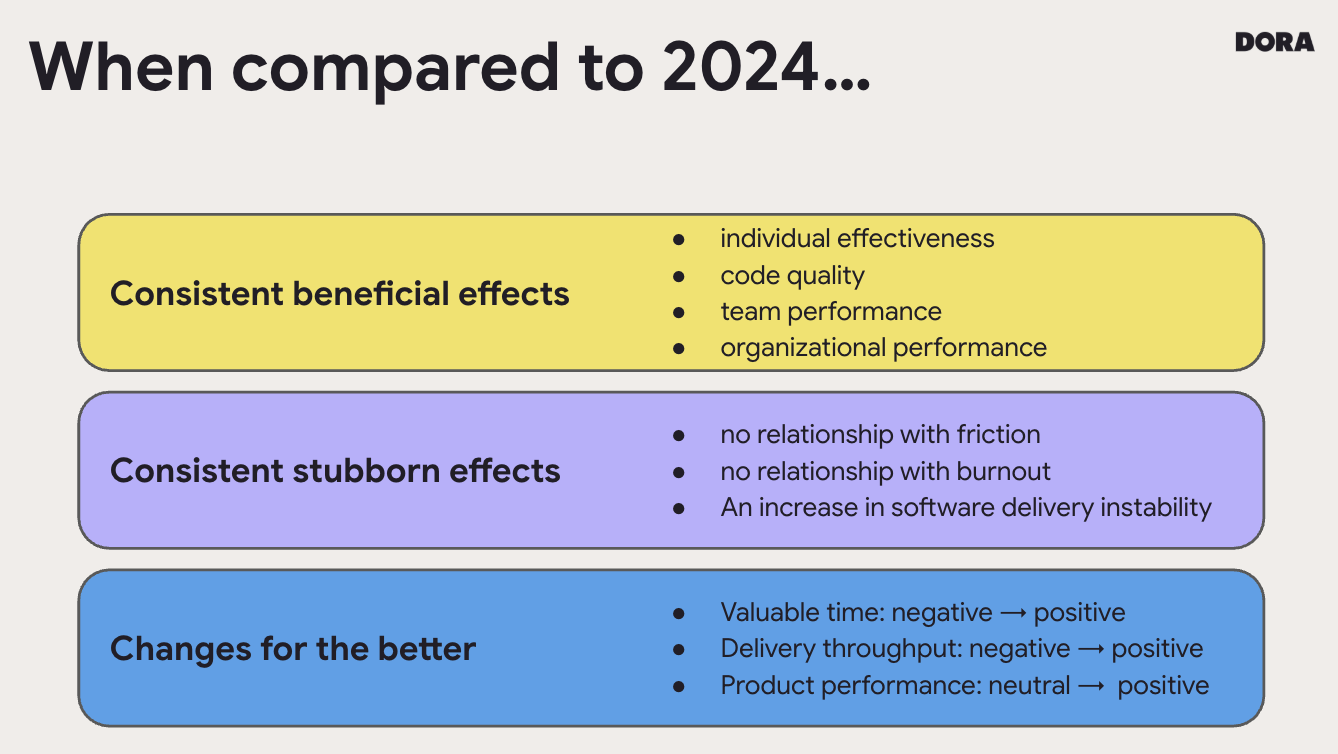

AI impact is an evolving story

It’s exciting to watch the impact of AI evolve in real time. Comparing results from the 2024 DORA survey to this year’s, we can see consistent benefits in areas like individual effectiveness, team performance, and code quality. Delivery throughput, product performance, and valuable time all changed for the better.

“It’s a story of adaptation as we learn how to interact best with these tools,” said Harvey. “The tools are getting better, and even the surfaces that we’re interacting with are changing.” Ferrari agreed, adding, “AI doesn’t immediately make everything better. It’s a journey, and there are a variety of enablement activities you have to carry out along the way.”

Measuring AI impact with Jellyfish

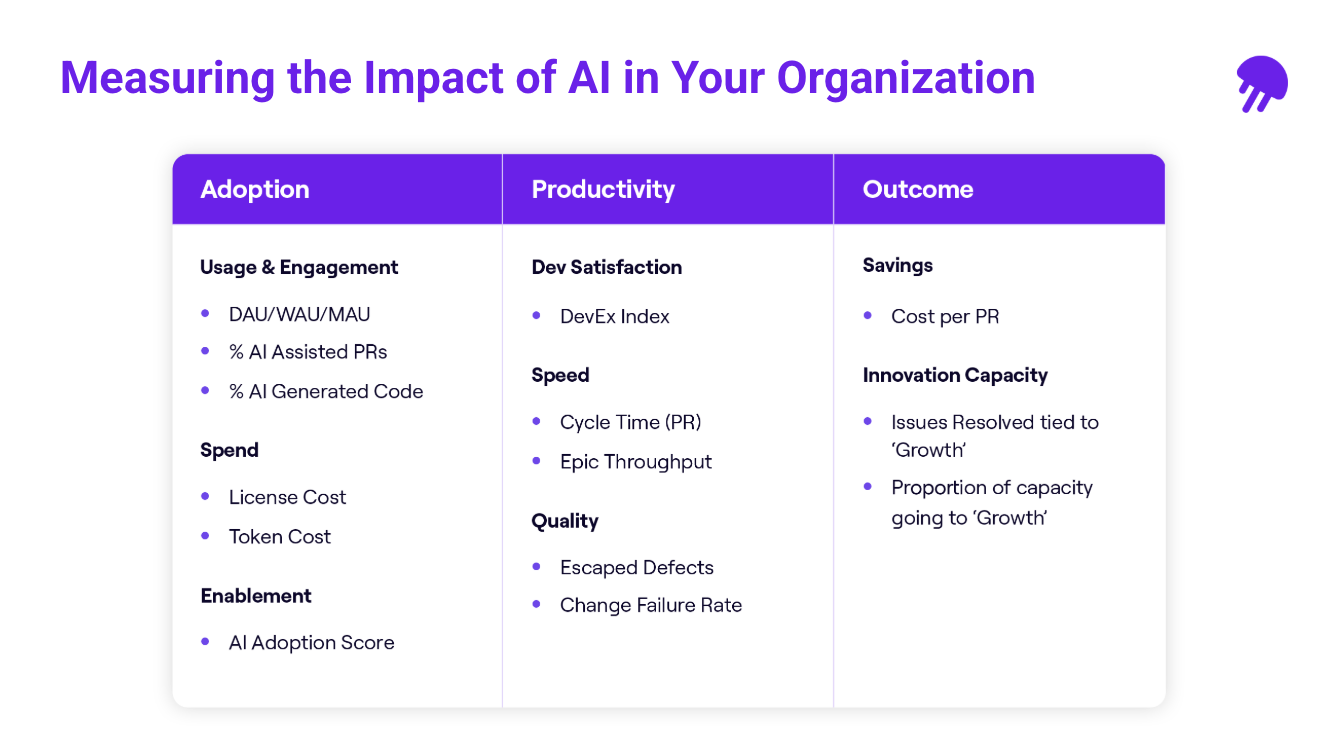

There’s plenty of debate around which are the right metrics to measure AI impact. But the reality is that different metrics matter to different organizations. Engineering leaders need to focus on the metrics that spark conversations and spur change in their organizations.

Jellyfish AI Impact can help you answer key questions around adoption, productivity, and outcomes, including:

- Is AI allowing you to generate more code?

- Are you moving through more tasks because of AI?

- Is AI helping to increase work on new features?

- Does the team find value in AI coding tools?

- What do engineers need to get the most out of your tools?

With visibility into how AI tools impact your team, you can make smarter investments and optimize your AI strategy.

AI Is a Mirror and a Multiplier

Above all, the 2025 DORA report reveals how AI magnifies an organization’s existing strengths and weaknesses. “The greatest returns on AI investment come from a strategic focus on the underlying organizational systems,” said Harvey. “In well-organized organizations with strong practices, AI amplifies that flow and accelerates value delivery. And in fragmented organizations with brittle processes, AI will expose those pain points and bottlenecks. You’ll feel the pain of those bottlenecks more acutely.”

AI is motivating leaders to fix the issues standing in the way of success, and that means greater investment in areas that improve the developer experience. “So many of those success factors were about having a better environment for the human engineers, and the fact that those were also the things that were the best enablers of AI impact is kind of delightful,” said Ferrari. “It intuitively made sense, but it was an exciting finding.”

Watch the full webinar for more insights from the 2025 DORA State of AI-assisted Software Development report.

Take a Closer Look

Ready to start measuring AI impact in your organization?

Request a demo to see Jellyfish in action.

About the author

Jellyfish is the leading Software Engineering Intelligence Platform, helping more than 700 companies, including DraftKings, Keller Williams and Blue Yonder, leverage AI to transform how they build software. By turning fragmented data into context-rich guidance, Jellyfish enables better decision-making across AI adoption, planning, developer experience and delivery so R&D teams can deliver stronger business outcomes.