When it comes to determining the impact of AI coding tools, reliable data has been few and far between, and published results can tell conflicting stories. For instance, a recent study from METR found that in some cases and under some conditions, AI tools can actually slow developers down, while an earlier study from Microsoft showed significant gains.

At Jellyfish, we’ve been collecting a robust set of AI impact data across more than 500 companies, representing the coding activities of tens of thousands of engineers, comprising millions of pull requests and billions of lines of code. Real-world data at this scale can help us understand what’s really going on out in the field as companies engage in the journey of AI transformation.

We recently teamed up with the folks at OpenAI to take a closer look at how AI tools are affecting coding productivity and quality. Here’s what we found:

Increasing AI adoption means shipping more code, and faster

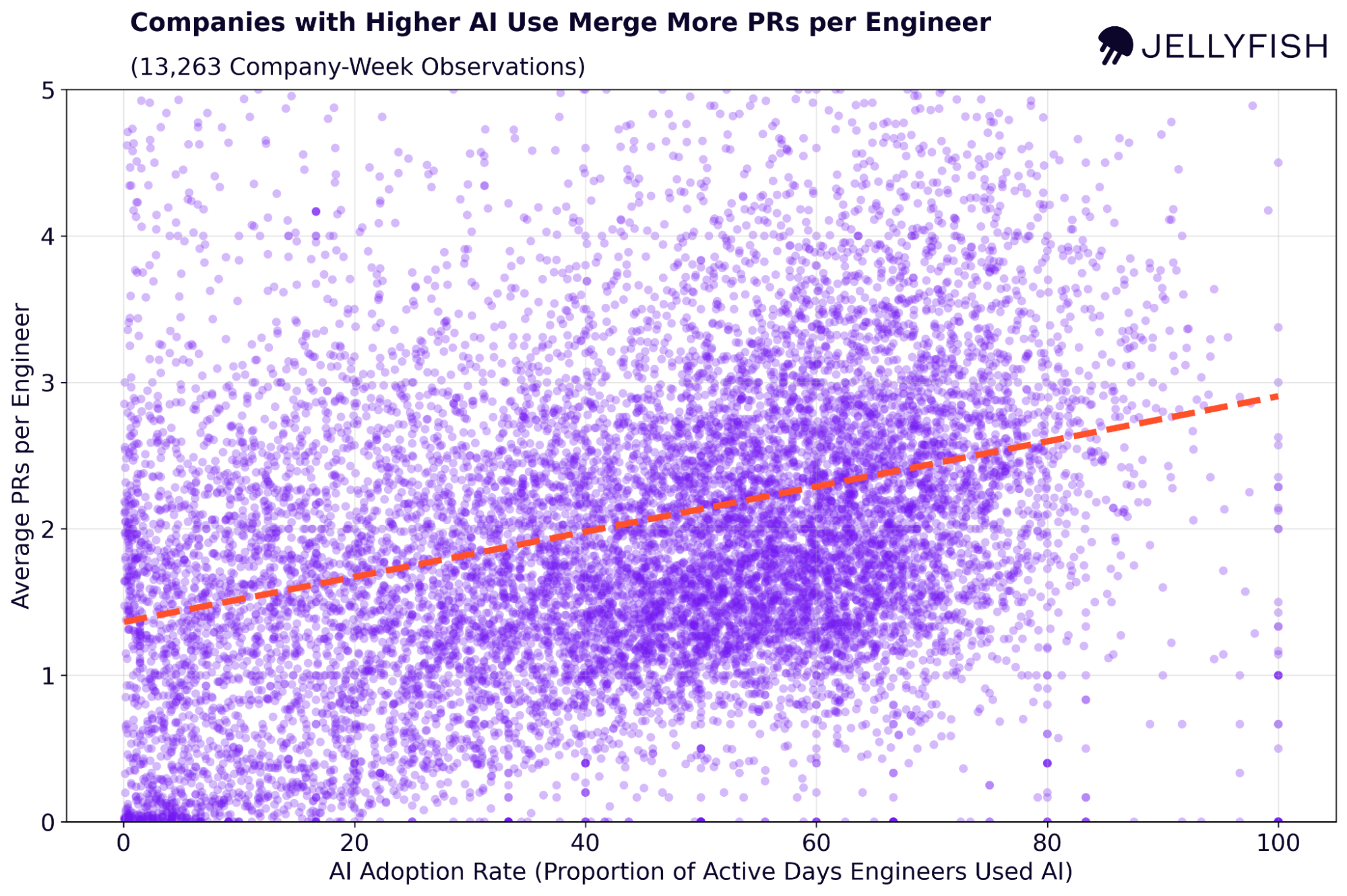

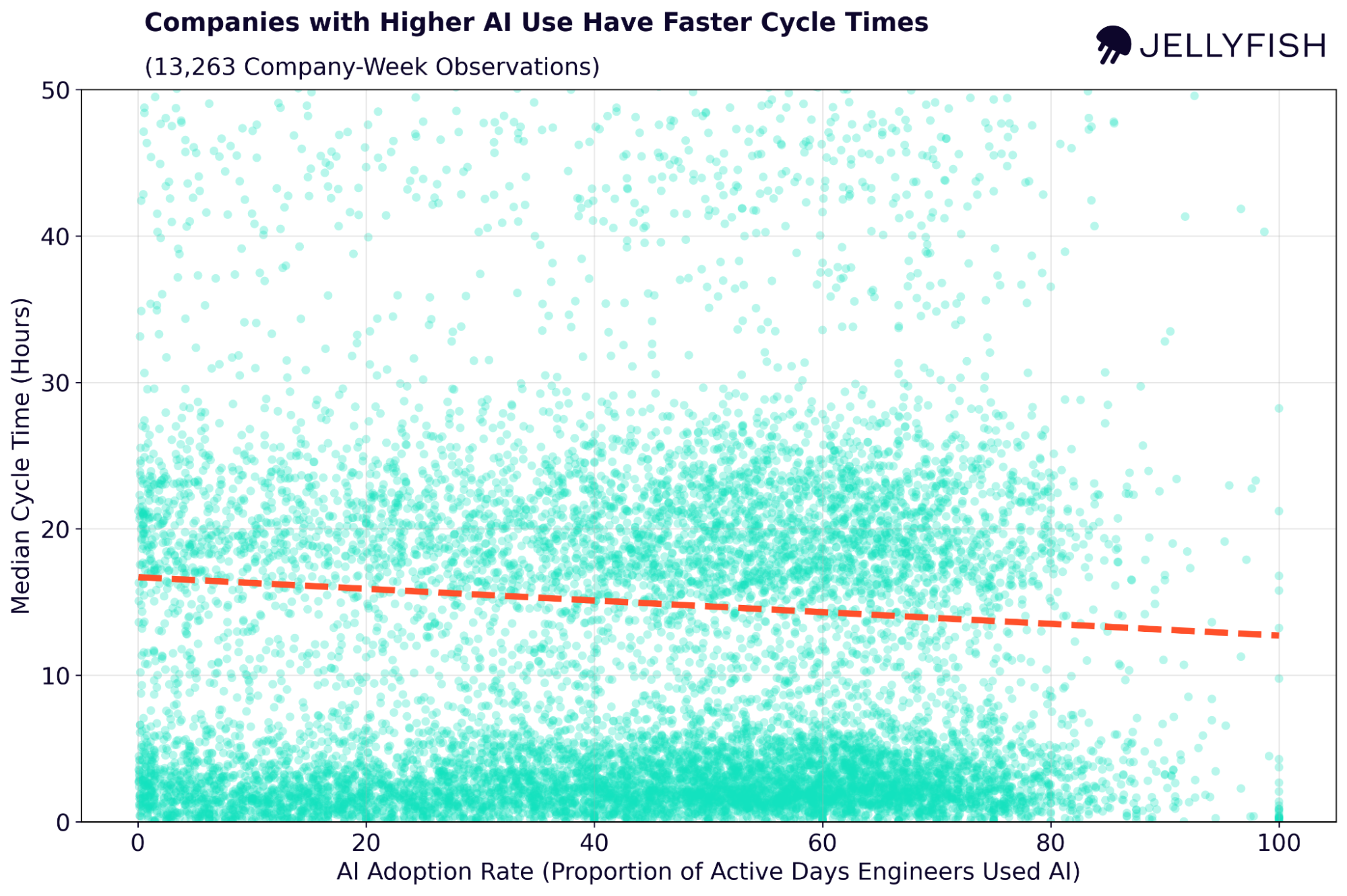

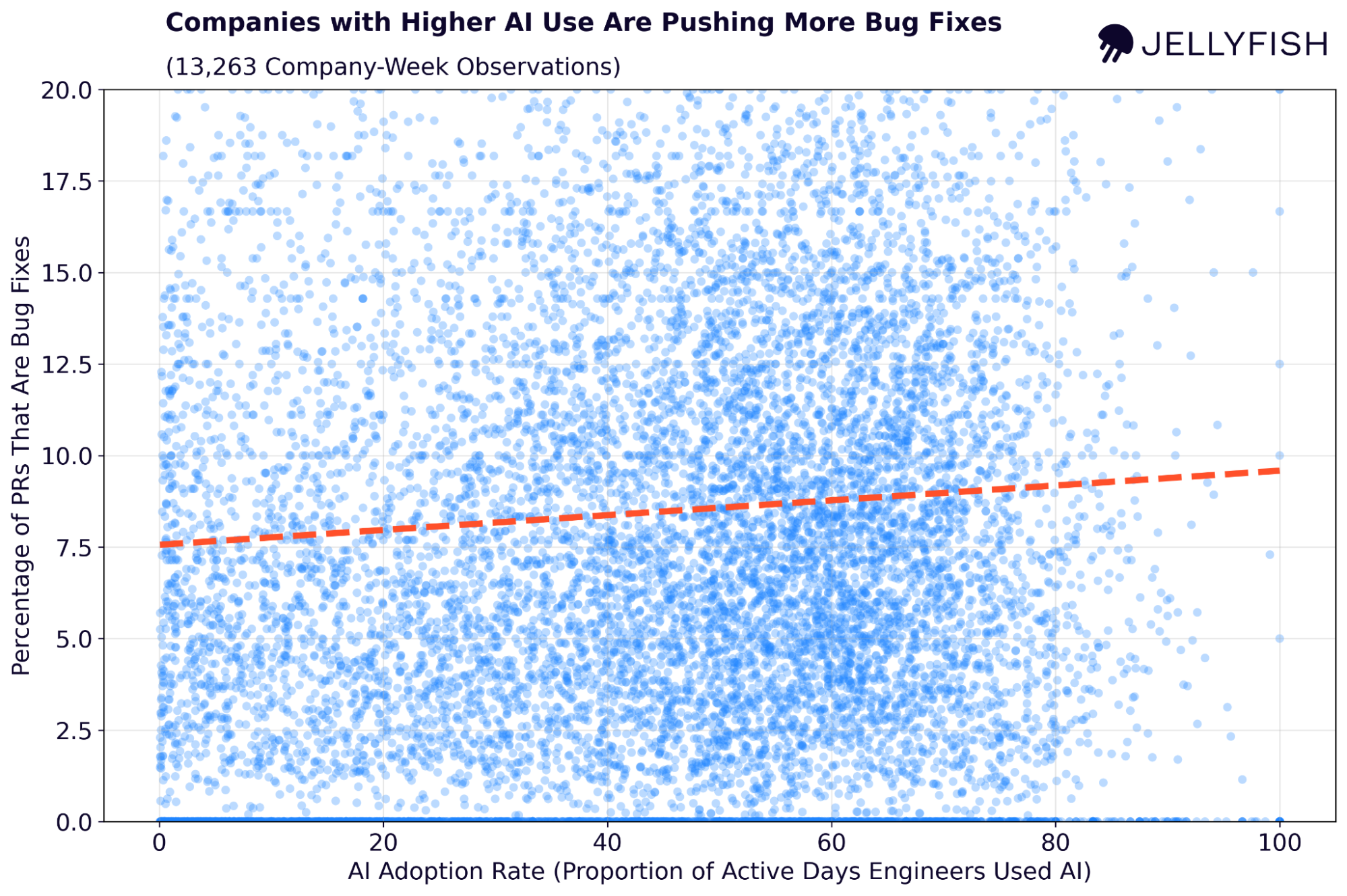

We defined a company-wide “AI Adoption Rate” that reflects how regularly engineers on a team are using AI tools. It is defined as the fraction of active coding days on which an engineer used AI, averaged across all engineers on the team. In the accompanying plots, each data point is a snapshot of a given performance metric and a company’s level of AI adoption for a single week, allowing us to see trends as companies achieve higher levels of AI adoption.
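As a rough illustration, the definition above could be computed along these lines. This is a minimal sketch, not Jellyfish's actual pipeline: the function name, the input format (one pair of active coding days and AI-assisted days per engineer), and the choice to skip engineers with no active coding days are all assumptions made for the example.

```python
def team_adoption_rate(engineers):
    """Average, across engineers, of the fraction of active coding days with AI use.

    `engineers` is a list of (active_coding_days, ai_days) pairs; this input
    format is hypothetical. Engineers with zero active coding days are skipped,
    which is an assumption, since the article does not say how they are handled.
    """
    rates = [
        ai_days / active_days
        for active_days, ai_days in engineers
        if active_days > 0
    ]
    if not rates:
        return 0.0
    return sum(rates) / len(rates)


# Three hypothetical engineers in one week: one used AI on every active day,
# one on half of them, and one had no active coding days at all.
team = [(5, 5), (4, 2), (0, 0)]
rate = team_adoption_rate(team)
print(f"{rate:.0%}")  # 75%
```

Note that this averages per-engineer fractions rather than pooling all days together, which matches the wording "averaged across all engineers on the team".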

What we see is that in terms of PR throughput, going from 0% AI adoption to 100% adoption corresponds to an increase from 1.36 PRs per engineer on average to 2.90 PRs – a 113% increase.

Increasing AI adoption also correlates with pushing code to production faster. In going from 0% to 100% AI adoption, an average company can expect its median cycle time to drop by four hours, from 16.7 hours to 12.7 hours – a 24% reduction.
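The two headline percentages follow directly from the endpoint values quoted above. A small sketch of the arithmetic (the helper name `percent_change` is ours, not from the study):

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100.0


# PR throughput per engineer at 0% vs 100% AI adoption (figures from the text).
throughput_change = percent_change(1.36, 2.90)

# Median cycle time in hours at 0% vs 100% AI adoption (figures from the text).
cycle_time_change = percent_change(16.7, 12.7)

print(f"PR throughput: {throughput_change:+.0f}%")  # +113%
print(f"Cycle time:    {cycle_time_change:+.0f}%")  # -24%
```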

But what about code quality?

One of the things we looked at was the fraction of total PRs that are linked to bug tickets. We see that the highest levels of AI adoption correspond to a relative increase of 26.8% in the proportion of PRs linked to bug tickets (9.5% versus a baseline of 7.5%). In other words, companies with high AI adoption are pushing more code, and a higher fraction of those PRs are bug fixes.
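It helps to separate the change in percentage points from the relative change here, since both are in play. The sketch below uses only the rounded shares quoted in the text, so it lands on roughly 26.7% rather than the reported 26.8%. We assume the small gap comes from the reported figure being computed on unrounded values, which the article does not confirm.

```python
# Share of PRs linked to bug tickets, in percent (rounded figures from the text).
baseline_share = 7.5
high_adoption_share = 9.5

# Absolute difference, in percentage points.
point_change = high_adoption_share - baseline_share

# Relative difference, as a percent of the baseline share.
relative_change = point_change / baseline_share * 100.0

print(f"{point_change:.1f} percentage points")
print(f"{relative_change:.1f}% relative increase")
```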

It may be that higher AI use is causing more bugs, or it may be helping teams fix more bugs – or it may be a combination of both. One interesting observation is that we aren’t seeing a comparable increase in revert PRs (pull requests whose primary purpose is to undo previous changes, typically due to a critical failure), which suggests that AI isn’t causing major quality issues across the board.

AI tools still need human judgment to deliver quality at speed

While errors are few and far between, they still diminish the time savings and productivity gains from AI and show just how vital human skill and oversight remain in the AI era. As AI models improve and training and usage increase, we expect quality and speed to further improve.

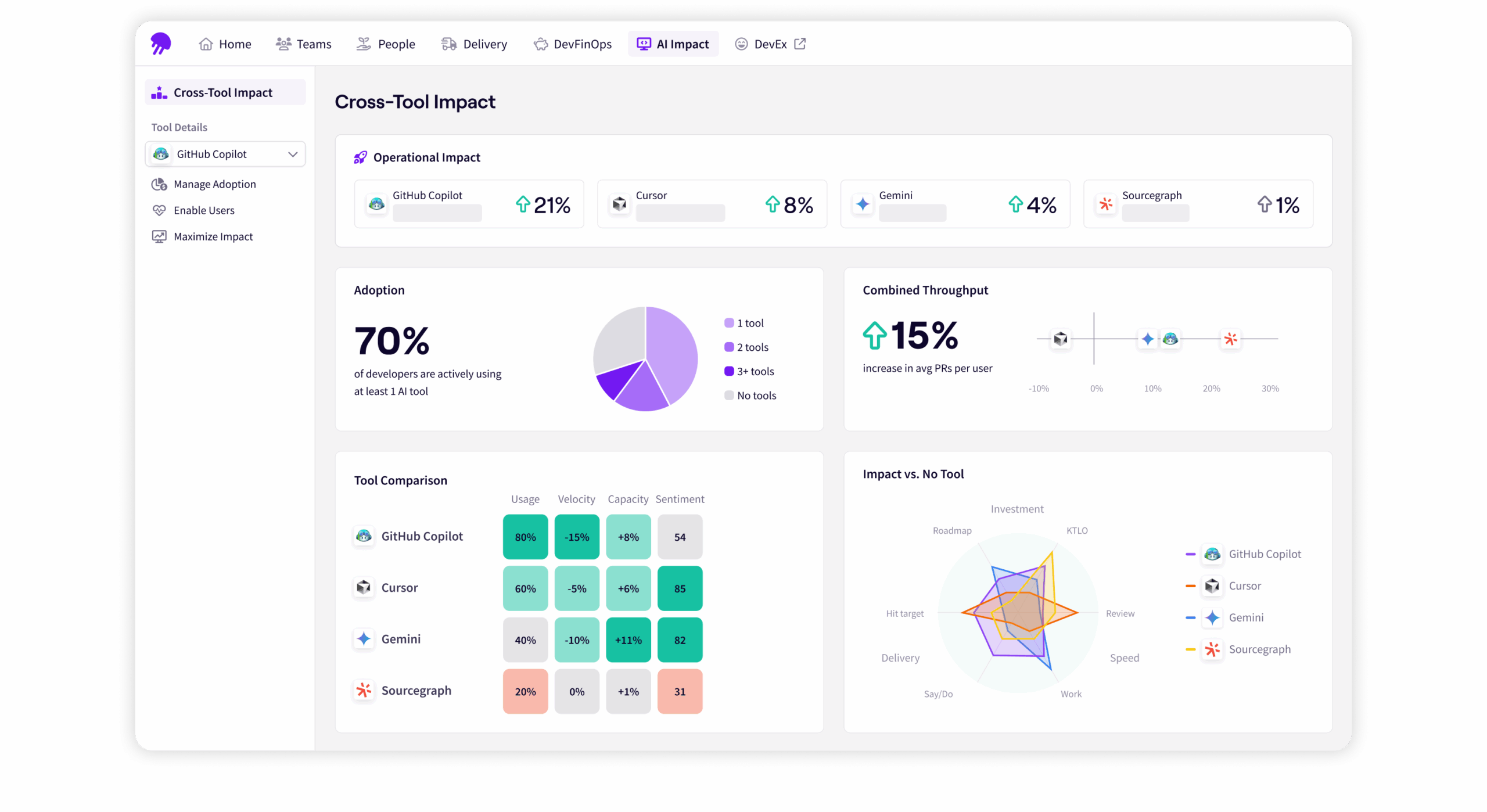

Get Started with AI Impact

Get a better understanding of AI’s impact on your engineering team. Learn more about Jellyfish AI Impact and get a demo.

Learn More

About the author

Nicholas Arcolano, Ph.D. is Head of Research at Jellyfish where he leads Jellyfish Research, a multidisciplinary department that focuses on new product concepts, advanced ML and AI algorithms, and analytics and data science support across the company.