R&D is quickly evolving into a hybrid AI-Human ecosystem. In our recently released State of Engineering Management report (SEMR), eight in 10 respondents believe that at least 25% of the engineering work humans do today will be handled by AI five years from now. And AI use itself has exploded. The same report shows widespread AI coding tool adoption, with use topping 90%, up from 61% just a year ago. This finding is backed up by Jellyfish platform data showing 51% of PRs in May 2025 used AI, compared to just 14% in June 2024.

The sea change is indisputable. AI is here to stay. R&D leaders now must shift their focus to understand how to best adopt, use, measure, and drive impact with these tools.

Calibrating AI Success

Jellyfish was built to give engineering leaders the insights our founders wished they had when leading R&D teams. Today, as the leading Software Engineering Intelligence platform, Jellyfish supports more than 500 companies and nearly 100,000 engineers worldwide. As noted, the vast majority of these organizations are experimenting with or scaling their own AI adoption.

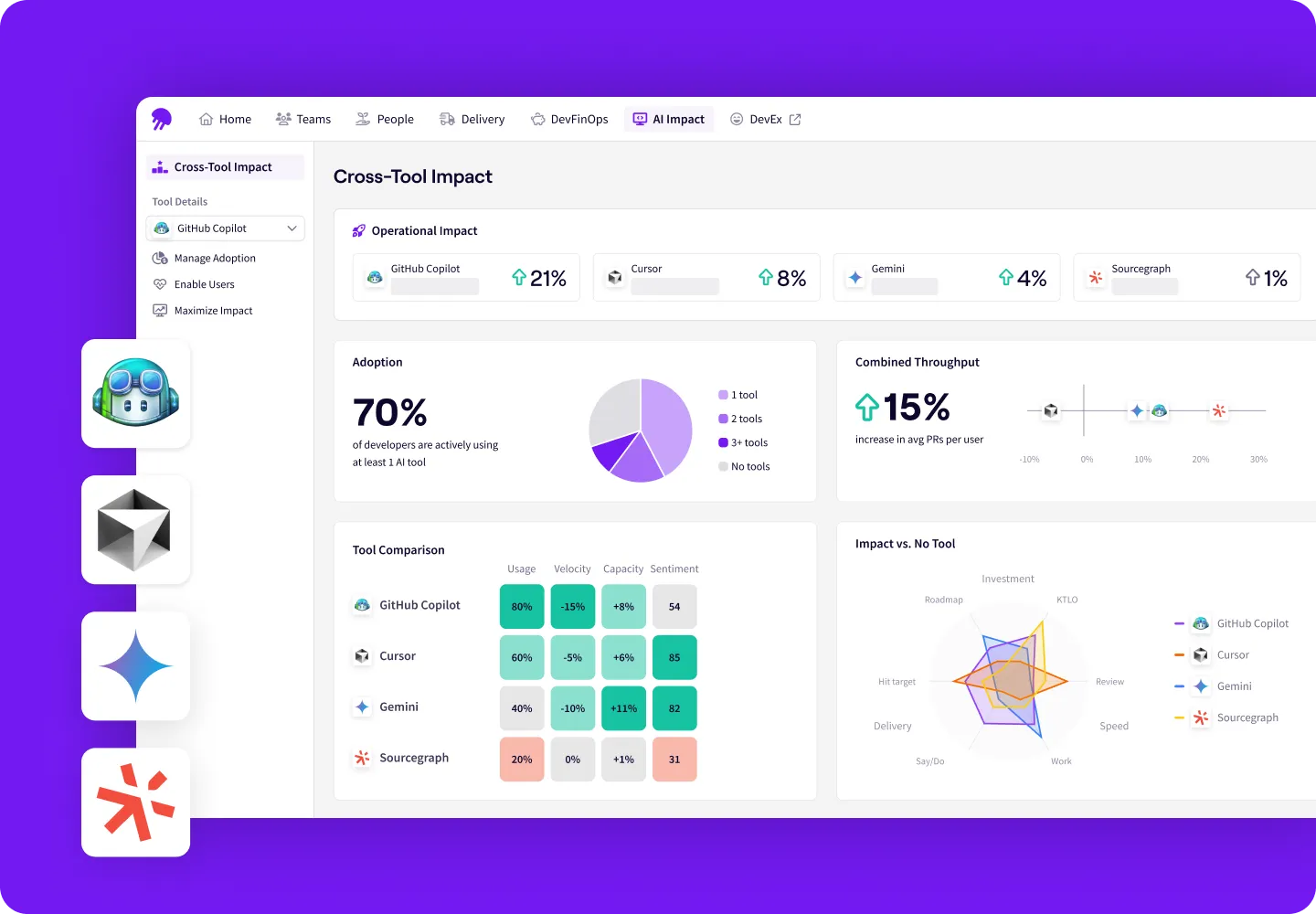

As the first to market with AI Impact, which measures AI coding tool adoption and impact across the most widely used AI products, we’ve seen a growing need for structure and guidance around the use of these tools.

While AI is at peak hype, our experience shows the real story is more complex: the future of software development is not fully automated. It’s hybrid. And success needs to be calibrated accordingly.

The Market Reality

There’s no shortage of AI coding tools available today. In fact, SEMR data shows that nearly half (48%) of engineering teams are using two or more, with leaders choosing a diversified, exploratory approach over standardizing on a single platform. The real challenge now is determining how to drive meaningful impact – regardless of tool.

Most R&D leaders we speak with are still sorting out how to:

- Support their teams through this AI evolution (revolution?)

- Manage and set pragmatic expectations with non-technical stakeholders and Boards

- Measure and communicate the return on investment

They’re looking for clarity in a fast-moving, messy ecosystem where tools are evolving quickly, results and engagement vary by developer preference (e.g., IDE vs. CLI), and performance depends on everything from programming language to developer trust and tenure.

The Jellyfish AI Impact Framework

Grounded in objective data from over 20,000 engineers and shaped through collaboration with more than 300 engineering leaders, the Jellyfish AI Impact Framework is built to:

- Provide inputs for engineering leaders and ICs to make informed decisions

- Adapt to the unique needs and culture of an organization

- Evolve in real time as the AI landscape expands across the software development lifecycle (SDLC)

Three Core Components

The Framework focuses on three key dimensions:

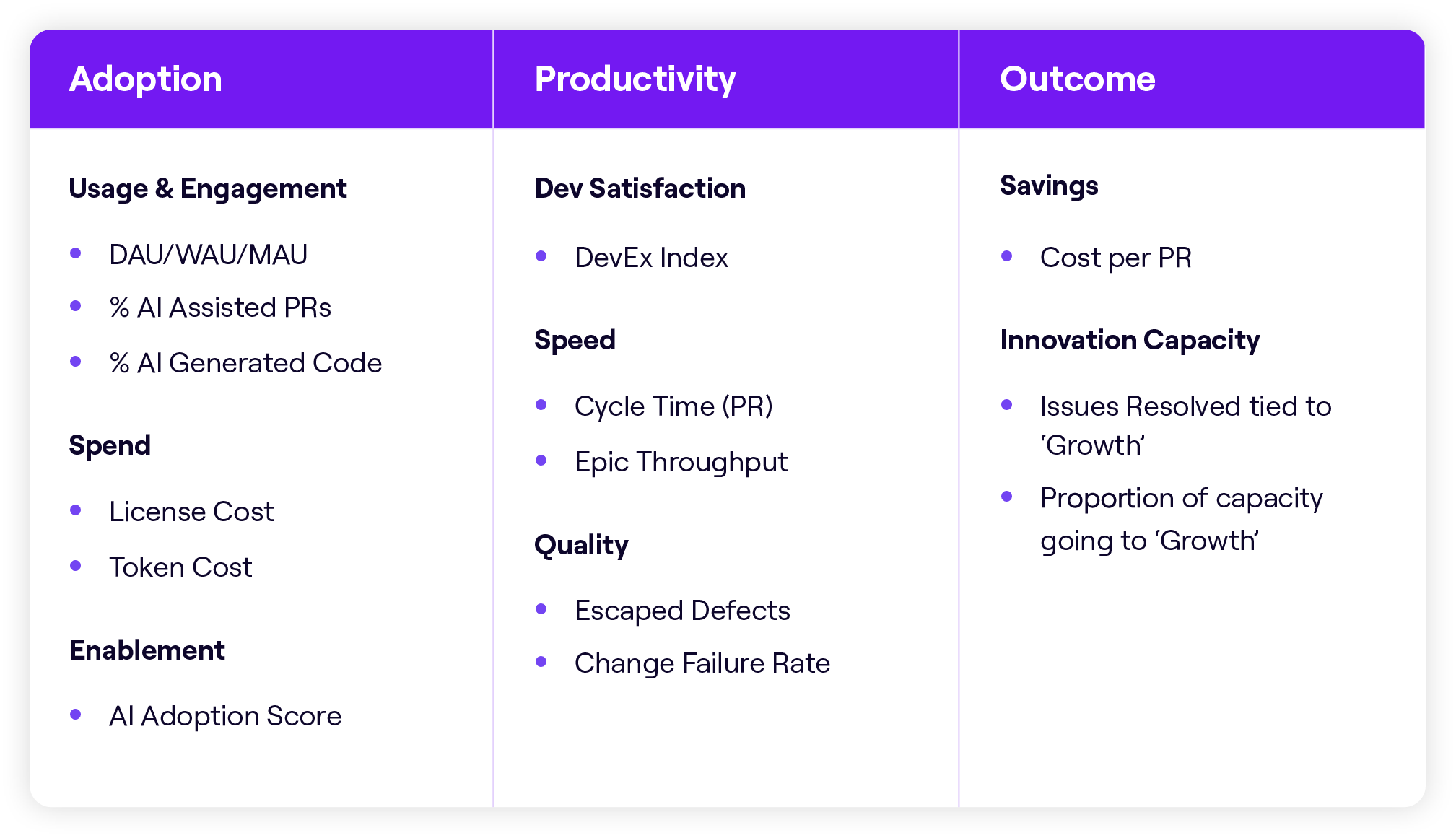

1. Adoption

Encourage teams to regularly engage with the provided tools, learn how to improve the efficacy of said tools, build trust in the outputs, and remove barriers to adoption.

Jellyfish tracks signals like usage, tool spend, and enablement metrics (e.g., AI Adoption Score*) to help leaders assess adoption maturity. At the Adoption stage, determine:

- How and how much are your teams using AI tools?

- Are there friction points blocking adoption?

- Are engineers maturing from experimentation to full adoption?

*AI Adoption Score is a composite score that measures how well an organization is doing at removing barriers to AI adoption, training users (e.g., on how best to prompt), and sharing knowledge.
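As a rough illustration of the first adoption question, the sketch below computes the share of PRs that used AI per month and lists which authors had at least one AI-assisted PR. It is not the AI Adoption Score and not how Jellyfish computes its metrics. The record layout, the `used_ai` flag, and the sample data are all hypothetical assumptions for this example.

```python
from collections import defaultdict

# Hypothetical PR records: (month, author, used_ai). Illustrative only, not real data.
prs = [
    ("2025-04", "ana", False),
    ("2025-04", "ben", True),
    ("2025-04", "ana", False),
    ("2025-04", "cy", False),
    ("2025-05", "ana", True),
    ("2025-05", "ben", True),
    ("2025-05", "cy", False),
    ("2025-05", "cy", True),
]


def ai_pr_share_by_month(records):
    """Return {month: fraction of PRs flagged as AI-assisted}."""
    totals = defaultdict(int)
    assisted = defaultdict(int)
    for month, _author, used_ai in records:
        totals[month] += 1
        if used_ai:
            assisted[month] += 1
    return {m: assisted[m] / totals[m] for m in sorted(totals)}


def active_ai_users_by_month(records):
    """Return {month: sorted authors with at least one AI-assisted PR}."""
    users = defaultdict(set)
    for month, author, used_ai in records:
        if used_ai:
            users[month].add(author)
    return {m: sorted(u) for m, u in sorted(users.items())}


share = ai_pr_share_by_month(prs)
users = active_ai_users_by_month(prs)
for month in share:
    print(month, f"{share[month]:.0%} of PRs used AI;", "authors:", users.get(month, []))
```

Tracking both numbers side by side helps separate broad adoption (many authors using AI occasionally) from concentrated use (a few authors producing most AI-assisted PRs).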

2. Productivity

Understand AI’s impact on productivity. Where are you seeing gains and in what scenarios (languages, type of work, etc.)? What learnings can be shared to drive greater productivity across the organization? At this stage, ask:

- How is AI affecting development throughput and team performance?

- Where are you seeing efficiency gains? What kinds of work are benefiting most?

- Are there new bottlenecks (e.g., delayed PR reviews) limiting broader productivity gains?
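One way to start answering these questions is to compare a throughput signal for AI-assisted and non-assisted work within the same scenario. The sketch below compares median PR cycle time by programming language. The choice of cycle time as the signal, the record layout, and the sample values are assumptions made for illustration, not metrics prescribed by the Framework.

```python
from collections import defaultdict
from statistics import median

# Hypothetical PRs: (language, used_ai, cycle_time_hours). Illustrative only.
prs = [
    ("python", True, 10.0),
    ("python", True, 14.0),
    ("python", False, 20.0),
    ("python", False, 24.0),
    ("go", True, 18.0),
    ("go", False, 16.0),
    ("go", False, 20.0),
]


def median_cycle_time(records):
    """Return {(language, used_ai): median cycle time in hours}."""
    groups = defaultdict(list)
    for language, used_ai, hours in records:
        groups[(language, used_ai)].append(hours)
    return {key: median(values) for key, values in groups.items()}


medians = median_cycle_time(prs)
for language in sorted({lang for lang, _ in medians}):
    with_ai = medians.get((language, True))
    without_ai = medians.get((language, False))
    if with_ai is None or without_ai is None:
        continue  # nothing to compare for this language
    change = (with_ai - without_ai) / without_ai
    print(f"{language}: {with_ai:.1f}h with AI vs {without_ai:.1f}h without ({change:+.0%})")
```

A gap between the two groups is a prompt for investigation rather than proof of causation: the kinds of tasks, the developers involved, and the sample sizes can all differ between groups.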

3. Outcomes

Work to understand business impact. Fundamentally, you should aim to understand:

- Are AI tools improving R&D costs (typically in the form of savings)?

- Are AI tools helping R&D produce more output (e.g., more revenue-generating work, characterized here as “growth”-oriented work)?

- What is the distribution of engineering allocation with AI-assisted tools (e.g., is more “growth” work happening, or is the team addressing other needs with AI tools)?
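The allocation question can be sketched as a simple share calculation: how effort is distributed across categories of work, split by whether AI tools were involved. In the example below, only the “growth” category comes from the text above. The other category names, the engineer-days unit, and the sample values are hypothetical assumptions.

```python
from collections import defaultdict

# Hypothetical effort records: (category, used_ai, engineer_days). Illustrative only.
work = [
    ("growth", True, 30.0),
    ("growth", False, 10.0),
    ("maintenance", True, 10.0),
    ("maintenance", False, 20.0),
    ("support", True, 10.0),
    ("support", False, 20.0),
]


def allocation_share(records, used_ai):
    """Return {category: share of effort} for AI-assisted or non-assisted work."""
    totals = defaultdict(float)
    for category, flag, days in records:
        if flag == used_ai:
            totals[category] += days
    overall = sum(totals.values())
    if overall == 0:
        return {}
    return {category: days / overall for category, days in sorted(totals.items())}


with_ai = allocation_share(work, used_ai=True)
without_ai = allocation_share(work, used_ai=False)
for category in sorted(set(with_ai) | set(without_ai)):
    print(
        f"{category}: {with_ai.get(category, 0.0):.0%} of AI-assisted effort, "
        f"{without_ai.get(category, 0.0):.0%} of other effort"
    )
```

Comparing the two distributions over time shows whether AI-assisted effort is shifting toward “growth” work or being absorbed by other needs.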

For each component, the Framework provides suggested metrics along with industry benchmarks, but also allows flexibility so you can tailor our guidance to your workflows.

How to Use the Framework

The Framework is meant to be more than a measurement tool: it is both a scorecard and an operational playbook that sets your R&D organization up for long-term success and works across stages.

Keep in mind that deploying AI tools does not equal immediate transformation. Success comes from intentional use, iterative learning, and ongoing support.

- First, focus on adoption. Help your teams engage regularly with AI tools. Build trust in outputs, and remove friction points.

- Next, analyze productivity. Identify patterns of success – what tools, tasks, or teams are benefiting most? Spot new bottlenecks and share learnings.

- Finally, measure outcomes. Understand ROI in terms of cost savings and value (software) provided to the business.

Looking Ahead

The Jellyfish AI Impact Framework is here to bring structure and clarity to the uncertainty in a rapidly evolving landscape. Whether you’re just getting started or scaling AI across your organization, this Framework will help you:

- Align teams around shared goals

- Track progress over time

- Make strategic, data-informed decisions

The AI era isn’t just about speed. It’s about enabling teams to work smarter, drive better outcomes, and build the future of software through a human-AI partnership.

To keep up with the rapidly evolving AI landscape, we will update benchmarks and the Framework on a regular basis, including telemetry for agentic tools.

About the author

Ryan is SVP and Field CTO at Jellyfish.