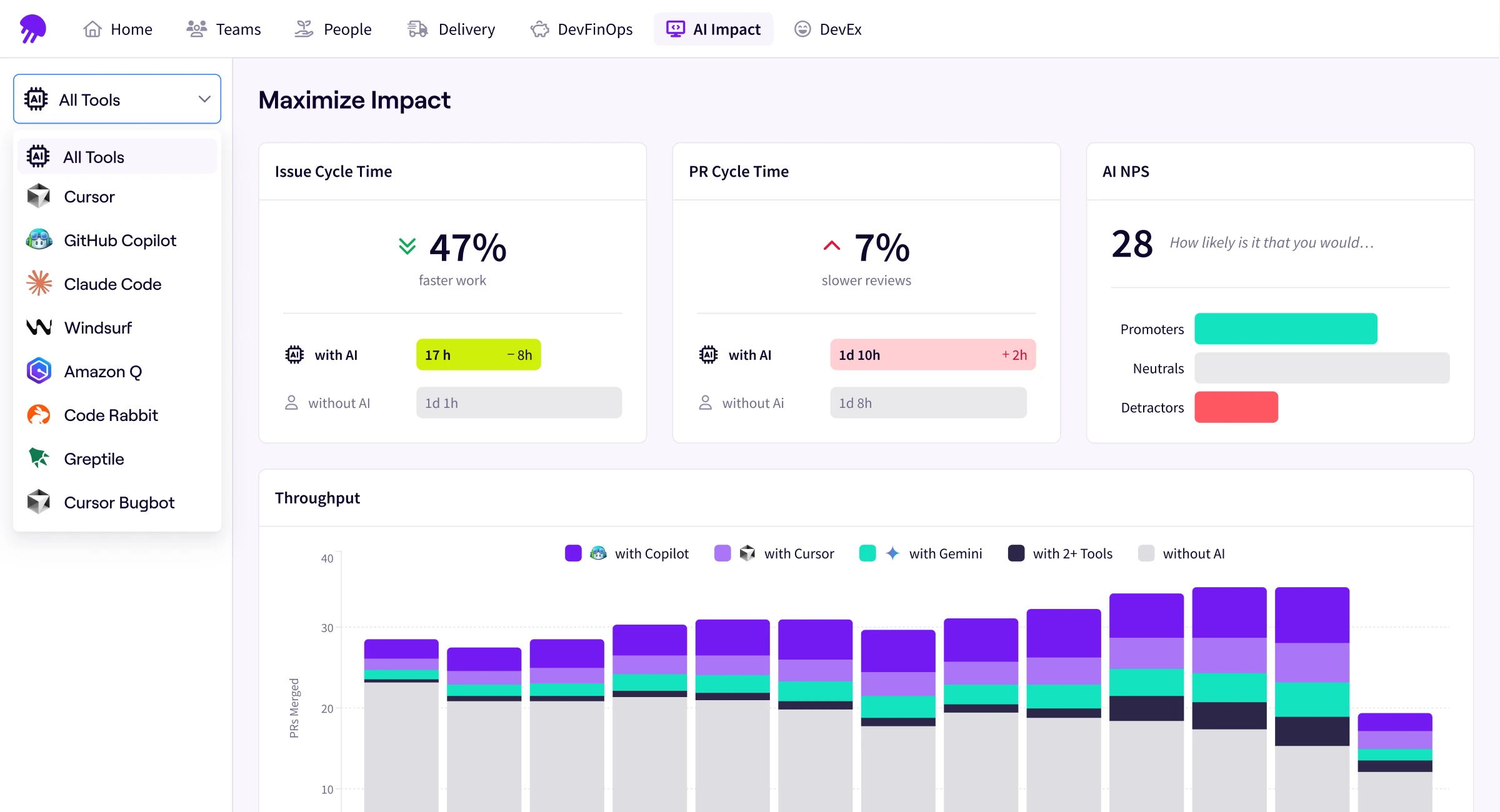

Last month we released new data showing that the use of autonomous coding agents is still in its early days. While many companies are exploring these agents, the lack of sustained traction suggests that some teams are struggling to integrate them into durable workflows.

Some early adopters, however, have successfully adapted to an AI-centered development lifecycle. We wanted to understand what differentiates these leaders’ workflows, what patterns have emerged, and what other teams can learn from their success.

Among teams that saw sustained use of agents, a recurring theme emerged: they treated autonomous agents like “junior engineers,” able to be pointed at a clear solution and asked to implement it, but not expected to tackle more complex work. These teams integrated agents effectively when they assigned them well-defined, well-scoped tasks.

We saw this in practice when we examined one company with an unusually high agent adoption rate. Over the last three months, this company included agents in 12.96% of its PRs, nearly nine times the average rate among companies experimenting with agentic workflows.

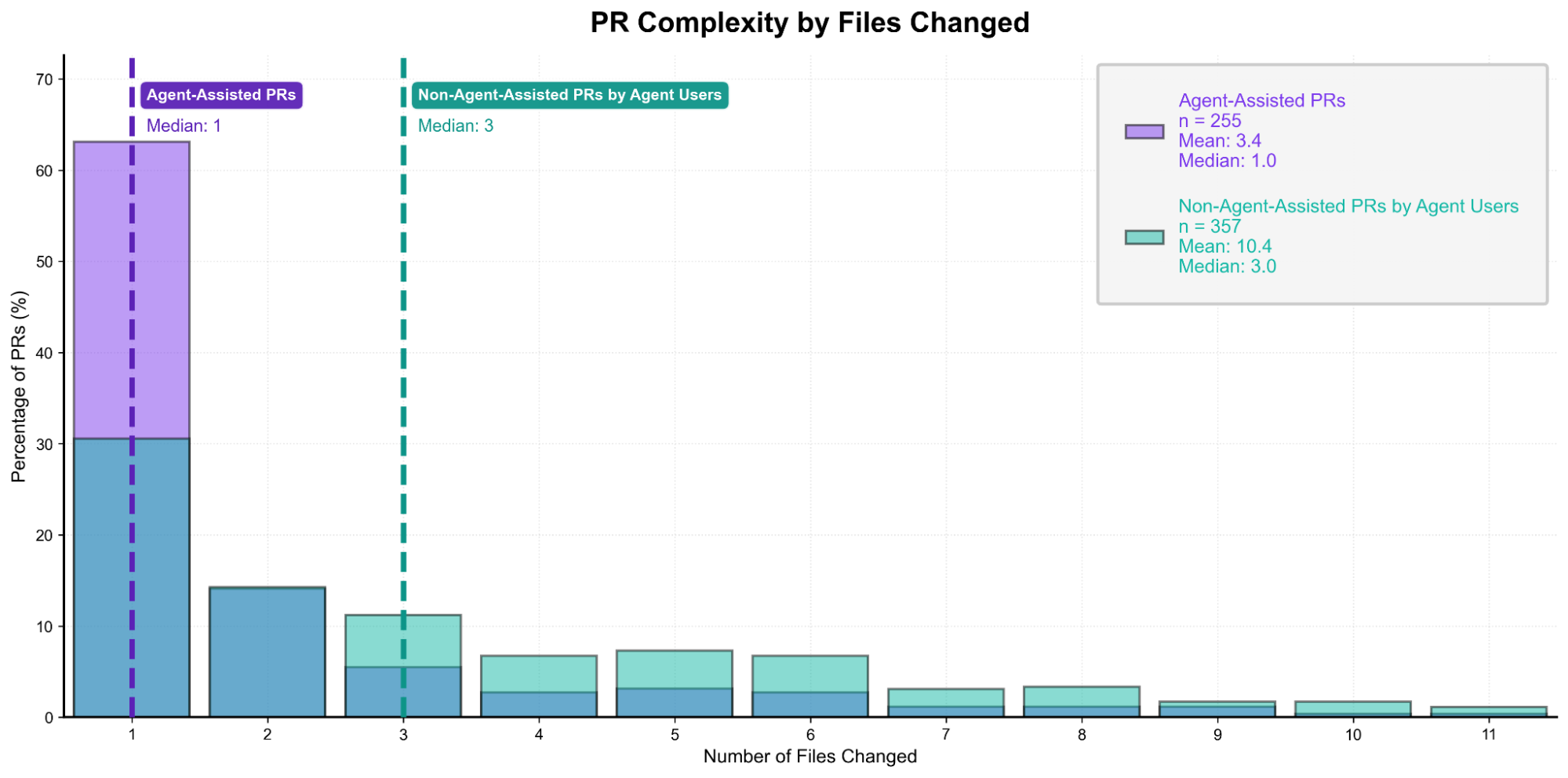

When this company uses agents, we see a decrease in both PR complexity (measured by the number of files changed) and PR size (measured by the number of lines changed in the diff). Among developers who use agents, agent-assisted PRs were 12% smaller than their non-agent-assisted PRs. Additionally, the median agent-assisted PR touched one file, compared with three for non-agent-assisted PRs.
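
To make these two metrics concrete, here is a minimal sketch of how such a comparison could be computed. It is not Jellyfish’s methodology. It assumes each PR record carries a hypothetical `author`, `files_changed`, `lines_changed` (lines added plus lines deleted), and `agent_assisted` flag, and it compares medians, which is our assumption since the post does not say which statistic underlies the 12% figure. The sample PRs are invented purely for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PullRequest:
    author: str
    files_changed: int   # complexity proxy: number of files touched
    lines_changed: int   # size proxy: lines added plus lines deleted in the diff
    agent_assisted: bool


def compare_pr_shapes(prs: list[PullRequest]) -> dict[str, float]:
    """Compare agent-assisted and other PRs for developers who use agents."""
    # Keep only authors with at least one agent-assisted PR, so agent work
    # is compared against other work from the same group of developers.
    agent_users = {p.author for p in prs if p.agent_assisted}
    cohort = [p for p in prs if p.author in agent_users]
    agent = [p for p in cohort if p.agent_assisted]
    other = [p for p in cohort if not p.agent_assisted]

    agent_size = median(p.lines_changed for p in agent)
    other_size = median(p.lines_changed for p in other)
    return {
        "median_files_agent": median(p.files_changed for p in agent),
        "median_files_other": median(p.files_changed for p in other),
        # Fraction by which the median agent-assisted PR is smaller.
        "size_reduction": 1 - agent_size / other_size,
    }


# Invented sample data, for illustration only.
sample = [
    PullRequest("ana", 1, 40, True),
    PullRequest("ana", 3, 100, False),
    PullRequest("ben", 1, 60, True),
    PullRequest("ben", 2, 90, True),
    PullRequest("ben", 2, 50, False),
    PullRequest("ben", 5, 300, False),
    PullRequest("cara", 8, 1000, False),  # never uses agents, so excluded
]

print(compare_pr_shapes(sample))
```

With the invented sample, the sketch reports a median of one file for agent-assisted PRs versus three for the rest, and a median diff that is 40% smaller; the author who never uses agents is left out of the comparison. Comparing medians rather than means keeps a single large refactor from dominating the size figure.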

One might attribute the size difference to agents being less verbose than humans, but conventional wisdom and evidence from previous work suggest the opposite. Because agents tend toward verbosity, the smaller, less complex PRs we observed point to something more deliberate: teams are intentionally identifying narrow, well-defined tasks and assigning them to agents rather than letting agents tackle broader problems.

In practice, this work consists of paper cuts, small UI bugs, and other low-level fixes that require little deliberation. Informational changes and small updates fit this profile too: clear adjustments that can be completed and shipped quickly. These are tasks where the solution is already determined and only needs to be executed, not worked out. This has opened the door for non-engineering roles to take on work that traditionally required developer time: we are seeing product managers and designers increasingly use agents to make small code changes, answer technical questions, and implement minor fixes themselves rather than pulling engineers into these tasks.

Confining agents to small-scoped work appears to stem from one of two factors: either teams don’t fully trust agents with higher-level work, or agents don’t yet have the capability and context to handle senior- and staff-level tasks. On the capability side, the constraint is less about raw technical ability than about context: agents often lack the organizational knowledge, architectural understanding, and codebase familiarity that complex work requires, and teams haven’t yet invested the resources needed to close this gap. Whether the cause is a capability limitation or a trust issue, the outcome is the same: agents haven’t yet made their way into high-variability work.

Agents aren’t yet making architectural decisions or working through ambiguous problems. Instead, they’re implementing solutions where the path forward is already clear.

To better understand the impact AI has on your engineering workflows, learn more about Jellyfish AI Impact or request a demo.

About the author

Sophie is a research intern at Jellyfish.