Make Confident Tooling Decisions

Benchmark AI assistants, agents, and emerging tools with a consistent, vendor-neutral model built on normalized software development lifecycle (SDLC) signals you can trust.

Get Unbiased Clarity

Measure every AI tool with one consistent, objective framework so you can compare their performance on equal terms.

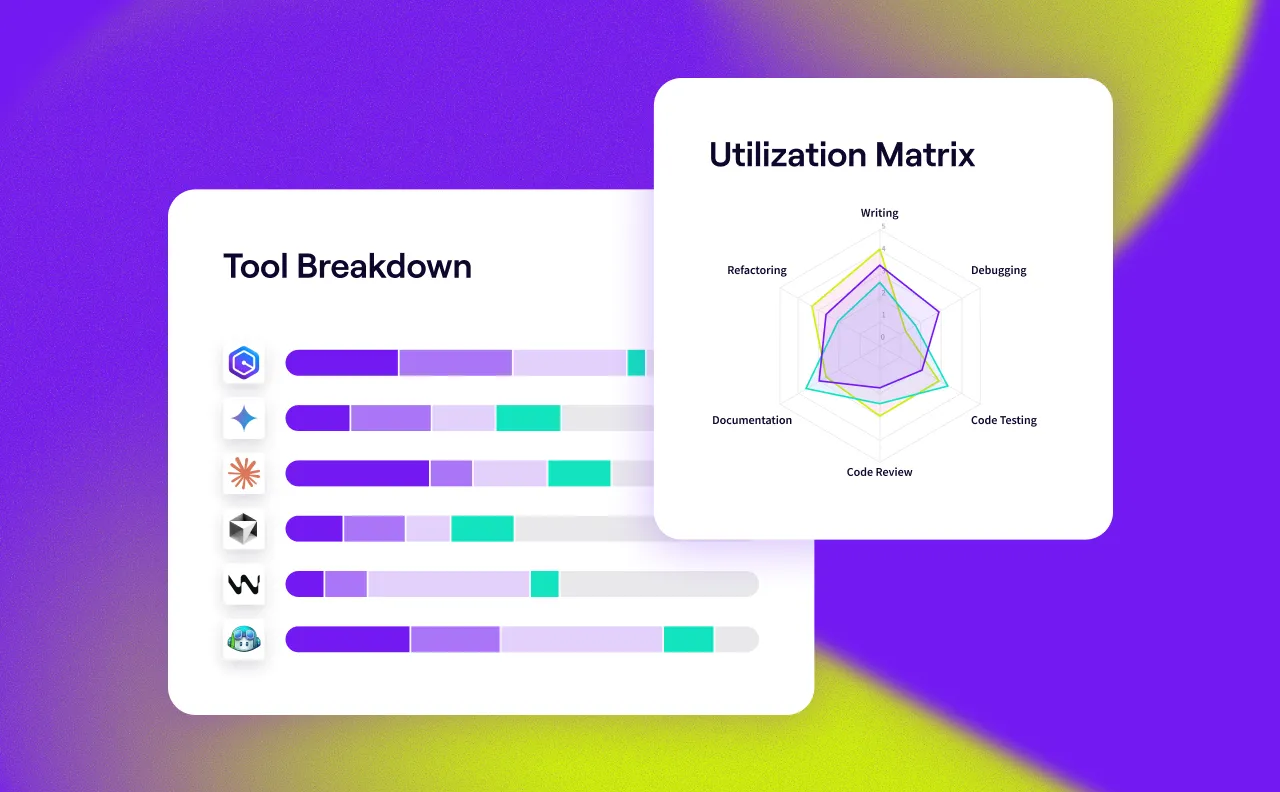

Match Tools to Workflows

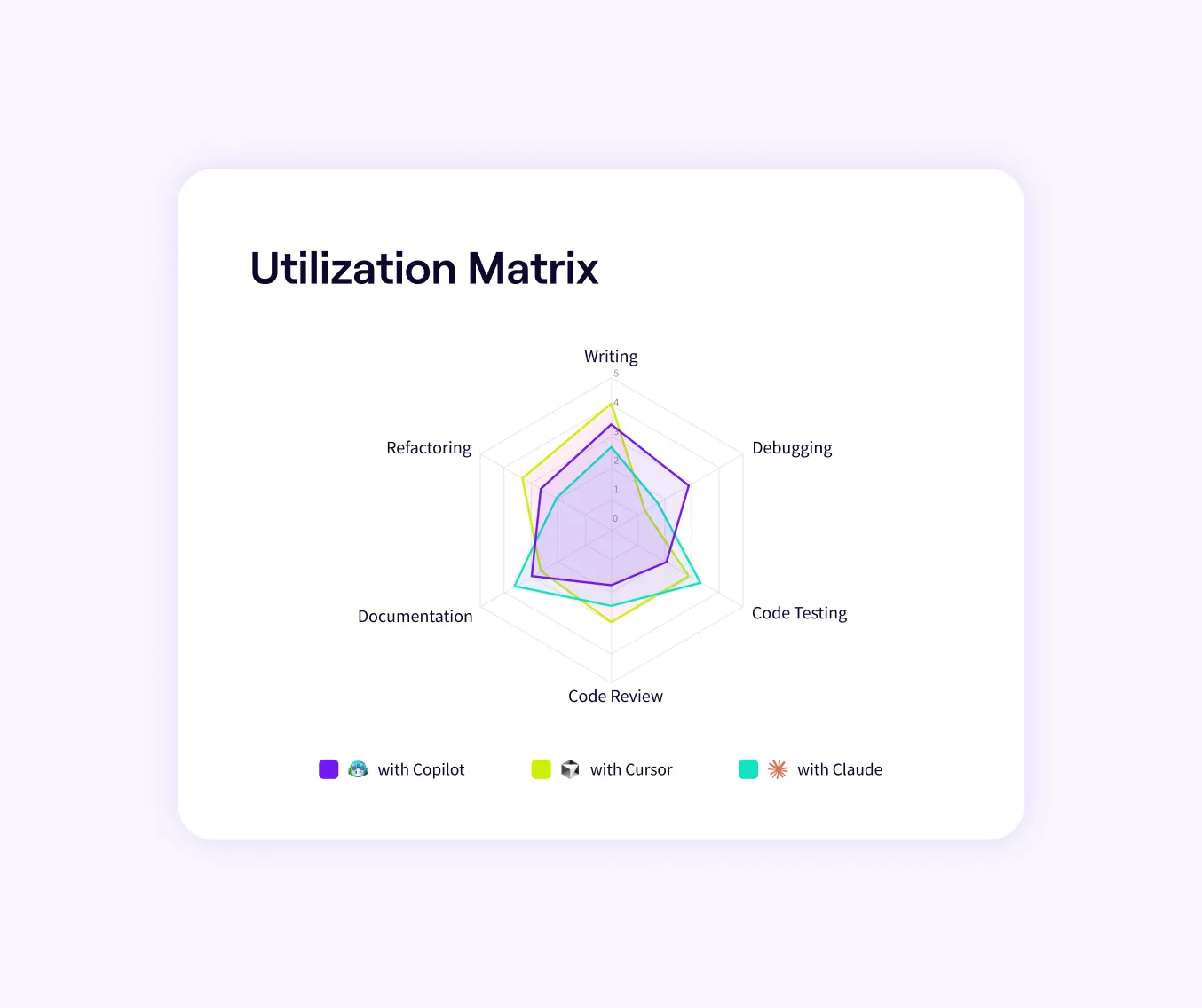

Understand how each tool performs across writing, review, testing, and more to optimize by task.

Invest With Evidence

Use trusted SDLC signals to back decisions on where to scale, cut, or shift your AI tool investments.

Jellyfish gives us a picture of how Copilot is reshaping our organization.

Nicole Nunziata

Director of Technology Operations at Varo

Choose the Right AI Tools with Data

Coverage Across Your Stack

Assess assistants, code-review agents, autonomous agents, and emerging tools in one consistent evaluation model.

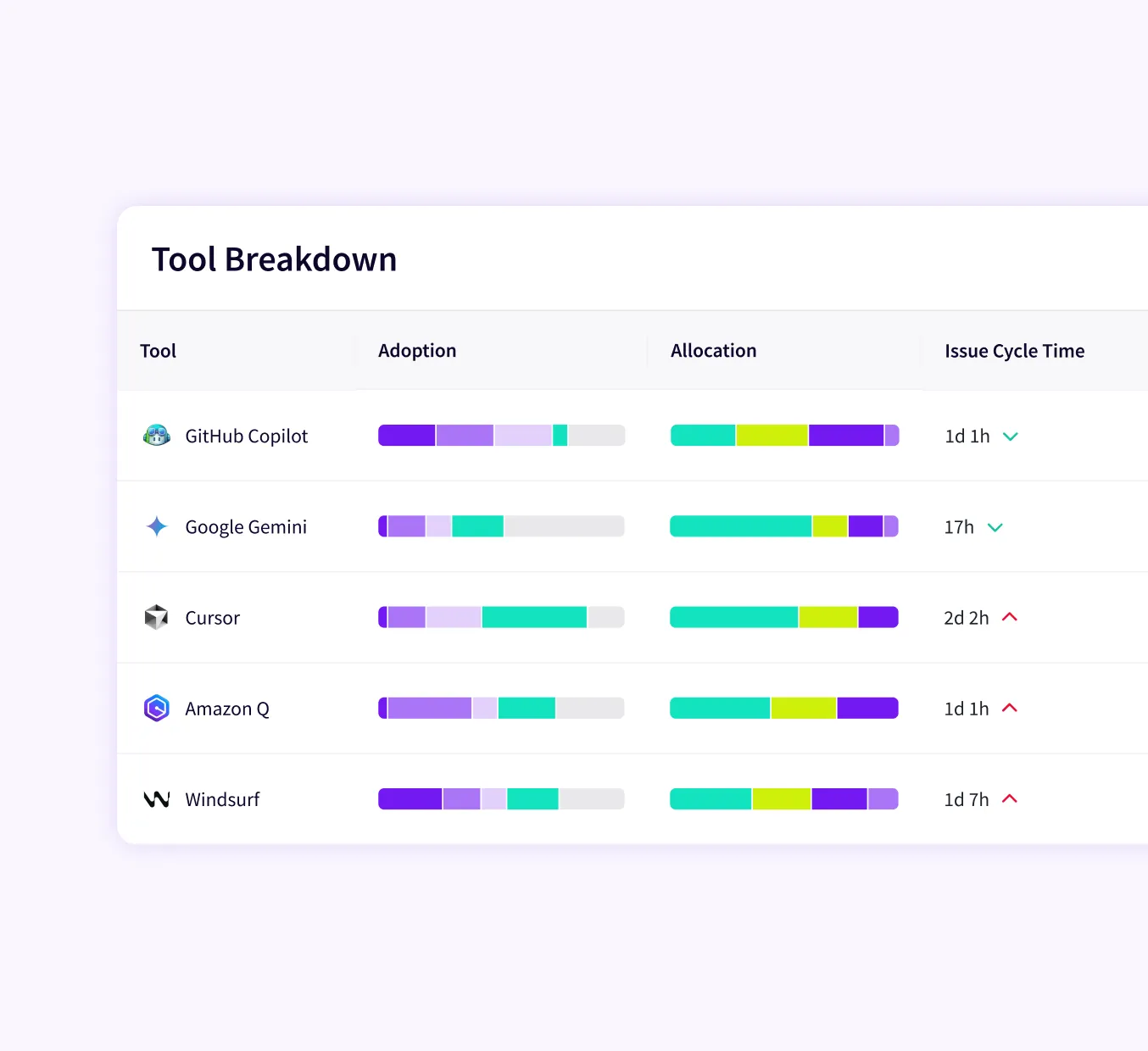

Compare Tool Performance

Track cycle time, throughput, and quality signals to identify your top-performing and underperforming tools.

Understand Delivery Impact

See how each tool influences pull request (PR) velocity, and where it accelerates or slows delivery.

Optimize Task Types

Identify which tools excel at writing, refactoring, debugging, reviewing, documenting, and more to choose the right fit for every task.

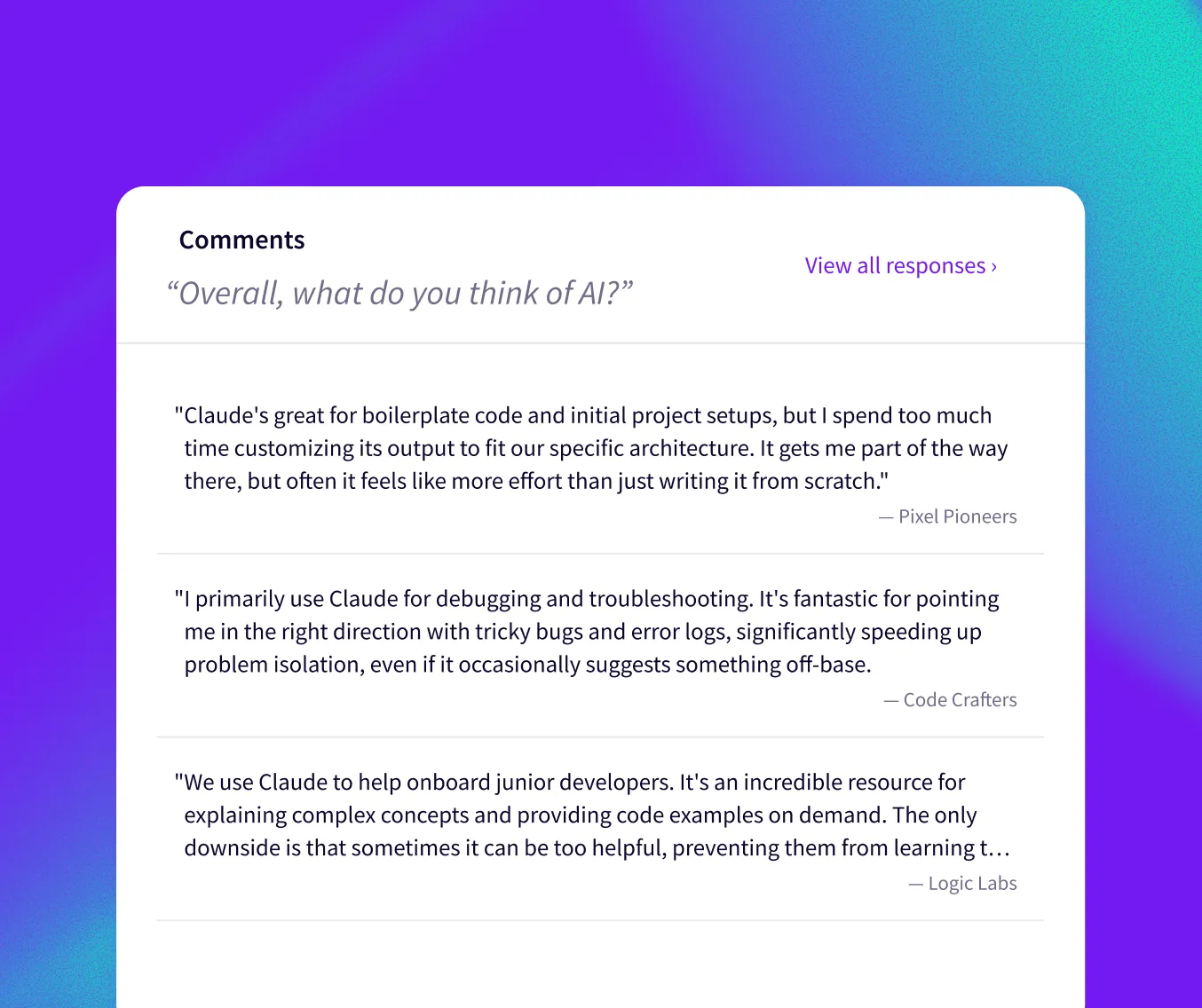

Include Developer Perspectives

Combine developer feedback with quantitative signals to understand tool usability, trust, and real-world preferences.

Integrate Your Full AI Tech Stack

Unify insights across assistants, review agents, and emerging systems without changing existing workflows.

AI Impact Research

In-depth frameworks and guidance for adopting, measuring, and scaling AI.