We all know the saying: you can’t manage what you can’t measure. That’s especially true when it comes to AI in engineering. While the industry is still early in defining universal standards, it’s rapidly converging on a common set of metrics, practices, and tooling that separate experimentation from real impact. To keep up, engineering leaders need an aggregate, system-level view of their AI investments – one that helps them measure progress, manage change, and understand how AI is actually impacting engineering efficiency.

That’s where Jellyfish AI Impact comes in. Jellyfish gives engineering leaders the data and guidance they need to measure AI adoption, adapt quickly, and drive lasting business outcomes no matter the AI tool in question.

Jellyfish connects directly to AI tools like GitHub Copilot, Cursor, Claude, and many others, ingesting vendor-provided API data on usage, events, users, and spend.

But that data is only the foundation.

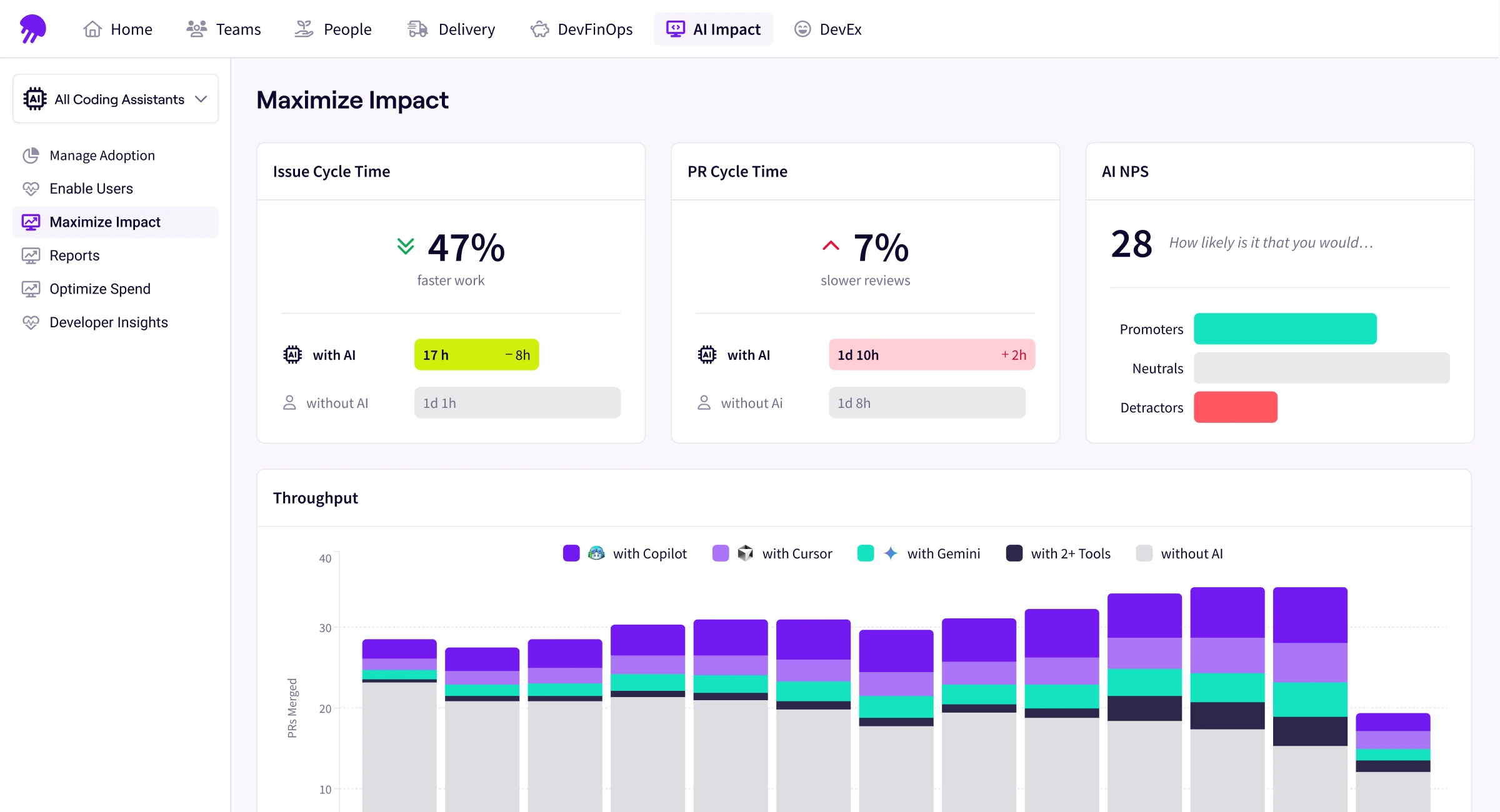

With AI Impact, Jellyfish goes further by analyzing pull requests to detect AI’s footprint in real code changes, correlating AI activity with PR metadata, reviews, and throughput, and integrating issue tracking systems like Jira, Linear, and Azure DevOps. This allows teams to align AI usage with delivery metrics such as cycle time and work type. By aggregating and correlating these signals across systems, Jellyfish creates a holistic view of AI’s impact on engineering productivity, not just tool engagement.

AI Impact goes far beyond what native AI dashboards were designed to deliver. Native dashboards lack cross-vendor visibility, deep source control analysis, and tight integrations with issue tracking and delivery systems, which makes it difficult to move from raw usage metrics to meaningful conclusions.

Why “Just Build It” Breaks Down in Practice

So when teams ask, “Why not just build this internally?”, the answer often sounds simple on paper. Pull data from vendor APIs, load it into a BI tool, create a few charts, and iterate. In practice, that approach breaks down quickly.

The first challenge is fragmentation and change. Every AI vendor exposes different schemas, event definitions, and update cadences. Multiply that across multiple tools, and what started as a lightweight dashboard turns into a permanent data maintenance project.
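To make the fragmentation problem concrete, here is a minimal sketch of the adapter layer an in-house build would need for just two tools. Everything in it is an assumption for illustration: `vendor_a` and `vendor_b`, their field names, and the `UsageRecord` shape are hypothetical and do not reflect any real vendor API or how Jellyfish ingests data.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone


@dataclass(frozen=True)
class UsageRecord:
    """One normalized row: a user's activity in one tool on one day."""
    tool: str
    user_email: str
    day: date
    events: int


def from_vendor_a(row: dict) -> UsageRecord:
    # Hypothetical vendor A: flat rows, ISO date strings, mixed-case emails.
    return UsageRecord(
        tool="vendor_a",
        user_email=row["email"].strip().lower(),
        day=date.fromisoformat(row["date"]),
        events=int(row["accepted"]),
    )


def from_vendor_b(row: dict) -> UsageRecord:
    # Hypothetical vendor B: nested user object, epoch-second timestamps (UTC).
    day = datetime.fromtimestamp(row["ts"], tz=timezone.utc).date()
    return UsageRecord(
        tool="vendor_b",
        user_email=row["user"]["email"].strip().lower(),
        day=day,
        events=int(row["event_count"]),
    )


records = [
    from_vendor_a({"email": "Dev@Example.com", "date": "2023-11-14", "accepted": "12"}),
    from_vendor_b({"user": {"email": "dev@example.com"}, "ts": 1700000000, "event_count": 7}),
]
```

Each additional tool means another adapter, and each vendor schema change means revisiting one. That ongoing upkeep is the maintenance burden described above.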

The second challenge is correlation. Linking AI usage to outcomes like PR throughput, cycle time changes, work types, and team-level performance requires inference, normalization, and modeling across systems that were never designed to work together. Small mistakes in this process can easily lead to misleading or overly simplistic conclusions.

Last but certainly not least is engineering time. Every hour spent maintaining pipelines, updating dashboards, refactoring models, or debugging identity mismatches is an hour not spent delivering product. Engineering leaders don’t win by building better dashboards; they win by delivering products that add business value.

A Single Pane of Glass for AI-Integrated Teams

Jellyfish AI Impact was built specifically for organizations using multiple AI tools across the software development lifecycle. With Jellyfish, teams get a single place to see adoption across all AI tools, side-by-side comparisons of coding, review, and agent-based tools, and AI usage enriched with delivery and flow metrics. Most importantly, they gain a clear view of how AI changes velocity and outcomes – not just activity.

This level of visibility becomes critical as AI shifts from experimentation to infrastructure. Native dashboards from AI coding vendors can tell you what happened, but they rarely explain what it means. Jellyfish bridges that gap by turning raw AI usage data into actionable insight. It automatically groups users into meaningful cohorts like Power, Casual, and Idle based on real usage patterns, shows how adoption differs by team, role, or type of work, and connects AI usage directly to changes in PR throughput and cycle time.
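As a rough illustration of what usage-based cohorting involves, the sketch below buckets users by how many days they were active in a trailing window. The thresholds, the 28-day window, and the `assign_cohort` function are assumptions made up for this example; they are not Jellyfish's actual cohort definitions.

```python
def assign_cohort(active_days: int, window_days: int = 28) -> str:
    """Bucket a user by the share of days they used an AI tool.

    Thresholds are hypothetical: active on at least half the days in the
    window is "Power", any activity at all is "Casual", none is "Idle".
    """
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    if not 0 <= active_days <= window_days:
        raise ValueError("active_days must be between 0 and window_days")

    if active_days / window_days >= 0.5:
        return "Power"
    if active_days > 0:
        return "Casual"
    return "Idle"


# Hypothetical per-user active-day counts over the last 28 days.
active_days_by_user = {"ana": 20, "ben": 3, "chi": 0}
cohorts = {user: assign_cohort(days) for user, days in active_days_by_user.items()}
```

Even a simple rule like this only works once usage from every tool has been normalized and tied to a single identity per engineer, which is where much of the real effort goes.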

With AI Impact, you get a holistic view of teams, codebases, and AI tools in one place, making it the strategic choice for organizations that want to level up their approach to AI adoption and measurement.

You can learn more and get started with AI Impact here.

About the author

Daniel Kuperman is VP of Product Marketing at Jellyfish.