Build AI-integrated engineering teams

AI tools alone won’t transform your org. Jellyfish provides insights into AI tool adoption, cost, and delivery impact – so you can make better investment decisions and build teams that use AI effectively.

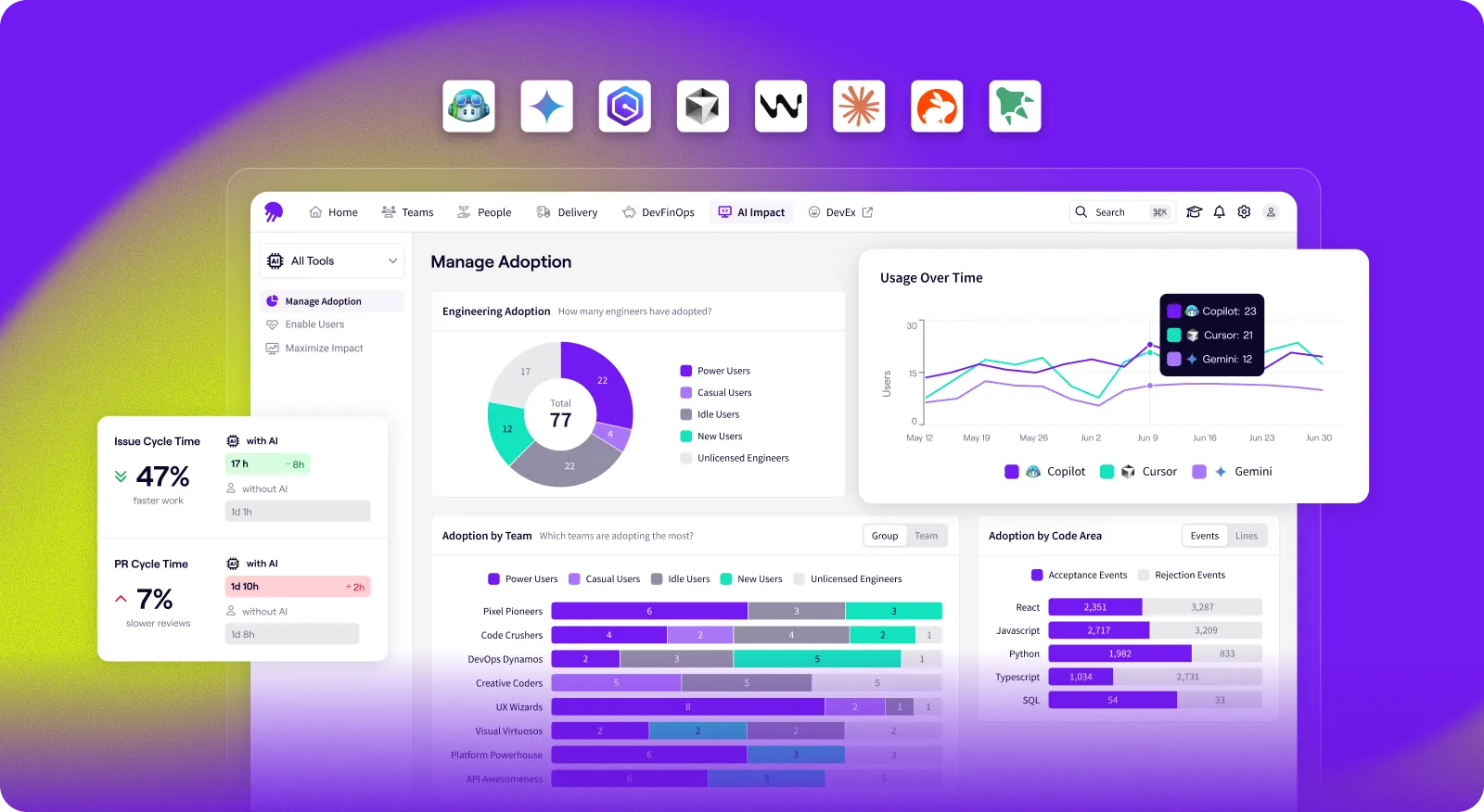

Measure the adoption and impact of AI across the SDLC

Gain clear visibility into how your AI tools impact team productivity – so you can confidently optimize your AI strategy and report on metrics that matter.

Drive adoption

Spot usage patterns, remove blockers, and scale what’s working by replicating top user behavior across teams

Maximize impact

See how AI tools perform in your workflows and quantify their effect on throughput, quality, and focus

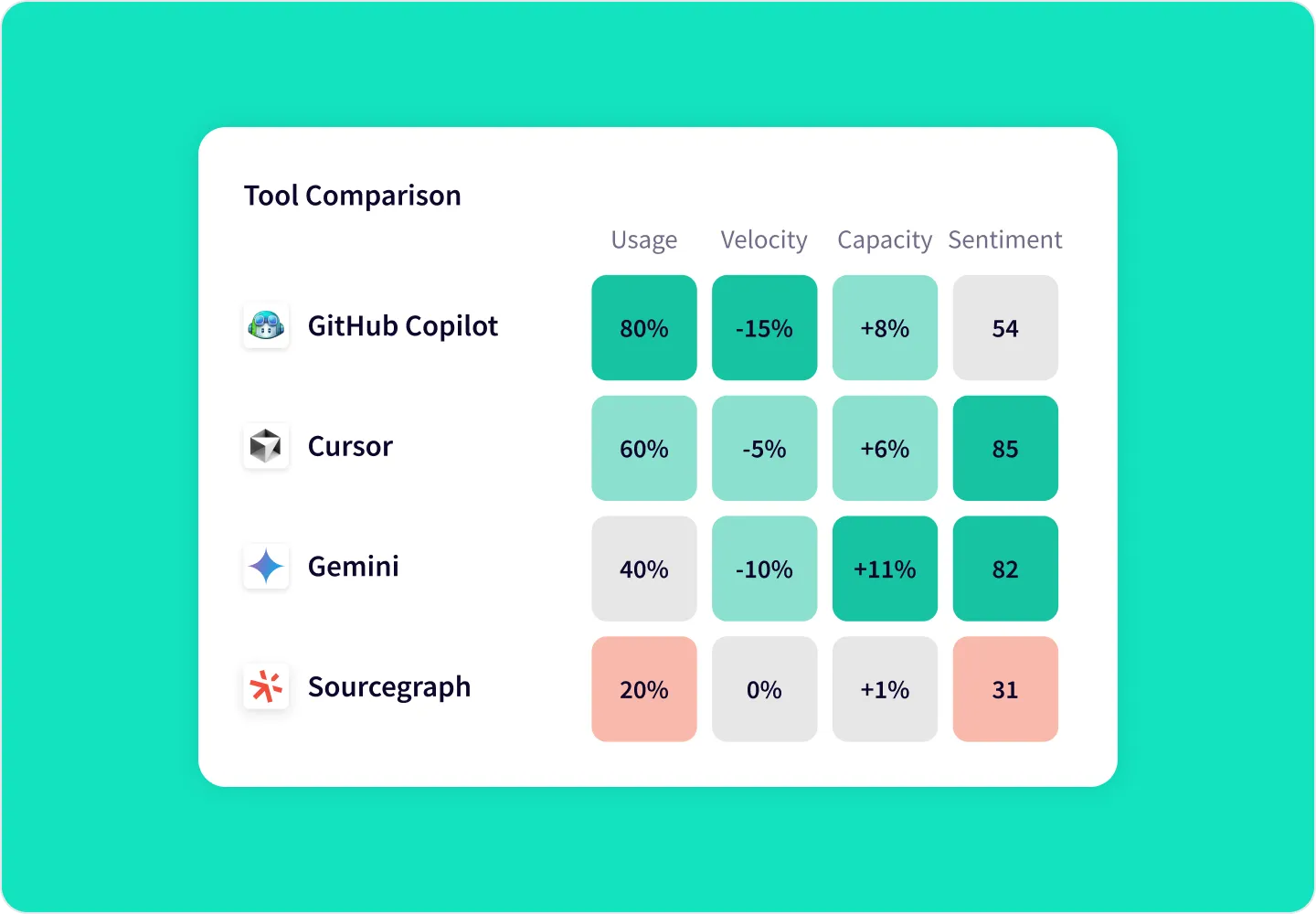

Optimize your AI toolset

Compare tool performance and spend, and match tools to the teams and tasks where they add the most value

What customers say about AI Impact

Thanks to Jellyfish’s integration with Cursor, we now have granular adoption data and the ability to benchmark against non-Cursor users. With clear signals on where productivity gains are happening, we can take learnings from the higher-performing teams and share them across the org so everyone can benefit.

When the Jellyfish AI Impact dashboard went live, the insights it generated answered leadership’s questions, fully validating the Copilot investment.

Jellyfish gives us a picture of how Copilot is reshaping our organization.

Jellyfish AI Impact allows you to understand:

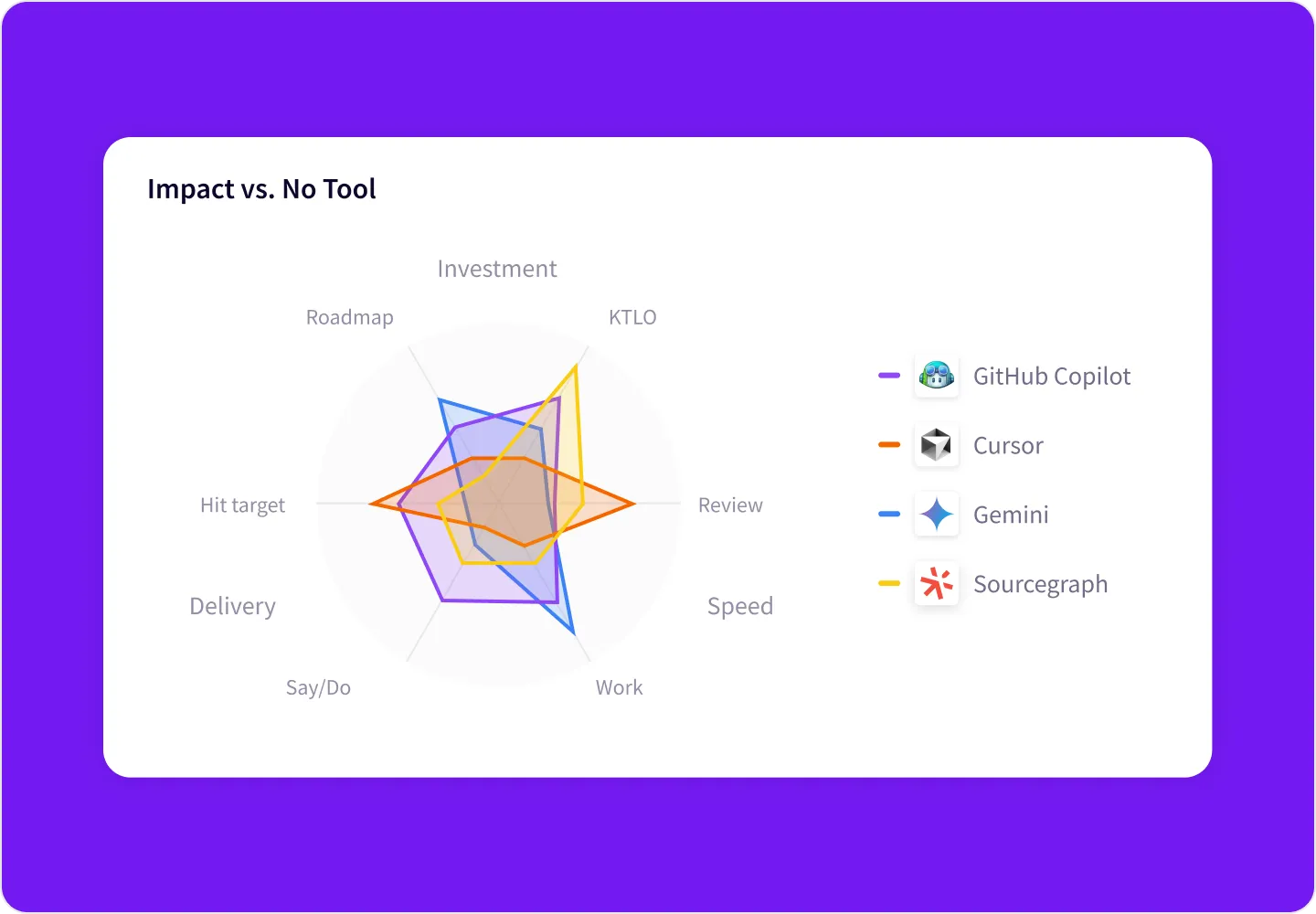

How are AI tools impacting productivity?

Measure how tools like Copilot, Cursor, Gemini, and Sourcegraph affect your teams by visualizing their impact on delivery speed, workflow efficiency, and resource allocation.

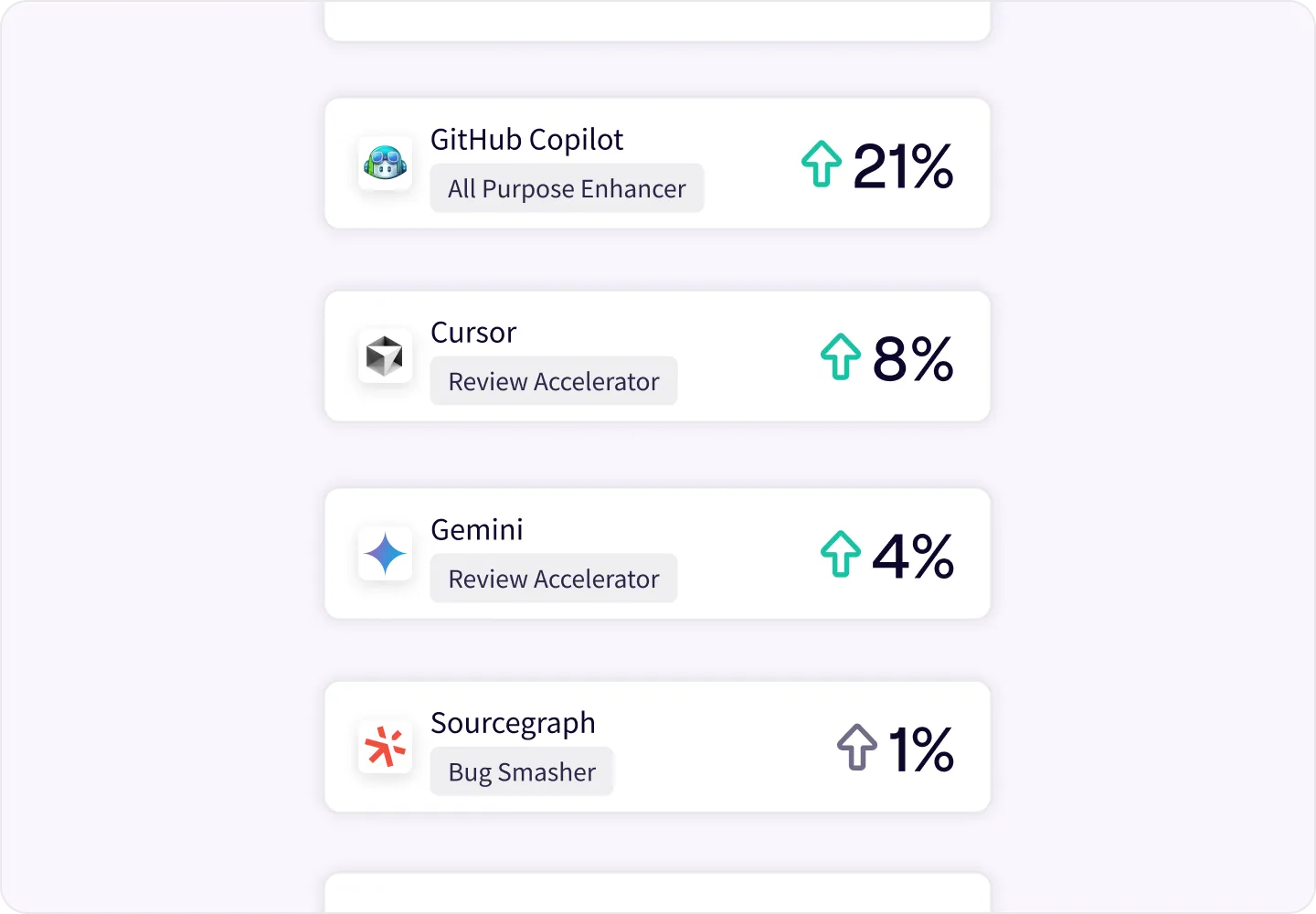

Which AI tool performs best across different roles and engineering tasks?

Analyze which teams within your org are leveraging Copilot, Cursor, Gemini, or Sourcegraph, complete with breakdowns by work type, language, and editor.

Which AI tools are driving value – and which aren’t?

Objectively see how different tools perform relative to each other and understand their overall contribution to the software development lifecycle.

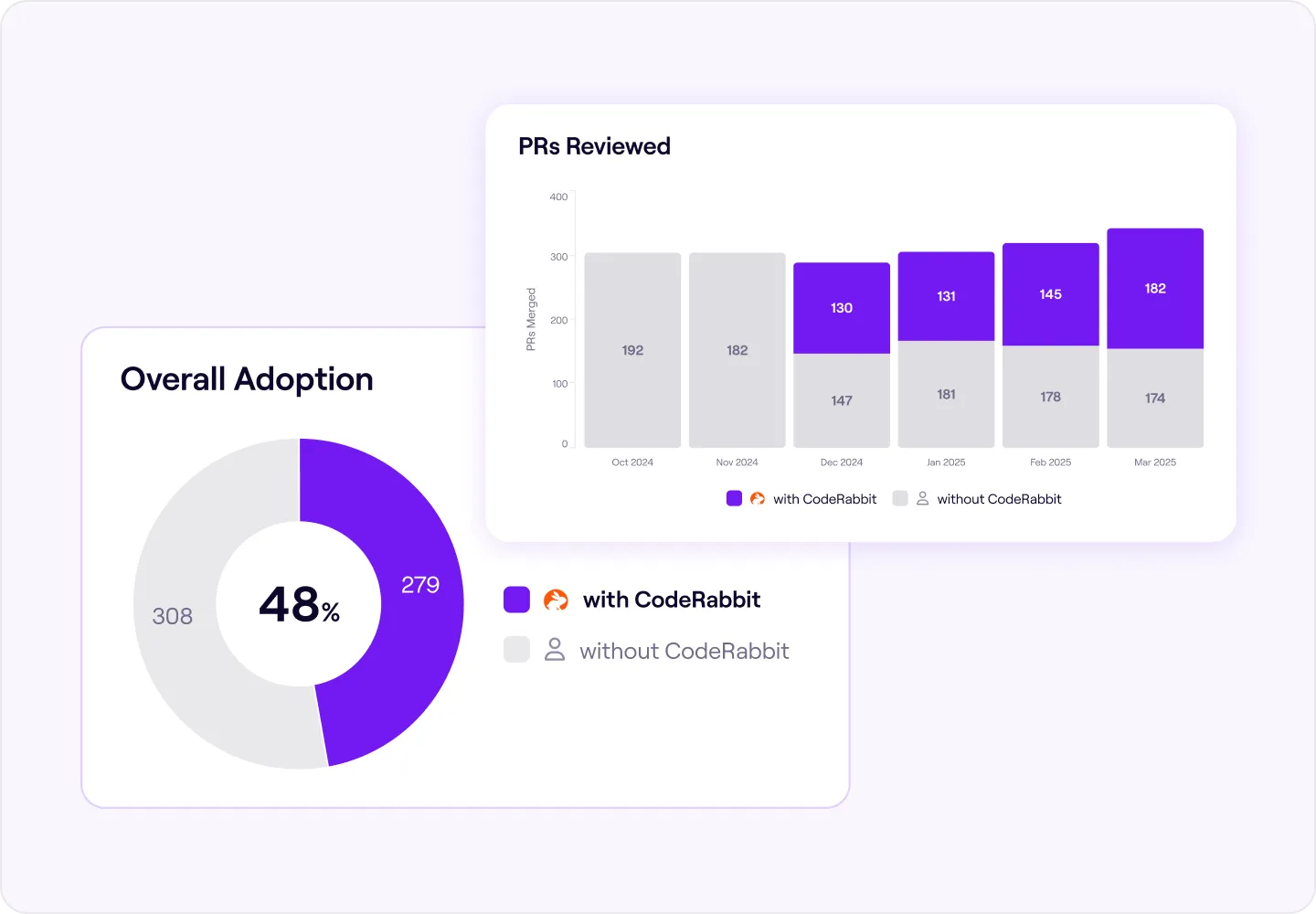

How are agents performing as part of our AI tool ecosystem?

Get AI tooling insights across the SDLC, including for agents, such as adoption insights for code review agents that are consistent, comparable, and immediate.

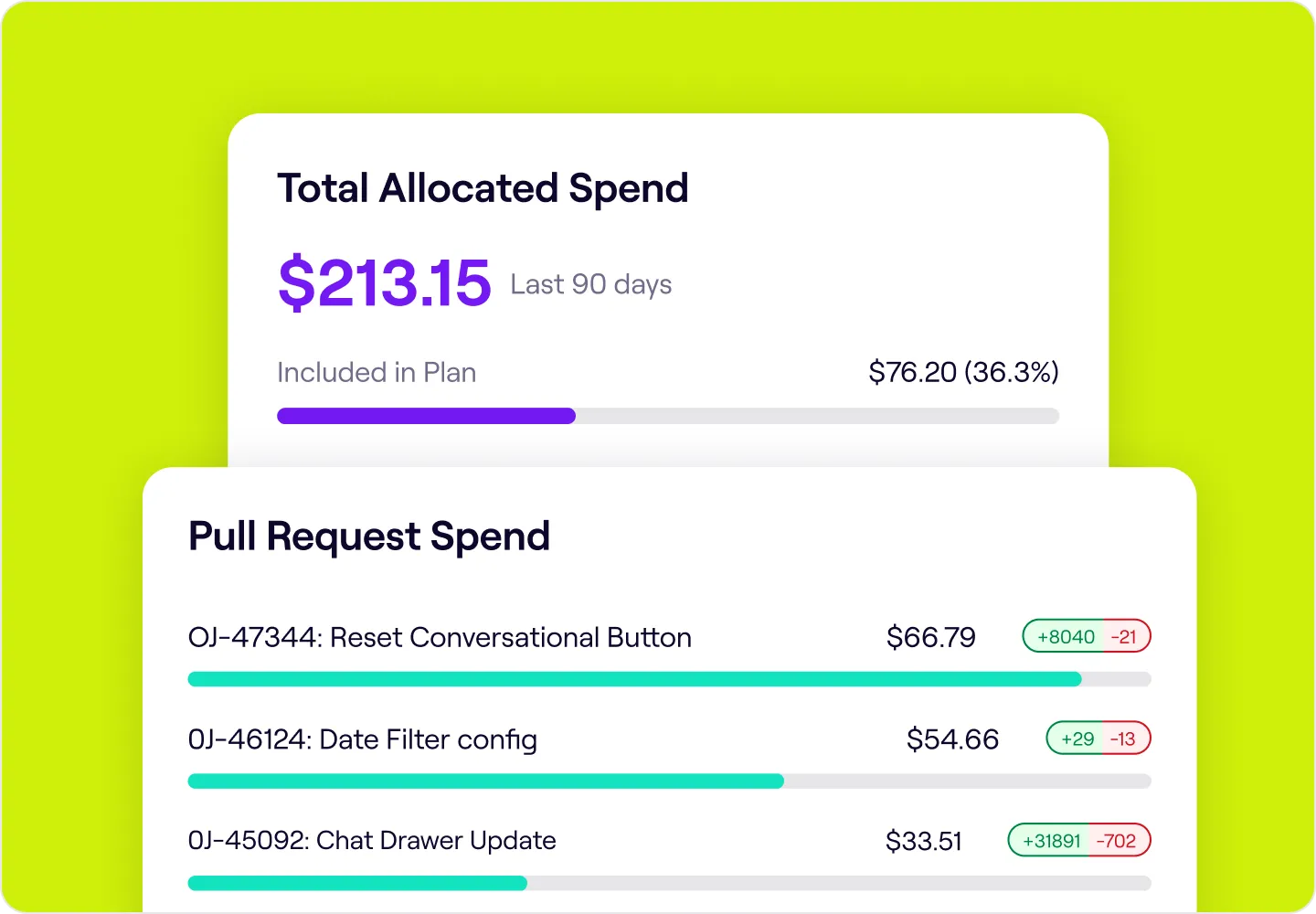

How is my AI spend impacting delivery value?

View AI spend at a tool, individual, or initiative level to understand how it affected specific projects and whether the investment was worth it.