AI is often pitched as a silver bullet for software development these days, and honestly, the numbers make it tempting to believe.

For example, McKinsey’s research found that engineers can write code 35–45% faster, refactor 20–30% faster, and complete documentation nearly 50% faster with the help of AI.

These numbers have CEOs reaching for their checkbooks and developers either excited or anxious about their future. But in that same research, McKinsey also points out that those results aren’t guaranteed.

Productivity varies depending on the task (routine tasks improve more than creative ones) and on the developer’s own experience with AI coding tools. In some cases, the learning curve can even slow things down.

So, while AI still dominates every software conversation in 2025, there’s a fine line between genuine improvement and wasted investment.

This article shows you what AI does well in software development right now, what it struggles with, and what’s coming next. We’ll cover the specific use cases that deliver immediate value and help you set the right expectations for your team.

Benefit #1 – Increased Productivity Through Automation

The repetitive parts of development have always been a time sink. Every day, your team deals with the same routine tasks:

- Writing boilerplate code for the hundredth time

- Creating unit tests for basic functions

- Documenting APIs and code behavior

- Setting up project management configurations

- Generating CRUD operations

These “necessary evils” could easily consume half a developer’s day, while the actual problem-solving and creative work got squeezed into whatever time remained.

AI tools now handle most of this routine work, so developers spend more time on architecture decisions and more complex design work. And the numbers back this up.

Jellyfish’s 2025 State of Engineering Management report found that 62% of teams see at least 25% productivity gains, and they expect those numbers to grow.

Stack Overflow’s survey shows similar results, with 81% of developers saying that productivity is AI’s top benefit.

Here’s what this looks like in practice across a normal development day.

| Task | Without AI | With AI |
| --- | --- | --- |
| Writing CRUD operations | Write from scratch (30-45 min) | Generate in 2 minutes, review and customize (5-7 min) |
| Creating unit tests | Write manually for each function (1-2 hours) | AI generates test suite, developer adds edge cases (15-20 min) |
| Boilerplate code | Type every line manually (20-30 min) | AI autocompletes as you type (5 min) |
| Finding code examples | Search documentation and Stack Overflow (15-25 min) | Get instant examples for your context (1-2 min) |
| Basic debugging | Check each potential issue manually (30-60 min) | AI identifies common bugs and suggests fixes (10-15 min) |
| API documentation | Write descriptions for each endpoint (45-60 min) | Generate docs from code, then refine (10-15 min) |
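
To make the CRUD and unit-test rows concrete, here is a minimal Python sketch of the kind of boilerplate an assistant tends to draft well, together with the edge-case test a developer would typically add during review. The `TaskStore` class and both test functions are hypothetical names invented for this illustration; they are not output captured from any particular tool.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class TaskStore:
    """Hypothetical in-memory CRUD store (illustrative boilerplate only)."""

    _tasks: dict[int, str] = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def create(self, title: str) -> int:
        # Check added during human review: reject blank titles.
        if not title.strip():
            raise ValueError("title must not be empty")
        task_id = next(self._ids)
        self._tasks[task_id] = title
        return task_id

    def read(self, task_id: int) -> str:
        return self._tasks[task_id]  # raises KeyError for unknown ids

    def update(self, task_id: int, title: str) -> None:
        if task_id not in self._tasks:
            raise KeyError(task_id)
        self._tasks[task_id] = title

    def delete(self, task_id: int) -> None:
        del self._tasks[task_id]  # raises KeyError for unknown ids


def test_happy_path() -> None:
    # The kind of test an assistant usually produces on its own.
    store = TaskStore()
    task_id = store.create("write docs")
    store.update(task_id, "write API docs")
    assert store.read(task_id) == "write API docs"


def test_edge_cases() -> None:
    # The kind of test a reviewer adds: blank input and missing ids.
    store = TaskStore()
    for bad_call in (lambda: store.create("   "), lambda: store.delete(99)):
        try:
            bad_call()
        except (ValueError, KeyError):
            continue
        raise AssertionError("expected an error")
```

The happy-path test is cheap to generate; the edge-case test is where the review time in the right-hand column goes.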

Here’s how this developer on Reddit describes the productivity hike:

I’ve been able to significantly streamline my speed in delivering tasks. It helps with autocomplete or straight-up generates code for me, and it works great. I don’t really need to figure things out myself as much. Just ask the AI and boom – you have the (usually correct) answer.

Notice that “usually correct” qualifier? That’s important. Not every developer sees these benefits, and there’s a learning curve to using AI properly.

Here’s another Reddit user who shares a different experience:

Yeah, I don’t think AI is making me any faster or more efficient. The amount of hallucinations and outdated info is way too high.

This split makes sense when you understand what AI does well and what it doesn’t.

Use it for repetitive tasks and first drafts, and then apply human judgment. Skip the review step, and you’ll pay for it later.

Benefit #2 – Superior Code Quality and Reliability

Code quality used to depend entirely on how thorough your reviewers were that day. Now, AI acts as your first line of defense and scans every commit for bugs, security risks, and performance problems.

And contrary to what you might expect, faster work doesn’t mean sloppier code.

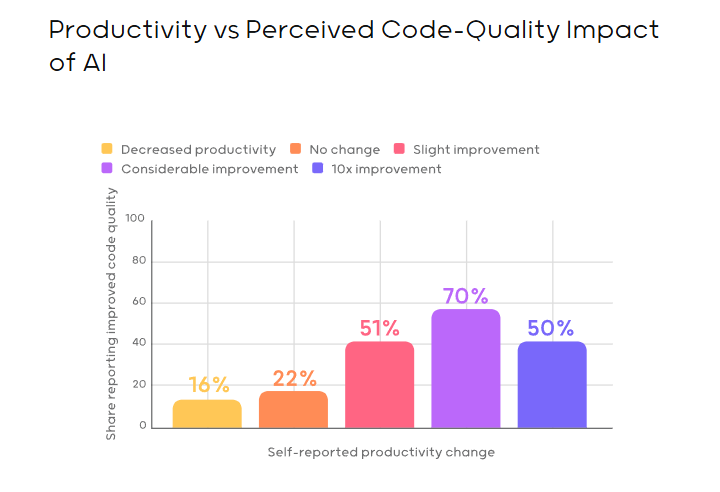

Qodo’s research found that 70% of developers who became more productive also improved their code quality, compared to just 16–22% of those whose productivity stayed the same or declined.

(Source: Qodo)

Still, AI coding tools have their limits, particularly with big-picture code organization. As one developer on Reddit explains:

LLMs make it easy to write code, but aren’t as good at refactoring and maintaining a cohesive architecture.

Aside from general maintainability constraints, this will hurt the use of AI tools long-term, because more repetitive code snippets with unclear organization will also trash the LLM’s context window.

This creates a snowball effect. Messy code slows down modifications, delays new features, and confuses AI tools that need a clear structure to work well.

To avoid these problems, you can follow this simple review checklist for AI-generated code:

- Does the code solve your actual problem with the right approach?

- How well does it handle edge cases, errors, and unexpected inputs?

- Does it follow your team’s established frameworks and conventions?

- Will it perform well with production-scale datasets and traffic?

- Can other developers easily understand and modify this code?

- Have you checked for common security vulnerabilities?

- Does it integrate smoothly with your existing architecture?

- Are there proper tests covering the main functionality?

- Will this code suggestion cause problems six months from now?

When you combine AI’s speed with human developer judgment on these points, you get both high-quality code and productivity. And most importantly, you don’t lose sleep because of technical debt later on in the software development process.
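
As a small illustration of the edge-case question on that checklist, the Python sketch below contrasts a plausible first draft with a reviewed version. Both functions are hypothetical examples written for this article, not code produced by a specific assistant.

```python
from typing import Optional


def average_latency_draft(samples_ms):
    # Plausible first draft: correct on the happy path only.
    # An empty list raises ZeroDivisionError.
    return sum(samples_ms) / len(samples_ms)


def average_latency_reviewed(samples_ms: list[float]) -> Optional[float]:
    """Reviewed version: handles empty input and rejects invalid values."""
    if not samples_ms:
        return None  # "no data yet" is a valid state, not a crash
    if any(sample < 0 for sample in samples_ms):
        raise ValueError("latency samples must be non-negative")
    return sum(samples_ms) / len(samples_ms)


print(average_latency_reviewed([10.0, 20.0]))  # 15.0
print(average_latency_reviewed([]))            # None
```

The draft and the reviewed version agree on normal input, which is exactly why the gap is easy to miss without a deliberate review step.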

PRO TIP 💡: Use Jellyfish to compare defect rates and review times between development teams using AI tools and those working without them. The data shows whether AI helps ship higher-quality code or just moves problems downstream, so you can adjust your quality checks accordingly.

Benefit #3 – Reduced Development Costs

McKinsey reports that 52% of organizations saw reduced software engineering costs in the second half of 2024 from using generative AI. For many companies, this equals millions in annual savings.

These savings typically come from multiple sources:

- Teams ship features faster and spend less developer time per feature

- Better code quality reduces expensive production bugs

- Automated reviews free up senior developer time and boost scalability

- Companies hire fewer contractors for routine DevOps work

- Better code quality today means lower maintenance costs tomorrow

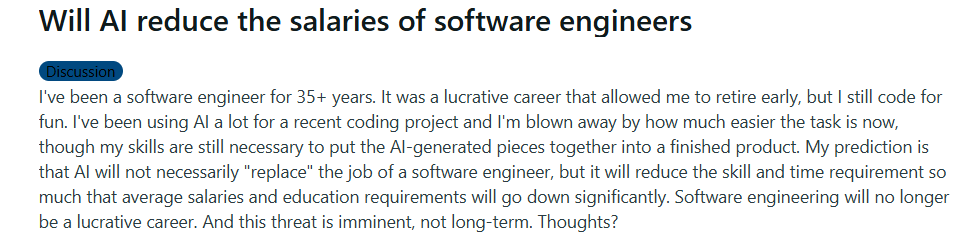

These cost savings make developers nervous about their job security. Many worry that companies will use AI to replace engineering roles entirely.

This Reddit post shows what many real-world developers are thinking:

(Source: Reddit)

But that’s not how AI changes development. AI simply changes what developers do, not whether companies need them. A developer on Reddit explained it perfectly:

Software developers are “formal specifiers”. We will always need formal specifiers… in fact, every year we need them in a broader and broader sense. We only write “code” because it has been the best way to “formally specify” a problem and solution.

We don’t write assembly anymore, and libraries cover a lot of low-hanging fruit that used to be thousands of man-hours. LLMs give us broader capabilities to do formal specification on an even wider scale.

Software developers aren’t going anywhere… in fact, they will become ever more useful… a trend that has been continuing for decades.

We’ve seen this pattern before. Compilers replaced assembly code, yet we needed more programmers. Cloud services eliminated server management, and companies hired more developers than ever.

Every automation wave created more developer jobs, not fewer.

Companies that understand this use AI to ship twice as many features with the same headcount. They tackle bigger projects that were previously too expensive, and they enter markets that weren’t viable before.

For example, a startup that might have needed 10 developers and two years to build an MVP can now do it with 5 developers in six months. They use the savings to build more features, expand to mobile platforms, or add enterprise capabilities they couldn’t afford before.

Either way, the headcount doesn’t grow, but the team delivers what used to require far more people and time.

Benefit #4 – Faster Time-to-Market

Building an MVP used to take 12-18 months with a full team. Now, startups launch in 3-4 months with half the developers. The entire pace of software development has shifted into a higher gear.

McKinsey found that product teams using GenAI tools accelerate time-to-market by about 5% over a six-month development cycle. That might sound modest, but it means shipping a week earlier on major releases.
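
The "week earlier" figure follows from simple arithmetic. The short Python sketch below works it through, assuming a six-month cycle of roughly 26 weeks (182 calendar days); the function name `days_saved` is just an illustrative helper.

```python
def days_saved(cycle_days: float, acceleration: float) -> float:
    """Days gained when a cycle of `cycle_days` finishes `acceleration` (0 to 1) sooner."""
    return cycle_days * acceleration


six_month_cycle = 26 * 7  # assumption: about 182 calendar days
print(round(days_saved(six_month_cycle, 0.05), 1))  # 9.1 days, a little over a week
```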

One developer on Reddit describes the change from the startup perspective:

I have started and worked at several startups, and the things we can do with 5 people today are significantly greater than the things we could do 10 years ago. Not all of this is AI-driven tools, but a lot of it is.

The size of a codebase that is manageable with fewer people is quite significant. So yes, I notice. I am not sure what the impact is in much larger orgs, though, especially ones with people who have been around a long time and really know the code base well? The time to market for MVPs is now much faster than it used to be for better and for worse.

To put this in perspective, here’s how long common projects take with and without AI:

| Project type | Traditional timeline | With AI tools | Time saved |
| --- | --- | --- | --- |
| Basic CRUD Web App | 4-6 weeks | 1-2 weeks | 70% |
| Mobile App MVP | 3-4 months | 6-8 weeks | 50% |
| API Integration | 2-3 weeks | 3-5 days | 75% |
| E-commerce Platform | 6-9 months | 3-4 months | 45% |
| Enterprise Feature | 2-3 quarters | 1-2 months | 60% |
| Database Migration | 4-6 weeks | 1-2 weeks | 65% |

The speed advantage compounds over time. Faster shipping means more iterations and quicker learning about what users want.

Teams that ship weekly get roughly four times as many rounds of market feedback as teams that ship monthly, and they often find product-market fit while competitors are still building.

That “for better and for worse” Reddit comment is worth noting. Speed only helps if you do it right. Teams that rush everything to production without thinking create messes, not products.

To get the most from AI’s speed benefits:

- Start with AI tools from day one on new projects

- Build quick prototypes with AI to test ideas early

- Automate testing so it doesn’t become a bottleneck

- Keep your code review standards high

- Let AI handle repetitive work while developers focus on complex problems

- Create reusable templates and patterns for AI to follow

Remember, every competitor has access to the same AI tools. The advantage goes to teams that integrate them properly.

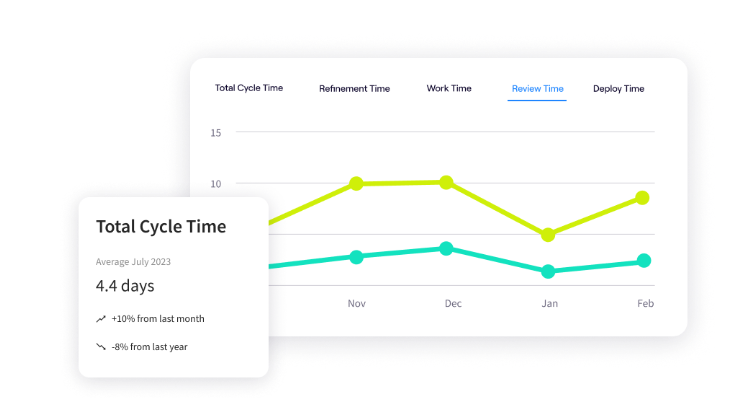

PRO TIP 💡: Jellyfish tracks cycle times before and after AI adoption, so you can see exactly how much faster your teams ship. Compare delivery speeds across different project types to understand where AI saves the most time — some teams see 40% improvements on new features but minimal gains on bug fixes.

Benefit #5 – Enhanced Developer Satisfaction and Retention

Developer retention is one of tech’s most expensive problems. Turnover means endless recruiting, months of training, and lost institutional knowledge.

Meanwhile, the tech industry sees average turnover rates of 13-20% annually, with some companies losing a quarter of their engineering team each year.

And better salary offers aren’t what usually pull developers away. Many developers leave when the work becomes too repetitive and boring. Now, AI changes that dynamic.

McKinsey found that developers using AI-powered tools are more than twice as likely to report being happy at work.

GitHub’s research shows similar results: 60–75% of developers felt more fulfilled with their job and less frustrated when coding with AI assistance.

Developers report higher satisfaction for simple reasons:

- They spend less time on repetitive tasks they’ve done hundreds of times

- More of their day involves actual problem-solving

- They ship features faster and see their impact sooner

- The cognitive load from mundane tasks disappears

- The work feels more creative and less mechanical

- They feel they’re doing meaningful work instead of manual, time-consuming labor

Think about it from a developer’s perspective. Monday morning used to mean writing another CRUD interface or debugging the same type of null pointer exception. Now, it means working on that creative architecture problem while AI handles the mundane.

Of course, AI won’t fix bad management or toxic culture.

If developers deal with unclear requirements and stakeholder micromanagement, AI just helps them automate work before they quit anyway. Implement AI to reduce frustration, not to push burned-out teams harder.

PRO TIP 💡: Jellyfish DevEx runs automated surveys to track how AI coding assistants affect developer happiness and connects those responses to performance data. You’ll see if teams using AI report less frustration with repetitive work and whether that improved mood translates to faster shipping or better retention.

Benefit #6 – Making Informed Decisions with Real-Time Data Analysis

Modern applications produce massive amounts of data — server logs, error reports, performance metrics, user analytics, and more.

But most teams barely scratch the surface of this information because there’s simply too much to process manually. AI fills this gap because it processes thousands of data points instantly and outlines what needs your attention.

AI tools scan error logs to spot problems early, watch performance data to predict slowdowns, and track how users interact with the product. Developers get answers in seconds instead of spending hours clicking through dashboards.

Here’s where AI analysis and its deep learning features save the most time and headaches:

- Performance problems: AI monitors your product 24/7 to find slow database queries, memory leaks, and inefficient code paths. It tells you exactly which functions take too long and which API calls bog down your system.

- Security threats: It notices unusual patterns in your logs like failed login attempts, strange API calls, or data access from new locations. You get alerts about potential breaches before attackers cause damage.

- User behavior: AI tracks how people navigate your app, which buttons they click, where they get confused, and which features they ignore completely. You learn what works and what doesn’t without manual analysis.

- Infrastructure needs: It predicts when you’ll hit capacity limits by examining growth trends and usage patterns. It tells you weeks in advance when to add servers or upgrade databases.

- Bug detection: AI connects errors across different parts of your system to find root causes. Instead of seeing 50 error messages, you see the one underlying problem that’s causing all of them.
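
The bug-detection idea of collapsing many error messages into one underlying problem can be sketched in a few lines of Python. This is a deliberately simple, rule-based stand-in (regex normalization plus counting), not how any specific AI monitoring product works; the function names and sample log lines are made up for illustration.

```python
import re
from collections import Counter


def signature(message: str) -> str:
    """Collapse variable parts (hex ids, numbers) so similar errors share one key."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)
    return re.sub(r"\d+", "<n>", message)


def top_root_causes(messages: list[str], limit: int = 3) -> list[tuple[str, int]]:
    """Return the most frequent error signatures with their counts."""
    return Counter(signature(m) for m in messages).most_common(limit)


# Hypothetical log lines for illustration.
logs = [
    "timeout connecting to db-7 after 3000 ms",
    "timeout connecting to db-2 after 3000 ms",
    "timeout connecting to db-7 after 4500 ms",
    "user 1842 not found",
]
print(top_root_causes(logs))
# [('timeout connecting to db-<n> after <n> ms', 3), ('user <n> not found', 1)]
```

Real tools go further by correlating signatures across services and time, but the principle is the same: group first, then look at the biggest group.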

Not everything should be automated, though. This developer on Reddit makes a good point:

The developers I see using AI inefficiently are trying to use AI to reduce the data-driven decision-making workload.

This matters because AI should inform your decisions, not make them for you. Yes, AI analyzes data brilliantly, but humans still need to decide what to do with that information.

This table shows where to draw the line:

| Where AI should inform decisions | Where humans should decide |
| --- | --- |
| Finding performance blockers | Choosing system architecture |
| Spotting security anomalies | Setting security policies |
| Tracking which features get used | Deciding which features to build |
| Predicting when you’ll need more servers | Approving infrastructure budgets |
| Discovering error patterns | Choosing what to fix first |
| Measuring code complexity | Deciding how much technical debt is acceptable |

For example, let’s say that AI notices that every Tuesday at 3 PM, your API slows down. It traces the problem to a specific background job and shows you the exact database queries causing trouble. It even recommends which indexes would help. You get all this information in minutes instead of days.

You still decide whether it’s urgent enough to fix immediately. AI provides the data; you set the priorities.
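
The "every Tuesday at 3 PM" pattern can be approximated without any machine learning. The Python sketch below, assuming you already have (timestamp, latency) samples, buckets them by weekday and hour and flags buckets whose median is well above the overall median. The function name, the `factor` threshold, and the sample data are all assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def slow_windows(
    samples: list[tuple[datetime, float]], factor: float = 2.0
) -> list[tuple[str, int]]:
    """Return (weekday, hour) buckets whose median latency is more than
    `factor` times the overall median latency."""
    if not samples:
        return []
    buckets: dict[tuple[str, int], list[float]] = defaultdict(list)
    for timestamp, latency_ms in samples:
        buckets[(DAYS[timestamp.weekday()], timestamp.hour)].append(latency_ms)
    overall = median(latency_ms for _, latency_ms in samples)
    return sorted(
        key for key, values in buckets.items() if median(values) > factor * overall
    )


# Hypothetical samples: two slow Tuesdays at 15:00 among normal traffic.
samples = [
    (datetime(2025, 1, 6, 15), 120),
    (datetime(2025, 1, 7, 10), 110),
    (datetime(2025, 1, 7, 15), 900),
    (datetime(2025, 1, 8, 15), 130),
    (datetime(2025, 1, 14, 9), 100),
    (datetime(2025, 1, 14, 15), 950),
]
print(slow_windows(samples))  # [('Tuesday', 15)]
```

The output tells you where to look; deciding whether the slowdown is worth fixing this sprint remains a human call.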

See the Full Impact of AI with Jellyfish

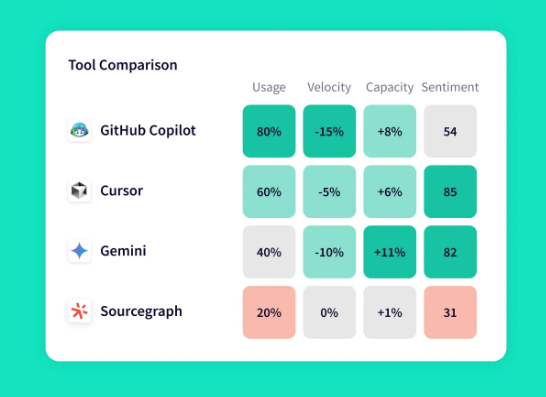

So you’ve invested in AI systems for your developers. GitHub Copilot, maybe some Cursor licenses, and probably ChatGPT for the team.

Sure, developers say they feel more productive, but that’s not enough when you’re spending tens of thousands annually. You need some way to measure if these tools are worth it.

That’s exactly where Jellyfish comes in.

Jellyfish is a software engineering intelligence platform that brings together data from your entire development stack — Git, Jira, CI/CD, and AI-driven coding tools.

With our AI impact dashboard, you can track how engineering work happens across your organization and measure exactly what AI tools do for your teams, from first commit to production.

Here’s exactly what you can expect:

- Measure adoption patterns and usage across your entire engineering organization: See which teams embrace AI tools and which avoid them, broken down by seniority, project type, and programming language.

- Quantify the real productivity impact on delivery speed and throughput: Track how AI changes cycle times, code volume, and feature delivery rates across different workflows. You’ll see specific metrics like PR velocity improvements, commit frequency changes, and how AI affects both coding time and review cycles.

- Compare performance across multiple AI tools to optimize your tech stack: See which tools deliver results and which waste money by tracking adoption, costs, and productivity metrics side by side. You’ll learn that Cursor works great for frontend teams while backend developers get more value from Copilot.

- Connect AI spending directly to business outcomes and calculate true ROI: Track exactly how much you spend on AI tools per team, project, or feature, then see what you get back in productivity gains. You’ll know if that $5,000 monthly Copilot bill delivers $20,000 in faster shipping or just sits there unused.

- Connect easily to your current tech stack: The platform integrates with your development stack through standard APIs. It automatically collects usage metrics and correlates them with engineering performance data.

Schedule an AI impact demo to see detailed metrics on how your teams use AI tools and where they make the biggest difference.

FAQs About the Benefits of AI in Software Development

How does AI improve code and software quality?

AI models act as your first code reviewer and catch bugs, security risks, and performance problems as developers write.

They also suggest better ways to implement features based on best practices and help keep code consistent across your team. This means fewer issues make it to production, which leads to less downtime and a better user experience overall.

What are some common use cases for AI in a software engineer’s daily workflow?

Developers use AI to write boilerplate code, generate unit test cases, and create documentation without starting from scratch. It helps debug tricky errors by explaining what went wrong and how to fix it.

AI also handles repetitive tasks like writing CRUD operations, setting up configurations, and converting code between formats.

What is a good first step for the integration of AI on a team?

Start with one team that already experiments with new tools and give them access to GitHub Copilot or Cursor for a month. Track basic software development lifecycle metrics like how often they use it and whether they ship faster.

Once you see what works for them, expand to similar teams and share the lessons learned.

Is investing in AI development tools worth the cost?

For most teams, yes. Even conservative estimates show AI tools save developers several hours per week on routine tasks, which quickly covers the monthly cost per license.

But the value depends on adoption. Tools only work if developers use them, so track usage carefully and cut licenses for teams that don’t see benefits.
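
A quick way to sanity-check that claim for your own team is a break-even and ROI calculation. The Python sketch below is a back-of-the-envelope model, not a pricing reference: the $20 license and $80 hourly cost are hypothetical inputs, while the $5,000 cost and $20,000 gain reuse the Copilot example from earlier in this article.

```python
def breakeven_hours_per_month(license_cost: float, hourly_cost: float) -> float:
    """Hours a developer must save each month for one license to pay for itself."""
    if hourly_cost <= 0:
        raise ValueError("hourly_cost must be positive")
    return license_cost / hourly_cost


def roi(gain: float, cost: float) -> float:
    """Return on investment as a ratio: 3.0 means a 300% return."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost


# Hypothetical inputs: $20/month license, $80/hour fully loaded developer cost.
print(breakeven_hours_per_month(20, 80))  # 0.25 hours, i.e. 15 minutes a month

# Figures from the article's Copilot example: $5,000 spent, $20,000 gained.
print(roi(20_000, 5_000))  # 3.0
```

Both numbers only hold if the licenses are actually used, which is why tracking adoption comes first.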

Learn More About AI in Software Development

- What is the Impact of AI on Software Development?

- The Risks of Using AI in Software Development

- How to Measure the ROI of AI Code Assistants in Software Development

- How to Use AI in Software Development: 7 Best Practices & Examples for Engineering Teams

- AI in Software Testing and Quality Assurance

- Will AI Replace Software Engineers? No and Here’s Why

- What’s The Future of Software Engineering with AI?

- What is the Responsibility of Developers Using Generative AI? Key Ethical Considerations & Best Practices

About the author

Lauren is Senior Product Marketing Director at Jellyfish where she works closely with the product team to bring software engineering intelligence solutions to market. Prior to Jellyfish, Lauren served as Director of Product Marketing at Pluralsight.