In this article

AI writes better code than most juniors. It debugs faster than seniors. And it never needs sleep, coffee, or salary negotiations.

So why won’t it replace you?

Well, because AI without human direction is worthless. It can’t talk to stakeholders who don’t know what they want. It won’t advocate for tech debt cleanup. It can’t mentor the new hire or explain to the CEO why their ‘quick fix’ idea will cost millions.

Writing code fast means nothing if you’re building the wrong thing. Yes, artificial intelligence multiplies human judgment, but zero times anything is still zero. This Reddit user explained it perfectly:

The fear isn’t irrational, though. Some developers are losing jobs right now. Just take a look at what this user said on Reddit:

Yes, some companies are making this bet. But they’re about to find out why it fails – why AI can’t handle edge cases and why junior developers with AI tools create more problems than they solve.

Below, we’ll walk through why AI can’t manage the hardest parts of development, what happens to code quality without experienced human oversight, and what skills matter most now.

How AI is Already Changing Software Development Today

How AI is Already Changing Software Development Today

Two years ago, AI coding tools were novelties. Today, they shape how millions of devs work. Here’s what they do well and what they can’t handle:

Code Generation and Optimization

The old way: Developers wrote every function from scratch, googled syntax, and spent hours on boilerplate code. Even simple CRUD operations meant typing out repetitive patterns manually.

The AI way: Now you describe what you need, and AI generates entire functions instantly. According to Stack Overflow research, 82% of developers using AI tools rely on them mainly for writing code. AI understands context from your codebase, recommends completions as you type, and can refactor entire files to match your coding standards.

Example: You type “create a React component for user authentication with email validation” and get a complete, working component with error handling and form validation in seconds. What used to take 30 minutes now takes 30 seconds.

Don’t make this mistake ⚠️: Don’t ship AI-generated code that you don’t understand. That authentication component might work perfectly, but store passwords in plain text or have SQL injection vulnerabilities that you’ll only catch if you actually read the code.

Automated Code Testing and Debugging

The old way: Writing tests meant manually thinking through edge cases and typing out dozens of test scenarios. Debugging involved console.log statements everywhere and stepping through code line by line.

The AI way: AI analyzes your code to create thorough test coverage and outlines bugs before they hit production. You can paste a function and get back 20 test cases, including edge cases you never considered. Here’s how this Reddit developer uses it:

Example: You can give AI a complex sorting function and it will generate 20 test cases, including null values, empty arrays, duplicate elements, and performance benchmarks. It spots the edge case where your code fails on negative numbers immediately.

Don’t make this mistake ⚠️: Don’t trust AI tests blindly. They test what the code does, not what it should do. AI won’t know that your e-commerce site needs to handle Swedish postal codes differently or that your client needs specific rounding logic.

Streamlining the DevOps Lifecycle

The old way: You’d spend hours writing Dockerfiles, debugging YAML indentation errors, and copying Jenkins configurations between projects. One wrong environment variable meant your whole deployment failed.

The AI way: Now you describe your app, and AI builds the entire deployment pipeline – containers, orchestration, monitoring, the works. It tells you when you’re wasting money on oversized servers, shows where to cut costs, and warns you before traffic spikes crash your site. Today, roughly 20% of DevOps professionals integrate AI into all phases of the software development lifecycle.

Example: Ask AI to set up CI/CD for your Node app, and it generates a working GitHub Actions workflow with test runners and deployment steps. You’ll still need to add your secrets and tweak the deployment targets, but the foundation that used to take hours is ready in seconds.

Don’t make this mistake ⚠️: Don’t trust AI with production secrets. It might hardcode API keys in the Dockerfile or create pipelines that log sensitive environment variables where they shouldn’t be logged.

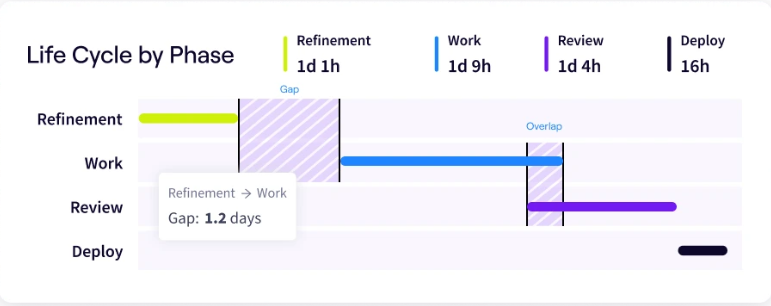

PRO TIP 💡: Jellyfish’s Life Cycle Explorer tracks whether AI speeds up or slows down deployments. Many teams find that AI writes configs fast but breaks production more often. The data shows which DevOps tasks AI handles well and which need humans.

Enabling New Product Capabilities

The old way: Building intelligent features required PhD-level expertise and millions in compute costs. Small teams watched from the sidelines as only Google and Amazon could afford recommendation systems or voice interfaces.

The AI way: Today, developers integrate AI features through APIs and SDKs without building models from scratch. You can add document parsing, programming language translation, or automated summaries in a few days of work. Small teams ship AI capabilities that were enterprise-only just two years ago, though they still need to handle edge cases and API costs.

Example: A solo developer builds a customer support chatbot that understands context and handles 80% of tickets, or adds a “describe what you want” feature that generates custom SQL queries for non-technical users. Features that would have needed entire teams now take days to implement.

Don’t make this mistake ⚠️: Don’t ship AI features without fallback plans. When OpenAI goes down or rate limits kick in, your cool AI-powered search becomes a blank screen unless you’ve built proper error handling and graceful degradation.

Understanding the New Role of the Software Engineer

Understanding the New Role of the Software Engineer

AI affects every developer differently based on their role and seniority. Juniors and seniors face different challenges, and the industry is creating new specializations to handle AI integration.

Here’s how different roles are adapting:

From Writing Code to Designing and Verifying Systems

Right now, coding is still manual labor for most developers. But we’re approaching a point where AI writes the code and developers make the architectural decisions.

The hardest parts of software development were never about code snippets. They’re about making the right trade-offs when every option has downsides. AI doesn’t know your budget constraints or your team’s weaknesses.

A senior engineer described what this looks like in practice:

Yeah, totally agree with this. AI is getting scary good at cranking out code, but actually shaping a fuzzy idea into a working system is still a very human thing.

The “theory building” bit is spot on — so much of the job is about context, tradeoffs, and making sense of ambiguity. One thing I’ve noticed: as AI tools get better at repetitive task automation and writing code, the real challenge is shifting to reviewing and verifying what the AI spits out.

This creates a problem most companies haven’t hit yet. When AI writes most of your code, developers become code reviewers.

But reviewing code you didn’t write is much harder than writing it yourself. You lose context, make assumptions, and don’t know why certain choices were made.

Here’s how this developer on Reddit explained the danger:

Early adopters are learning this the hard way. They gave juniors AI tools, pushed for faster delivery, and now they’re drowning in bugs. The code is syntactically perfect but architecturally wrong. It works until it doesn’t, and when it breaks, nobody knows why.

Some developers believe that this will lead to a clear divide:

AI will replace software engineers who only copy-paste from Stack Overflow. AI will most probably not replace software engineers who find solutions to problems or understand the design of things.

The split makes sense. If your job is googling how to center a div or writing CRUD endpoints, AI does that better. But if you understand why the system needs specific guarantees, how different services interact, or what happens when things fail, you become more valuable as AI improves.

The future developer spends less time typing and more time thinking. You’ll review AI code, catch architectural problems, and make judgment calls about tradeoffs. The syntax becomes AI’s job. The decision-making remains yours.

PRO TIP 💡: Jellyfish shows if AI frees up seniors or just changes their workload. Allocations data reveals whether they design systems or debug AI mistakes all day. You can then adjust your strategies based on what you find.

Soft Skills Become Core Competencies

Technical skills still matter, but they’re becoming table stakes. When AI can help anyone write decent code, the question is, what else do you bring?

Can you explain technical decisions to non-technical people? Can you push back on bad ideas without making enemies? Can you tell when requirements are wrong before building them?

Most developers have trouble with these basics. One programmer put it bluntly on Reddit:

Being pleasant to work with now affects your job market security. Companies used to overlook poor communication if someone was technically brilliant.

But when AI can handle the coding, why keep someone who can’t collaborate? The developer who combines technical skills with people skills becomes the obvious choice. This becomes more important as you advance.

Here’s how this senior engineer explained it:

Think about what AI can’t do here. It can’t sit in a design review and read the room. It can’t tell when the PM is frustrated or when the client isn’t saying what they really mean. The future demands a different skillset entirely:

The developers who thrive will be those who can translate between technical and business teams, who can explain complex problems simply, and who can navigate office politics and client relationships. AI handles the code. You handle the humans.

The Impact of AI on Different Engineering Roles

The Impact of AI on Different Engineering Roles

Different roles feel AI’s impact differently. Junior developers walk a tightrope between AI assistance and actual learning, full-stack seniors become code reviewers and architects, while domain experts become increasingly valuable.

Let’s examine each role:

How the Junior Engineer Role is Changing

Juniors are all-in on AI assistance. They generate entire features with ChatGPT, debug with Claude, and treat AI like a senior developer who never gets annoyed at questions. However, this can also lead to some issues, as this Reddit user notes:

This senior engineer’s complaint shows up everywhere now. Juniors who produce tons of code but can’t explain how it works. They ship features fast but create bugs they can’t fix.

When AI writes your code, you skip all the mistakes that teach you how things work. You never bang your head against a problem for hours, and you don’t build that sixth sense for bugs.

A few juniors realize AI is exposing their knowledge gaps. Here’s what they recommend on Reddit:

The point is, AI can’t save you if you don’t understand data structures. It won’t help when you need to optimize an algorithm or track down a memory leak. The juniors who survive will be those who use AI to go deeper, not to avoid learning.

But of course, AI isn’t purely negative for junior developers. Used correctly, it accelerates learning:

This junior found the balance. AI answers your dumb questions without judgment. It explains complex topics until you get it. But you still write the code yourself.

How the Senior Engineer Role is Changing

Senior developers are code historians. They know the story behind every weird design choice, every commented-out block, and every TODO that’s been there for five years. This context is what makes them irreplaceable.

Here’s what that means in practice:

The majority of the tasks of a software engineer aren’t related to writing from scratch a lot of things, but fitting new requirements in layers of code that have been stratified by generations of other software engineers.

In those situations, what you actually need is patience and understanding of how other people built things and a lot of memory to remember why Jeff put that “useless” if.

AI models can’t do this. It doesn’t know Jeff quit three years ago after a brutal merger. It doesn’t understand that the “useless” if statement prevents a race condition that only happens during Black Friday sales.

Here’s specifically what AI lacks:

- Historical context about why code evolved in certain ways

- Knowledge of undocumented business rules buried in code

- Understanding of which technical debt is intentional

- Awareness of political reasons behind technical decisions

- Memory of past failures that shaped current patterns

Technical knowledge alone doesn’t define senior engineers anymore. Senior engineers now operate across the entire business, manage relationships, and make decisions that affect multiple teams. A Reddit developer explained this change:

This broader scope is exactly what protects senior roles. AI can’t sit in on architecture reviews and sense when the team isn’t bought in. It can’t manage the delicate relationship with that difficult stakeholder who controls the budget.

That’s why the new senior responsibilities now include:

- Protecting the team from impossible deadlines

- Deciding which technical debt to pay down and which to accept

- Building trust with non-technical leadership

- Knowing when to push back and when to compromise

That’s why AI makes senior engineers even more valuable. Someone needs to review all that AI-generated code. Someone needs to make sure that it fits into existing systems. The more code AI writes, the more you need experienced humans to manage the complexity.

The Creation of New, Specialized Roles

Companies that are learning about AI’s potential quickly realize they need people who understand both traditional engineering and machine learning. And these hybrid roles are spreading faster than universities can train people for them.

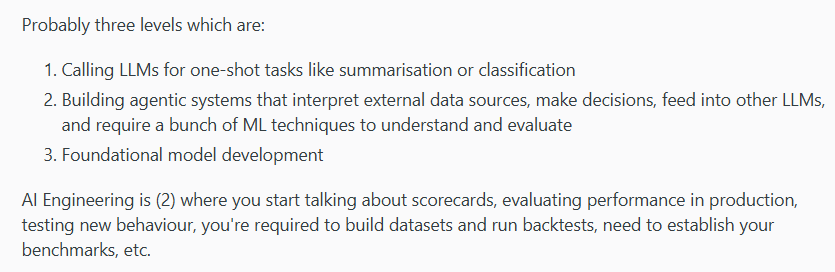

Developers are starting to notice the opportunity:

These aren’t “rebranded” developer jobs. They require different skills, different thinking, and different responsibilities. The work ranges from basic API integration to complex system design, as one developer explained:

At the simpler end, developers integrate AI APIs into existing products. But at the complex end, they’re building evaluation frameworks, managing model performance, and creating systems that can make autonomous decisions.

A few specific roles keep showing up:

- AI/ML engineers work directly with models — they train them, tune them, and make them work for specific problems. Not every company needs them, but those competing on AI do.

- AI platform engineers build the systems that let entire companies use AI safely. They deploy models, watch for failures, and prevent expensive mistakes. As AI spreads across teams, these specialists keep everything running.

- AI ethics and governance specialists keep AI from causing legal or PR disasters. They write policies, check for bias, and handle compliance. Small companies might skip this role, but early mistakes can destroy reputations.

These positions likely won’t be automated soon. Someone needs to decide which models to use, how to evaluate their performance, and when they’re failing. You also need someone to make sure that the AI doesn’t discriminate, hallucinate, or leak sensitive data.

Nobody knows if these positions will exist in five years. They might be stepping stones to something else, or they might become permanent. Your best strategy is to build skills in both traditional development and AI, then adjust as things change.

Why Human Developers Remain Irreplaceable

Why Human Developers Remain Irreplaceable

Despite AI’s impressive features, certain aspects of the software development process still need human judgment, creativity, and accountability.

Here’s what AI can’t replicate:

Critical Thinking and Complex Problem-Solving Skills

What humans do: Developers see patterns across unrelated systems and predict how changes cascade through architectures. They know when a “simple” feature request will break three other services or when a bug is actually a symptom of a deeper design flaw.

Why AI can’t handle this (yet): AI operates without understanding the bigger picture. It can’t know that your architecture has hidden dependencies or that your client has undocumented expectations. Here’s how this developer put it:

LLMs have inherent flaws. Like, it is not self-aware and lacks self-critical thinking and understanding. It does not fully grasp when it understands a concept/context or not.

It does not know if it needs more information in order to give a reasonable answer. It just tried to give it a go.

Example: A developer discovers the ‘random’ crashes happen only when two specific microservices deploy within 5 minutes of each other. AI would analyze each service separately and miss the interaction completely.

Collaboration and Communication

What humans do: Developers speak both business and code. They know when to push back diplomatically, when to compromise, and how to explain why the “simple” change will take six weeks.

Why AI can’t handle this (yet): AI can’t read the room or navigate office politics. It doesn’t know the PM is stressed about deadlines, that the architect’s feedback is really about protecting their legacy system, or that this meeting is actually about budget cuts. AI can’t build trust or manage the relationships that make or break projects.

Example: A developer convinces the sales team to delay a feature promise by showing them how rushing it could lose their biggest client’s data. AI would just generate a technical explanation that nobody would listen to.

Creativity and Innovation

What humans do: Coders see connections nobody else makes. They apply lessons from failed projects to new contexts, steal ideas from completely different industries, and build solutions that make people wonder why nobody thought of it before.

Why AI can’t handle this (yet): AI recombines existing patterns but doesn’t truly innovate. It can’t make the leap from “users are frustrated” to “let’s completely reimagine this workflow.” Here’s how this developer on Reddit explained it:

Technology creates change, but mimicking human emotion and creativity is a stretch for AI. It’s not the huge threat to creativity it’s professed by some to be.

It’s more like the threats of TV replacing radio, calculators preventing math learning, and cars sucking the air out of people. New technologies find their place.

Example: Slack started as an internal tool for a gaming startup, but developers saw potential in their chat system that had nothing to do with games. AI would have probably kept optimizing it for the original purpose.

Ethical Judgment and Accountability

What humans do: Developers make value judgments about what to build and what to refuse. They push back on features that could harm users, consider the societal impact of their code, and take responsibility when things go wrong.

Why AI can’t handle this (yet): AI has no moral compass or understanding of consequences. It will optimize for whatever metric you give it, even if that means creating addictive features that harm teenagers or biased algorithms that discriminate. And when the code causes damage, you can’t hold AI accountable.

Example: A developer refuses to build a feature that tracks employee bathroom breaks even though it’s technically simple. AI would just implement the most efficient tracking system possible, but miss the human dignity issue entirely.

A Strategic Guide for Engineering Leaders: Preparing for the AI-Driven Future

A Strategic Guide for Engineering Leaders: Preparing for the AI-Driven Future

Engineering leaders need a plan for AI integration. The companies that get it right help their developers do better work with AI tools. Here are some things you can focus on:

- Start with education, not implementation: Give your team time to experiment with AI tools before mandating their use. Developers who understand AI’s capabilities and limits make better decisions about when to use it.

- Create clear AI usage guidelines: Decide which code AI can write alone and which needs human oversight. Some systems are too important to trust to AI — your payment processing, your user authentication, your core business logic.

- Invest in code review and testing infrastructure: AI will generate more code faster than ever before. Your review processes and test coverage need to scale accordingly, or technical debt will bury you.

- Hire for judgment, not just coding ability: Look for developers who can evaluate AI output, spot problems, and make architectural decisions. Pure coding skills matter less when AI handles syntax.

- Focus on system design and architecture skills: Train your team to think in systems, not functions. AI writes code, but humans must decide how services interact, where data lives, and which trade-offs to accept.

- Build domain expertise as a competitive moat: AI can’t replicate deep knowledge of your specific industry, customers, and systems. Developers who understand your business context become irreplaceable.

Build AI-integrated Engineering Teams Effectively with Jellyfish

Build AI-integrated Engineering Teams Effectively with Jellyfish

The fear of AI replacing developers was overblown. The main challenge companies have now is figuring out which AI tools help your specific team and which create more problems than they solve.

You might spend thousands on Copilot or Cursor without knowing if they improve delivery speed or just generate more technical debt.

Jellyfish can answer these questions.

Jellyfish is an engineering management platform that pulls data from your entire development toolchain (Git, Jira, CI/CD, and AI assistants) to track delivery speed, resource allocation, AI tool impact, and team performance metrics.

Here’s exactly what you can expect:

- Track real-world AI impact across your entire SDLC: See exactly how Copilot, Cursor, Gemini, or Sourcegraph affects delivery speed, code quality, and team productivity using concrete numbers.

- Measure AI ROI at the project level: Track AI spend per developer, per team, and per project to understand if that Copilot investment was worth it for specific initiatives.

- Compare AI tool performance by team and task: Discover which tools work best for frontend versus backend, which teams adopt AI successfully, and where AI truly speeds up delivery.

- Identify and replicate successful AI adoption patterns: Spot your top-performing teams using AI effectively and scale their practices across the organization.

- Prevent AI-driven burnout and over-commitment: Use Capacity Planner to ensure teams aren’t overwhelmed by the increased code output that AI brings.

- Benchmark your AI-enhanced team performance: Compare your team’s metrics against industry peers to understand if AI is giving you a competitive edge or if you’re falling behind.

With Jellyfish, you’ll see exactly where AI accelerates development and where it needs human oversight to prevent disasters.

Schedule an AI Impact demo to see how Jellyfish can help you build high-performing, AI-integrated engineering teams.

FAQs

FAQs

What skills are most in demand for developers in the age of AI?

System design tops the list — knowing how to structure applications and make architectural decisions that AI can’t make.

Communication skills also matter more than ever since you’ll spend less time coding and more time explaining technical trade-offs to non-technical people.

Should we build our own AI tools in-house or buy them from a vendor?

Most companies should buy, not build. Unless AI is your core product differentiator, you’ll spend millions trying to match what OpenAI or Anthropic already offer for a monthly subscription.

How should we prepare our teams and talent pipeline for this shift?

Here’s some advice on how to prepare your team for AI tools:

- Give developers hands-on AI user experience: Set aside time for experimentation with tools like Copilot or Cursor on real projects, not toy problems.

- Revise your hiring criteria: Look for system thinking, communication skills, and problem-solving ability rather than syntax memorization, languages like Python and JavaScript, or algorithm puzzles.

- Set up AI usage guidelines: Create clear rules about which code requires extra review and which systems stay human-only.

- Strengthen your review processes: AI generates code faster than humans can review it, so you need better testing and validation systems.

- Build domain expertise programs: Develop deep knowledge of your industry and business that AI can’t replicate.

The best approach combines AI’s speed with developers’ judgment, domain knowledge, and problem-solving skills.

How is the field of computer science adapting to the rise of generative AI?

Universities are scrambling to update their curricula, so some schools now require AI literacy courses while others teach students how to verify AI-generated code rather than just write it from scratch.

Many CS programs increasingly emphasize system design, software architecture, and the fundamentals that help developers understand what AI produces and improve their adaptability.

Learn More About AI in Software Development

Learn More About AI in Software Development

- What is the Impact of AI on Software Development?

- Benefits of AI in Software Development

- The Risks of Using AI in Software Development

- How to Measure the ROI of AI Code Assistants in Software Development

- How to Use AI in Software Development: 7 Best Practices & Examples for Engineering Teams

- AI in Software Testing and Quality Assurance

- What’s The Future of Software Engineering with AI?

- What is the Responsibility of Developers Using Generative AI? Key Ethical Considerations & Best Practices

About the author

Lauren is Senior Product Marketing Director at Jellyfish where she works closely with the product team to bring software engineering intelligence solutions to market. Prior to Jellyfish, Lauren served as Director of Product Marketing at Pluralsight.