Ask developers about AI today, and they’ll probably mention Copilot or Cursor.

And sure, code completion is a natural starting point. But treating AI as just a better autocomplete ignores the broader changes coming to software development.

We’re moving toward AI involvement in architecture decisions, test strategies, deployment patterns, and performance optimization.

The interesting part isn’t that AI will do these things perfectly. It won’t. But developers will spend their time differently when AI takes care of repetitive work.

This evolution makes more sense when you consider how programming has always advanced. A Reddit user recently framed it this way:

That’s a good way to think about it. AI isn’t magic, and it doesn’t need to be. It won’t write perfect code or eliminate bugs, but it will handle enough routine work to change how developers spend their day.

This guide walks through what’s actually changing in the software development process, what’s likely to come next, and how to prepare your team.

The Shift to an AI-Native Development Lifecycle

Right now, most developers treat AI as a junior assistant. You ask for help, it suggests code, you move on. Pretty straightforward.

Stack Overflow research shows that 82% of developers using AI tools use them to write code. But if you look at what OpenAI, GitHub, and others are building, AI-generated code is just the entry point.

The end goal is AI that participates across the entire development lifecycle. Here’s what that looks like:

- AI in planning and design: AI will take the first pass at requirements. It’ll scan user feedback, support tickets, and analytics to propose features and write initial specs. Product managers then step in to validate priorities and add business context.

- AI in development: Today’s AI helps you write functions. Tomorrow’s will likely build entire features. You’ll describe what you need at a high level, and AI will generate the code, write the tests, and run them.

- AI in testing: AI will create tests for every function, every edge case, and every integration point. The days of manually updating test files after refactoring will (finally) come to an end. One developer already uses this approach today:

- AI in deployment and monitoring: Instead of following rigid deployment rules, AI will analyze each release’s risk, choose the right rollout strategy, and monitor production performance. If something breaks, AI will roll back the release before anyone notices.
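To make the deployment idea more concrete, here is a minimal sketch of the kind of decision logic such a system would automate. It assumes a hypothetical release summary (diff size, whether sensitive code changed, recent failure rate) and arbitrary weights and thresholds; it doesn’t reflect any specific tool’s behavior.

```python
from dataclasses import dataclass


@dataclass
class Release:
    """Hypothetical release summary; the fields are illustrative assumptions."""
    lines_changed: int
    touches_sensitive_paths: bool  # e.g., auth or payments code
    recent_failure_rate: float     # share of recent deploys that failed (0.0 to 1.0)


def risk_score(release: Release) -> float:
    """Combine simple signals into a 0.0-1.0 score using arbitrary example weights."""
    size_risk = min(release.lines_changed / 1000, 1.0)
    path_risk = 1.0 if release.touches_sensitive_paths else 0.0
    return 0.4 * size_risk + 0.4 * path_risk + 0.2 * release.recent_failure_rate


def choose_strategy(score: float) -> str:
    """Map a risk score to a rollout strategy (thresholds are assumptions)."""
    if score < 0.3:
        return "full rollout"
    if score < 0.6:
        return "canary"
    return "canary with manual approval"


def should_roll_back(baseline_error_rate: float, canary_error_rate: float,
                     tolerance: float = 0.01) -> bool:
    """Roll back when the canary's error rate exceeds the baseline by more than tolerance."""
    return canary_error_rate - baseline_error_rate > tolerance
```

A real system would tune the weights from the team’s own deployment history. The value of writing the rules down is that every rollout and rollback decision becomes explicit and reviewable.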

But while this all sounds very promising, we’re not quite there yet. The technology works, but it’s not reliable enough for most teams to trust completely. As one developer on Reddit explained:

They can already code some cool stuff with just regular English input, but there are certainly major limitations.

I think we’ll eventually see completely autonomous development, but as to whether we’ll see LLMs get there depends on several factors, particularly whether we can reduce hallucination rates to near zero.

Getting to near-zero errors will take time. Until then, AI accelerates development but doesn’t automate it completely.

The Next Generation of the Software Engineer

As AI handles more routine work throughout development, engineers don’t disappear. Their role evolves.

Here are some of the biggest day-to-day changes we’ll probably see:

The Engineer as a System Architect

The core of engineering work moves up a level. You’ll spend more time on architectural decisions and less on implementation details.

When AI can generate a working API from a description, the valuable skill becomes knowing what API design serves your needs best. Engineers become the planners while AI becomes the builder. One developer put it perfectly on Reddit:

This architectural focus makes sense when you consider what AI still can’t do well. AI needs clear instructions and defined goals, but real projects are full of uncertainty and changing requirements. Another developer explained:

AI is getting scary good at cranking out code, but actually shaping a fuzzy idea into a working system is still a very human thing.

The “theory building” bit is spot on — so much of the job is about context, tradeoffs, and making sense of ambiguity. One thing I’ve noticed: as AI tools get better at repetitive task automation and writing code, the real-world challenge is shifting to reviewing and verifying what the AI spits out.

Human engineers define the blueprint, AI builds the components, and then the engineers verify everything works as intended.
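A minimal sketch of that division of labor, assuming the engineer expresses the blueprint as explicit test cases: `slugify` stands in for a hypothetical AI-generated function, and `verify_slugify` is the human-written contract it has to pass before it’s accepted. Both names and all cases are illustrative.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Stand-in for AI-generated code: turn a title into a URL slug."""
    # Decompose accented characters, then drop anything that isn't ASCII.
    normalized = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", normalized.lower())
    return "-".join(words)


def verify_slugify(fn) -> list[str]:
    """Human-written contract: return the failed checks (an empty list means accepted)."""
    cases = {
        "Hello World": "hello-world",
        "  leading and trailing  ": "leading-and-trailing",
        "Café au lait": "cafe-au-lait",
        "C++ & Rust!": "c-rust",
        "": "",
    }
    failures = []
    for given, expected in cases.items():
        actual = fn(given)
        if actual != expected:
            failures.append(f"{given!r}: expected {expected!r}, got {actual!r}")
    return failures
```

A naive implementation such as `lambda s: s.lower().replace(" ", "-")` passes the first case but fails three others. That gap is exactly what the engineer’s edge cases are there to catch.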

The valuable skills are changing. You’ll need to understand what the business actually needs, pick the right design patterns, and know when AI’s suggestions won’t work.

Technical knowledge still matters, but you’ll use it to make decisions rather than write perfect code.

A Greater Emphasis on Human-Centric Skills

As AI handles more technical implementation, the uniquely human parts of engineering become more valuable. Communication, creativity, and problem-solving matter more when you’re not buried in syntax details.

This change will be hardest on developers who rely on copying existing solutions without understanding them. One Reddit comment nailed it:

AI will replace software engineers who only copy-paste from Stack Overflow. AI will most probably not replace software engineers who find solutions to problems or understand the design of things.

This makes sense. AI already copies from Stack Overflow better than humans do. What it can’t do is understand why a solution works, when to apply it, or how to adapt it to unique situations.

Another developer talked about keeping the right perspective:

AI like ChatGPT is a tool; it is not a replacement for thinking. If DevOps uses it wisely and places less reliance, then it will boost the problem-solving skill.

The key word there is “wisely.” When AI handles routine coding, engineers can focus on harder problems. You’ll spend more time understanding user needs, collaborating with teams, and finding creative solutions.

The job becomes more about thinking through problems and less about remembering syntax.

Emerging AI Technologies That Will Shape the Future of Software Development

While today’s AI systems are already changing how we code, the next wave of technologies will restructure entire development workflows.

Here are the key innovations that engineering teams should watch closely.

Autonomous AI Agents

What it is: AI agents are systems that can complete multi-step tasks independently. You give them a goal like “add authentication to this app,” and they figure out the steps, write the code, test it, and submit a pull request.

Why teams should care: In principle, agents could take a Jira ticket and deliver working code without hand-holding. Developers would then spend their time on architecture and requirements rather than implementation details.

However, while the technology shows promise, it’s still not ready for widespread use. Here’s how this developer broke it down:

The key blockers aren’t just technical (like context windows or loops) – it’s about reliability and control. Even with the latest models, agents can still make unpredictable decisions or fail to properly decompose complex tasks.

What we’re seeing work better in computer science is “supervised autonomy” – where AI handles the heavy lifting of research, analysis, and basic task execution, but with human oversight at key decision points.

That said, I’m optimistic about specialized autonomous agents for narrow, well-defined tasks emerging in the next 6-12 months. But general-purpose autonomous agents? That’s likely still a few years out.

How to prepare:

- Start experimenting with agent frameworks (e.g., AutoGPT or LangChain)

- Outline repetitive multi-step tasks your team does regularly

- Build clear documentation and test suites (agents work better with good context)

- Practice writing clear, unambiguous requirements

- Set up sandboxed environments where agents can work safely

Example: Say you create a ticket: “Add password reset functionality to our app.” An AI agent would read this, analyze your codebase to understand the existing authentication flow, design the reset flow, implement the backend logic, create the email templates, write tests, and submit everything for review. You’d get a complete, tested feature ready for final human review before deployment.
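The “supervised autonomy” pattern described above can be sketched as a pipeline where the agent works through its plan but pauses at key decision points for a human. This is a minimal sketch with hypothetical step names and stubbed `execute`/`approve` callbacks; it doesn’t use any real agent framework’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    name: str
    needs_approval: bool  # key decision points get a human checkpoint


@dataclass
class RunLog:
    completed: list[str] = field(default_factory=list)
    stopped_at: Optional[str] = None  # the step a human rejected, if any


def run_supervised(steps: list[Step],
                   execute: Callable[[Step], None],
                   approve: Callable[[Step], bool]) -> RunLog:
    """Run agent steps in order, pausing for human approval where required."""
    log = RunLog()
    for step in steps:
        if step.needs_approval and not approve(step):
            log.stopped_at = step.name  # rejected: stop rather than improvise
            return log
        execute(step)  # ideally this runs inside a sandboxed environment
        log.completed.append(step.name)
    return log


# Hypothetical plan an agent might propose for the password reset ticket.
PLAN = [
    Step("analyze auth code", needs_approval=False),
    Step("design reset flow", needs_approval=True),
    Step("implement backend logic", needs_approval=False),
    Step("write tests", needs_approval=False),
    Step("open pull request", needs_approval=True),
]
```

Where the checkpoints go is the real design decision. Here the human signs off on the design and on the final pull request, while routine implementation steps run unattended.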

PRO TIP 💡: As AI agents become more autonomous, you’ll need to track their performance just like you track human developers. Jellyfish already monitors AI agent metrics (from code generation and review bots to test generators) and shows you success rates, error patterns, and actual productivity impact.
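As a rough illustration of what “success rates and error patterns” means in practice, here is a minimal sketch that aggregates hypothetical agent run records. The record fields and agent names are assumptions, and this is not how Jellyfish or any other product computes its metrics.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentRun:
    agent: str                        # hypothetical agent name, e.g. "review-bot"
    succeeded: bool                   # however your team defines success (e.g. merged, not reverted)
    error_kind: Optional[str] = None  # short failure label, if the run failed


def summarize(runs: list[AgentRun]) -> dict[str, dict]:
    """Per agent: number of runs, success rate, and the most common failure label."""
    by_agent = defaultdict(list)
    for run in runs:
        by_agent[run.agent].append(run)

    summary = {}
    for agent, agent_runs in by_agent.items():
        successes = sum(run.succeeded for run in agent_runs)
        errors = Counter(run.error_kind for run in agent_runs
                         if not run.succeeded and run.error_kind)
        summary[agent] = {
            "runs": len(agent_runs),
            "success_rate": successes / len(agent_runs),
            "top_error": errors.most_common(1)[0][0] if errors else None,
        }
    return summary
```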

AI for Legacy System Modernization

What it is: AI models that can read old codebases, understand their logic despite poor documentation, and automatically translate them to modern programming languages and frameworks. Think COBOL to Java or jQuery to React, but with business logic intact.

Why teams should care: Legacy code holds companies back, but fixing it is expensive and risky. AI already helps teams understand undocumented code and convert simple functions. Full automation isn’t here yet, but even AI-assisted modernization beats a manual rewrite.

How to prepare:

- Document critical business logic in your legacy systems now

- Start small with AI-assisted refactoring for individual modules

- Build comprehensive test suites for legacy systems (AI needs these to verify migrations)

- Train your team on both old and new technologies

- Create isolated environments for testing AI-generated migrations

Example: A bank needs to modernize a 30-year-old COBOL payment system. Instead of manually documenting every function and rewriting from scratch, AI would analyze the code, extract the business rules, generate equivalent modern code, and create a comprehensive test suite to verify nothing broke.
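Verifying that “nothing broke” usually comes down to running the old and new code on the same inputs and comparing results. Below is a minimal differential-testing sketch. The fee rule is invented for illustration, `legacy_fee` is a Python stand-in for the original COBOL logic, and `migrated_fee` plays the role of the AI-generated replacement.

```python
import random
from decimal import Decimal, ROUND_HALF_UP


def legacy_fee(amount_cents: int) -> int:
    """Stand-in for the legacy rule: 1.5% fee, rounded half up, minimum 25 cents."""
    fee = (Decimal(amount_cents) * Decimal("0.015")).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    return max(int(fee), 25)


def migrated_fee(amount_cents: int) -> int:
    """Hypothetical migrated version using integer math (valid for non-negative amounts)."""
    fee = (amount_cents * 15 + 500) // 1000  # adding 500 before dividing rounds half up
    return max(fee, 25)


def find_mismatches(old, new, inputs):
    """Return every input on which the two implementations disagree."""
    return [x for x in inputs if old(x) != new(x)]


# Boundary values first (1699/1700 straddle the point where the fee rises above
# the minimum), then seeded random samples so any failure is reproducible.
EDGE_CASES = [0, 1, 1699, 1700, 3100, 10**9]
rng = random.Random(42)
SAMPLES = EDGE_CASES + [rng.randint(0, 10_000_000) for _ in range(10_000)]
```

A subtly wrong migration, such as one that truncates instead of rounding (`max(a * 15 // 1000, 25)`), disagrees with the legacy rule at 1700 and 3100. The mismatch list points straight at those inputs. Passing thousands of samples raises confidence, but it doesn’t prove the two versions are equivalent.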

Proactive Security and Compliance Automation

What it is: AI that monitors your codebase 24/7 for security holes and compliance violations. It then writes the fixes and updates policies to match new regulations automatically.

Why teams should care: Companies face stricter regulations and increasingly sophisticated attacks. AI could provide round-the-clock protection that scales with your codebase and spot issues before hackers or auditors do.

Still, complex compliance work needs human expertise. Here’s how this security engineer explained it:

GRC is way too complicated to just leave to AI. There’s a ton of nuance, especially with risk and compliance strategy.

Automation tools are solid for the boring stuff like pulling reports and collecting evidence, but I am not sure that there’s anything out there that has the balance you’re looking for.

How to prepare:

- Implement basic automated security scanning in your CI/CD pipeline

- Create clear security policies that AI can reference

- Document compliance requirements explicitly

- Start with AI handling known vulnerability patterns

Example: A developer accidentally commits hardcoded API keys. AI spots them instantly, removes them from the code, rotates the keys, and updates the secrets manager. For GDPR compliance, it identifies any unencrypted personal data and automatically applies the required protection.
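The “spot hardcoded keys” step is the most mechanical part of that example, and pattern-based scanners already handle it in many CI pipelines. Here is a minimal sketch with two illustrative rules: the AWS access key ID format (the sample uses AWS’s published example key ID) and a generic `api_key = "..."` assignment. Real scanners ship far larger rule sets. Rotating keys and updating a secrets manager are separate, provider-specific steps that aren’t shown here.

```python
import re
from typing import NamedTuple


class Finding(NamedTuple):
    line_no: int
    rule: str


# Illustrative rules only; production scanners use many more patterns plus entropy checks.
RULES = {
    "aws-access-key-id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "generic-api-key": re.compile(r"""(?i)\bapi[_-]?key\s*[:=]\s*['"][^'"]{16,}['"]"""),
}


def scan(text: str) -> list[Finding]:
    """Return (line number, rule name) for each line that matches a secret pattern."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(line_no, rule))
    return findings


sample = (
    'DEBUG = True\n'
    'API_KEY = "sk_test_0123456789abcdef"\n'   # hardcoded: flagged
    'aws_id = "AKIAIOSFODNN7EXAMPLE"\n'        # hardcoded: flagged
    'api_key = os.environ["API_KEY"]\n'        # read from the environment: not flagged
)
```

Deleting a key from the current file doesn’t remove it from version-control history, so rotating the exposed key is the step that actually closes the hole.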

Preparing Your Engineering Team for What’s Next

All these advancements sound promising, but they mean nothing if your team isn’t ready. Here’s how to prepare your engineers for what’s coming.

Rethinking Team Structure

Teams built for manual coding won’t fit an AI-augmented workflow. When AI handles implementation, you need fewer coders and more thinkers. The whole org chart needs to adapt.

Traditional structure usually looks something like this:

- 10 developers writing code

- 2 QA engineers testing

- 1 architect designing

- 1 product manager defining requirements

AI-augmented structure changes this to:

- 4 developers orchestrating AI and reviewing output

- 1 QA strategist defining test approaches (AI writes the tests)

- 2 architects (more design work when implementation is automated)

- 1 product manager validating AI-generated requirements

These new structures require different talents. Writing perfect code becomes less important than managing AI that writes good-enough code at scale.

The valuable engineers will be those who can see the big picture and direct AI accordingly. Here’s what one developer on Reddit predicted:

The future belongs to those who can effectively manage multiple agents at scale, or those who can design and maintain the underlying architecture that makes it all work.

This means your star performers might not be who you expect. The developer who writes flawless code by hand matters less than the one who can manage ten AI agents producing decent code.

The QA engineer who catches edge cases matters less than the one who teaches AI to find them automatically.

Investing in the Right Skills

Yes, the important skills are different now, but that’s good news for developers.

AI handles the grunt work so you can focus on creative solutions and complex challenges. The trick is to focus your energy on skills that complement AI rather than compete with it.

This breakdown shows which engineering skills to prioritize:

| Timeless engineering skills | AI-era skills | Human edge |
| --- | --- | --- |
| System design and architecture | Prompt engineering and optimization | Communication with non-technical stakeholders |
| Debugging complex issues | AI output validation and testing | Creative problem solving |
| Performance optimization | Managing AI agent workflows | Strategic thinking and business understanding |
| Security fundamentals | Understanding machine learning limitations and failure modes | Team collaboration and mentoring |
| Database design and data modeling | Orchestrating multiple AI tools together | User empathy and product thinking |

Human skills matter more than ever. AI writes good code but misses context. It can’t read between the lines in a meeting or know when to push back on a bad idea. Humans understand the politics, the trade-offs, and what needs to be built.

This developer made a great point on Reddit:

AI can help with tasks, but it can’t replace human creativity. Whether it’s coming up with new ideas for products, marketing, or problem solving, being able to think creatively will always be in demand.

AI might generate ten solutions to a problem, but it takes human insight to recognize which one fits your specific constraints, team dynamics, and business goals. Here’s how one developer sees it:

AI can handle a lot of tasks, but it’s still up to us to solve complex problems. Being able to think critically and creatively about issues will definitely help you stand out in an AI-driven workplace.

Complex problems rarely have clean solutions that you can prompt an AI to generate. Solving them means weighing politics, technical debt, user experience psychology, and market dynamics all at once.

These are the problems that matter most and the ones humans will always own.

PRO TIP 💡: Track which skills correlate with AI-powered productivity. Jellyfish’s AI Impact tool shows you which developers are great with AI tools and what they do differently — prompt techniques, tool selection, workflow patterns. You can use this data to build targeted training that replicates what your top performers already do.

Building an AI-Ready Culture

The biggest AI blockers are somewhat predictable. Fear of replacement keeps developers from even trying AI tools. The “that’s not how we do things” mindset kills experimentation before it starts.

Some teams wait for perfect AI before testing anything, while senior developers write off the whole thing as Silicon Valley hype. Meanwhile, managers worry about losing control over code quality.

The playbook for AI adoption looks like this:

- Start with volunteers: Find developers who want to try AI and let them lead. They’ll become your internal champions and help skeptics see the benefits.

- Share wins publicly: When someone saves hours with AI, tell the whole team. Concrete examples beat abstract promises every time.

- Remove access barriers: AI tools should be as easy to use as Google. No approval forms, no special requests, no budget fights.

- Reward experimentation: Celebrate attempts, not just successes. If someone tries AI for a new use case and it fails, that’s valuable learning.

- Let teams choose their approach: Don’t force specific AI workflows. Each team knows its own pain points best, so let teams decide how to tackle them.

- Create safe spaces to fail: Set up sandbox projects where developers can test AI without production pressure. Mistakes here cost nothing.

- Document what works: Build a shared library of successful prompts, use cases, and patterns. Make it easy for others to copy what works.

The key is to expand how people think about AI’s role. One engineering manager shared their approach:

I encourage my team to use it as often as necessary. If it helps them fill a gap, explain something, or help them get their job done faster, great. It’s not going to do the work for them, but it’ll help them get there.

The biggest barrier I’ve found is just trying to get people to think of all the ways it could potentially be useful, not only to help them get their current job done, but how it can be a digital assistant to take their work to the next level.

Build momentum through small wins. Don’t wait for the perfect AI strategy or comprehensive training program. Let curious developers experiment, share what works, and pull others along.

The teams that figure this out now will have a massive advantage when more powerful AI tools arrive.

Lead Your Team into the Future of Coding with Jellyfish

AI should make development faster and better, but most teams see mixed results at best. They buy licenses, send a Slack announcement, and hope for the best.

Meanwhile, they have no idea if developers really use the tools, if code quality improves, or if they picked the right AI assistant.

Jellyfish fixes this blind spot. It’s an engineering management platform that connects directly to your AI coding tools and development systems to show you what’s happening — who uses AI, how much it helps, and whether you’re getting your money’s worth.

Here are just some of the use cases you can expect:

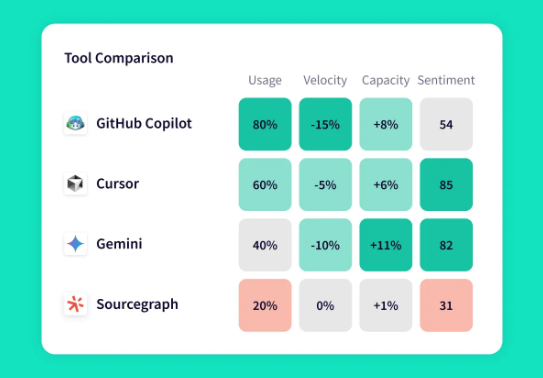

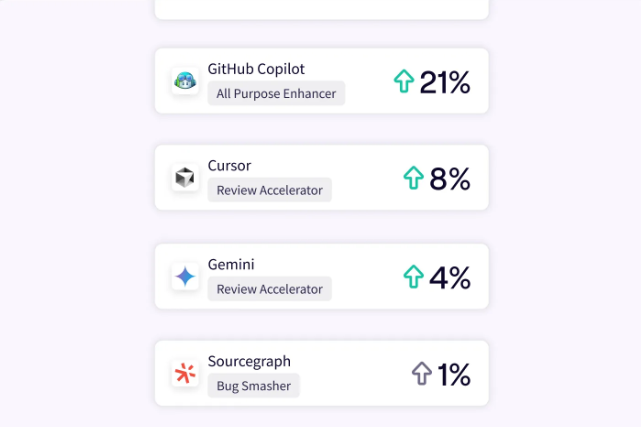

- Track AI adoption across your entire organization: Get detailed breakdowns of which teams use which AI tools (Copilot, Cursor, Gemini, Sourcegraph) and for what kind of work.

- Measure the actual impact of AI on productivity: See hard numbers on how AI changes your delivery velocity, bug rates, and developer efficiency.

- Compare AI tool performance head-to-head: See which AI assistant helps which team most, then streamline your tool mix and budget accordingly.

- Identify and replicate success patterns: Find the developers who get the most value from AI tools, understand their specific workflows, and then create playbooks to help every team achieve similar results.

- Calculate ROI on AI investments: Track exactly how much you spend on each AI tool versus the measurable productivity gains, delivery acceleration, and quality improvements to build bulletproof business cases for continued investment.

- Monitor AI agent performance: Get consistent, comparable insights on code review agents and other AI automation across your SDLC.

- Forecast capacity with AI productivity gains: Use historical data that accounts for AI-enhanced productivity to set realistic roadmaps and prevent team burnout.
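The ROI calculation in the list above reduces to simple arithmetic once you have the inputs. Here is a minimal sketch, assuming you value time saved at a loaded hourly cost per developer. The function name and every number in the usage line are hypothetical placeholders, not benchmarks.

```python
def ai_tool_roi(seats: int, monthly_cost_per_seat: float,
                hours_saved_per_dev_per_month: float,
                loaded_hourly_cost: float) -> float:
    """Monthly ROI = (value of time saved - tool cost) / tool cost."""
    cost = seats * monthly_cost_per_seat
    value = seats * hours_saved_per_dev_per_month * loaded_hourly_cost
    return (value - cost) / cost


# Hypothetical inputs: 50 seats at $20/month, 2 hours saved per developer, $100/hour.
roi = ai_tool_roi(50, 20, 2, 100)
```

The formula is trivial. The hard part is estimating hours saved credibly, and that input is what measurement tooling is meant to supply.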

With Jellyfish, you move from hoping AI helps to knowing exactly how much. You’ll see which tools deliver value, which teams benefit most, and where to focus your investment for maximum return.

Schedule an AI Impact demo to build the AI-native development culture your team needs to stay competitive.

FAQs About the Future of AI in Engineering

Will AI replace software engineers and programmers?

No, AI can’t replace developers because it lacks human judgment, creativity, and business understanding. It writes code but doesn’t know what to build or why it matters.

Developers become orchestrators who direct AI tools rather than typing every line themselves.

What is the single most important skill for an engineer to learn for the future?

Master the art of AI orchestration — knowing when to use AI, which tools fit the task, and how to validate the output.

It’s like conducting an orchestra where each AI tool is an instrument. The best engineers will direct multiple AI agents to solve complex problems.

What’s the difference between today’s AI assistants and the future of generative AI (GenAI)?

Current AI tools suggest code while you type and answer specific questions. Tomorrow’s AI will understand entire codebases, plan implementations, write tests, and even fix its own mistakes.

How will this shift affect the engineering job market?

The demand for engineers won’t drop, but the skills that get you hired will change. Startups in the tech industry will look for developers who can design systems, manage AI tools, and solve complex problems rather than those who just write clean code.

Entry-level roles might shrink, but opportunities for engineers who can leverage AI will expand.

Learn More About AI in Software Development

- What is the Impact of AI on Software Development?

- Benefits of AI in Software Development

- The Risks of Using AI in Software Development

- How to Measure the ROI of AI Code Assistants in Software Development

- How to Use AI in Software Development: 7 Best Practices & Examples for Engineering Teams

- AI in Software Testing and Quality Assurance

- Will AI Replace Software Engineers? No and Here’s Why

- What is the Responsibility of Developers Using Generative AI? Key Ethical Considerations & Best Practices

About the author

Lauren is Senior Product Marketing Director at Jellyfish where she works closely with the product team to bring software engineering intelligence solutions to market. Prior to Jellyfish, Lauren served as Director of Product Marketing at Pluralsight.