AI was at the center of almost every engineering conversation in 2025. It’s no surprise then that the vast majority of engineering organizations now use AI tools in some capacity, and these companies – especially those at the leading edge – are already seeing important gains.

By analyzing metrics from tens of thousands of Jellyfish users representing hundreds of organizations over the last 12 months, we can get a clear view of the year’s trends in AI adoption and its overall impact on the SDLC. We can also see how usage of market-leading tools like Claude, Cursor and GitHub Copilot shifted throughout 2025.

AI Coding Tool Adoption Continued to Rise

AI adoption reached new heights in 2025. According to our State of Engineering Management Report, 90% of teams now use AI in their workflows, up from 61% one year ago.

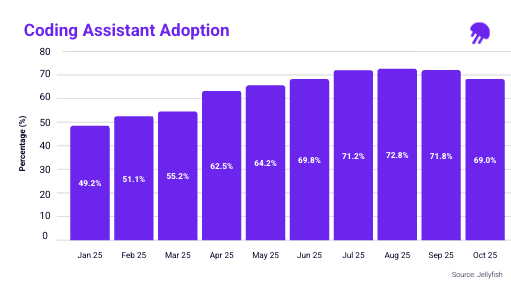

Jellyfish platform data shows similar patterns when we break AI usage down into specific capabilities: Code assistant adoption increased from 49.2% in January to 69% in October, peaking at 72.8% in August. That summertime spike may be linked to the release of Claude Opus 4.1, available in Claude Code, as well as the release of OpenAI’s GPT-5 in August, with updates to Codex the following month.

These numbers indicate growing confidence in AI; engineers who were previously skeptical about coding assistants are seeing enough potential value to get started. AI coding assistants evolved into context-aware agents over the last 12 months, with leading tools setting new standards for developer productivity.

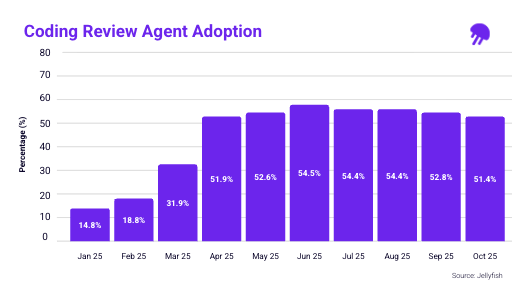

Adoption of code review agents grew even faster in 2025, from 14.8% in January to 51.4% in October. The data shows a major leap in adoption between March and April – coinciding with the general availability of Copilot Code Review. As AI review agents get better at detecting issues and vulnerabilities, more teams will trust the tools to improve the quality of their code. Combining tools like Jellyfish AI Impact and SonarQube provides engineering leaders with visibility into code quality and security as they deploy new AI tools.

How adoption differs by coding tool

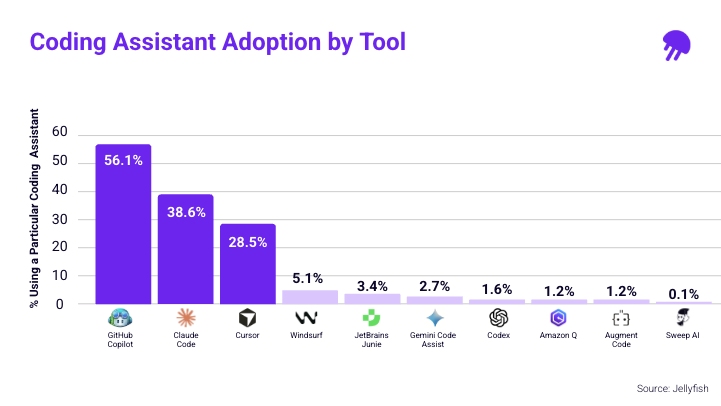

GitHub Copilot was the most popular coding assistant in 2025, followed by Claude Code and Cursor. Copilot also dominated code review this year: 67% of engineers use Copilot Review, followed by 12% for CodeRabbit. However, Cursor Agent is the top agentic AI tool: 19.3% of AI users have adopted Cursor Agent, compared to 18% who use GitHub Copilot Agent.

Claude Code, Copilot, and Cursor all boast similar retention rates. Of the engineers who started using Copilot or Cursor at the start of April 2025, 89% were still using the tool 20 weeks later, compared to 81% for Claude Code. With both Copilot and Cursor, we see retention drop off in the first weeks after adoption and pick back up again several weeks in. Engineering leaders can potentially avoid this dip with training and enablement, encouraging team members to share best practices and celebrate success stories.

What increased adoption means for leaders

As AI adoption increases, engineering leaders need to provide developers with training and support to use AI dev tools effectively.

AI has the potential to transform the developer experience, but only when organizations have the right processes in place. The 2025 DORA report showed that AI isn’t a silver bullet; instead, it magnifies an organization’s existing strengths and weaknesses. Increased investments in AI should motivate leaders to address the issues that could stand in the way of progress.

AI Made a Measurable Impact

AI adoption increased in 2025, but what does that mean for actual engineering results?

According to Jellyfish platform data, almost half of companies now have at least 50% AI-generated code, compared to just 20% of companies at the start of the year. This reflects not only the increase in AI coding assistant adoption, but also developers' growing trust in the outputs.

The percentage of AI-reviewed pull requests (PRs) grew steadily and significantly across the year. By October, just over 20% of companies used AI to review 10 to 20% of PRs. We can expect these numbers to rise in 2026 as AI code review tools move into the mainstream.

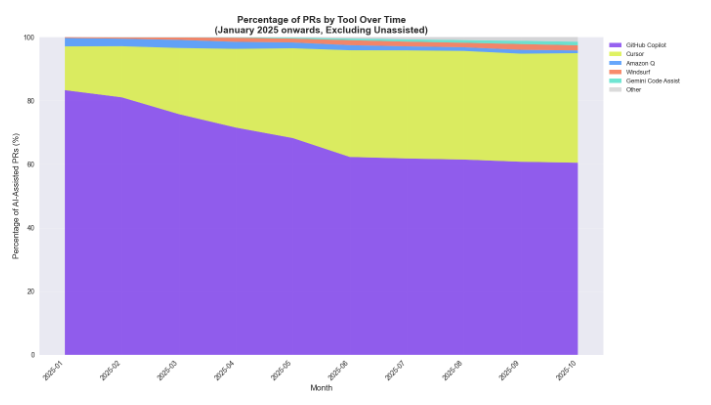

The percentage of PRs by tool over time paints an interesting picture. While GitHub Copilot was the most popular tool throughout the period, Cursor steadily gained ground. Over 80% of AI-assisted PRs used Copilot in January, compared to under 20% using Cursor. By October, those proportions had shifted to 60% and almost 40%. Could we see a 50/50 split by this time next year?

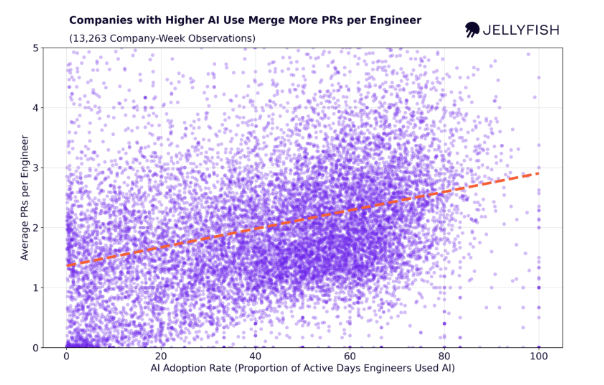

Digging deeper, Jellyfish teamed up with OpenAI to explore the impact of AI on coding productivity and quality. We found that companies with higher AI adoption:

- Merge more PRs per engineer: The average number of PRs per engineer increased 113% – from 1.36 to 2.9 – when adoption went from 0% to 100%.

- Have faster cycle times: Companies can expect their median cycle time to drop from 16.7 to 12.7 hours – a 24% reduction – when adoption goes from 0% to 100%.

- Push more bug fixes: 7.5% of PRs are bug fixes at companies with low adoption, compared to 9.5% at companies where adoption is high.
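
For readers who want to check the arithmetic behind the figures above, here is a minimal Python sketch. The function and variable names are illustrative assumptions and are not part of any Jellyfish or OpenAI tooling. The only inputs are the numbers quoted in the list. Relative change is used for PRs per engineer and cycle time, while the bug-fix share is compared in percentage points because it is already a percentage.

```python
def relative_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Figures quoted in the list above (0% vs. 100% AI adoption).
prs_per_engineer_change = relative_change(1.36, 2.9)
cycle_time_change = relative_change(16.7, 12.7)

# Bug-fix share is already a percentage, so report the gap in percentage points
# (low-adoption vs. high-adoption companies).
bug_fix_gap_points = 9.5 - 7.5

print(f"PRs per engineer: {prs_per_engineer_change:+.0f}%")
print(f"Median cycle time: {cycle_time_change:+.0f}%")
print(f"Bug-fix share: {bug_fix_gap_points:+.1f} percentage points")
```

Rounded to whole percentages, the results match the 113% increase and 24% reduction cited above. The bug-fix gap works out to 2 percentage points.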

These metrics provide critical insights into how AI is transforming engineering as a whole, but the numbers can vary widely from organization to organization. The Jellyfish AI Impact Framework allows leaders to track adoption, productivity, and outcomes so they can make data-informed decisions that will keep their company ahead of the curve.

Request a demo to learn more about how Jellyfish can cut through uncertainty and provide data-backed insights to inform your AI strategies.

About the author

Nikolas Albarran is a Product Researcher at Jellyfish.