In this article

The traditional testing playbook no longer works. Manual testing can’t scale with continuous deployment, and test automation has become its own burden – thousands of brittle scripts that break faster than teams can fix them.

Meanwhile, escaped defects hurt more than ever before. Users expect perfection and won’t hesitate to leave scathing reviews or jump to competitors.

AI-based testing breaks this cycle. It analyzes past failures, recognizes risky patterns, and concentrates on the parts of your code that usually cause trouble. The tests stay in sync with your application.

The impact on daily work is clear. One professional on Reddit put it this way:

This evolution, not replacement, is what most QA professionals experience:

Below, we’ll take a look at how AI handles common testing problems, the concrete benefits teams report, and how to integrate these tools into your existing process.

What is the Role of AI in Software Testing?

What is the Role of AI in Software Testing?

Modern AI testing tools can handle the repetitive tasks that would take human testers hours or days to complete manually. These are some of the primary ways teams use AI in their QA process:

- Creating test coverage automatically: AI analyzes your codebase and user workflows to generate relevant test cases without any manual effort. According to the State of Software Quality Report 2024, more teams use AI for test case generation than for any other testing task.

- Validating user interfaces across platforms: Artificial intelligence checks how your app looks across different browsers and devices to outline display problems that functional tests ignore. The system spots misaligned buttons and overlapped text, but it ignores browser-specific quirks.

- Providing detailed failure analysis: While regular tests just say “failed,” AI explains what users would experience and how to fix it. This context-rich reporting reduces the entire bug reporting cycle by 90%.

- Focusing tests on changed code: AI picks which tests to run by looking at what code changed and what usually breaks. Instead of running everything every time, teams test only what matters for each specific update.

- Identifying high-risk code areas: Machine learning algorithms study your bug history and code complexity to flag risky areas before you test. Since AI defect predictors find about 70% of bugs upfront, teams can target the right areas from day one.

Types of AI Software Testing

Types of AI Software Testing

The term “AI testing” refers to several separate techniques that manage different aspects of quality assurance. And each technique targets a particular issue that makes traditional testing slow, expensive, or unreliable:

- AI-driven test generation: Writing test scripts for every possible user path takes weeks of manual work. Generative AI explores your application automatically, maps out user flows, and creates comprehensive test suites that cover edge cases humans might never think to test.

- Self-healing test automation tools: Test maintenance kills productivity when every UI tweak breaks dozens of scripts. AI-powered tests adapt to changes like renamed buttons or moved elements on their own, so your automation runs smoothly without constant repairs.

- Visual testing: Functional tests tell you if a button works, but not if it’s invisible or overlapping other content. AI compares your UI testing across devices and outlines visual bugs like broken layouts or text that gets cut off on certain screens.

- Predictive bug detection: Your bug history holds clear patterns about failure-prone code. Machine learning looks at these patterns and outlines high-risk modules so you don’t waste cycles testing code that rarely breaks.

- Performance pattern recognition: Traditional load testing relies on made-up user scenarios that are way off from actual use. AI learns from production traffic to create authentic load tests and spot performance problems before they impact users.

- Intelligent test prioritization: You don’t need to test payment flows when you only change the header logo. Machine learning understands code dependencies and picks relevant tests for each commit, so developers get faster feedback.

Benefits of AI in Software Testing

Benefits of AI in Software Testing

Teams using AI testing report improvements that would have seemed impossible a few years ago. The numbers speak for themselves:

- Increased speed and shorter release cycles: Testing no longer holds up releases when AI handles the heavy lifting. Research shows that AI-driven automated testing tools reduce test cycle times by up to 60%.

- Improved test coverage and accuracy: Human testers can only check so many scenarios before deadlines hit. AI-powered automated testing tools enhance test coverage by up to 200% according to a Capgemini study, because they explore paths and combinations humans wouldn’t have time to test.

- Reduced costs and increased efficiency: QA typically eats up 25-40% of software budgets through manual labor and test maintenance. Companies that switch to AI-powered testing report up to 30% cost reduction, and they find more defects in less time.

- Frees up humans for high-impact work: QA engineers shouldn’t spend their days clicking through test scripts. Nearly 70% of workers want AI to handle routine work, according to Stanford, and this is particularly relevant in end-to-end testing, where automation frees experts to focus on complex quality challenges.

PRO TIP 💡: These benefits mean nothing if you can’t prove them with data. Jellyfish helps you track how AI adoption affects your overall software engineering metrics and delivery speed, so you can see which teams truly benefit from AI tools.

Manual Software Testing vs. AI Software Testing

Manual Software Testing vs. AI Software Testing

Manual testing built the software industry we know today. Human testers spot bugs that matter to users, understand context, and apply judgment that no script can replicate.

But as applications grow more complex and release cycles shrink from months to days, manual testing strategies alone can’t keep pace. Teams need weeks to test what users expect to work instantly, and even the best QA engineers can only cover a fraction of possible scenarios.

AI fills the gaps that manual testing simply can’t cover. A human tester checks maybe 50 test cases per day, but AI models run thousands of tests per hour without breaks or mistakes.

It spots trends across massive datasets, validates UI across every possible device combination, and adapts to code changes instantly. No amount of manual effort can match this scale — it’s like asking someone to count every grain of sand on a beach.

| Task | Manual testing | AI testing | Winner |

| Understanding user experience | Applies human judgment and empathy | Follows programmed rules | Manual |

| Exploratory testing | Finds unexpected issues through creativity | Tests only what it’s trained to find | Manual |

| Regression testing | Takes days, prone to human error | Runs thousands of tests in minutes | AI |

| Visual testing across devices | Checks a few key screens manually | Validates the entire app across all viewports | AI |

| Test maintenance | Requires updating scripts for every UI change | Self-heals and adapts automatically | AI |

| Edge case discovery | Limited by human imagination and time | Generates combinations humans wouldn’t consider | AI |

| Context and business logic | Understands the “why” behind requirements | Test execution without understanding the purpose | Manual |

| Cost at scale | Increases linearly with coverage | One-time setup, then minimal cost | AI |

| Initial setup | Start testing immediately | Requires training and configuration | Manual |

| Handling ambiguity | Makes judgment calls on unclear requirements | Needs clear pass/fail criteria | Manual |

The best teams combine both approaches based on what each does best. Manual testers explore new features, validate user workflows, and apply business knowledge that AI lacks.

AI runs the regression tests, checks every browser combination, and handles the tedious tasks that burn out QA engineers. Together, they create better coverage than either could achieve alone.

How to Use AI in Software Testing

How to Use AI in Software Testing

Implementing AI testing doesn’t mean you have to overhaul your entire QA process. These practical approaches let you add AI capabilities one step at a time while keeping what already works:

Create Self-Healing Tests to Reduce Maintenance

The old way: Every UI change breaks multiple test scripts, and QA engineers spend hours updating selectors and XPaths. A simple button rename can cause dozens of tests to fail, even though the functionality still works perfectly.

The AI way: AI-powered tests use multiple identification methods simultaneously, so they don’t break when one attribute changes. If developers change a button’s ID or move it elsewhere on the page, AI figures out it’s still the same button and adjusts the test to keep working. QA engineers who use these tools immediately notice the difference:

Self-healing tests make so much sense – maintenance has always been the most exhausting part of automation for me.

First steps you can take:

- Audit your current test suite to see which tests break most frequently due to UI changes

- Start with your most brittle tests (typically login flows and navigation that touch multiple pages)

- Configure multiple locator strategies so the AI has fallback options when primary selectors fail

- Set up alerts for when tests self-heal so you can review the changes and make sure they’re valid

- Run parallel tests with and without self-healing for a sprint to measure the reduction in maintenance time

Example: A retail company’s checkout tests broke weekly whenever marketing updated button text or developers restructured the page layout. After implementing self-healing tests, the same test suite that needed 15 hours of weekly maintenance now runs unattended. The AI recognizes that the “Buy Now” button became “Purchase” and updates the test automatically.

Automate Test Data Generation

The old way: QA teams manually create test data in spreadsheets or copy production data and scramble it for privacy. This process takes hours, often produces unrealistic data, and the same tired test accounts get reused until they break.

The AI way: AI studies your data model and automatically creates test data that looks and behaves like real production data. It generates complete datasets with proper relationships, valid edge cases, and realistic patterns in minutes.

First steps you can take:

- Map out your current test data requirements and outline which datasets take the most time to create manually

- Start with simple entities like user profiles before you move to more complex relational data

- Define your data rules and constraints clearly so that AI generates valid test scenarios

- Create templates for common test scenarios you run repeatedly

- Set up a test data pipeline that refreshes automatically before each test cycle

Example: A banking app’s QA team used to spend days building test accounts with transaction histories. Now their AI tool generates 10,000 complete customer profiles in under an hour, each with realistic spending patterns and edge cases like overdrafts or disputed charges they hadn’t considered testing.

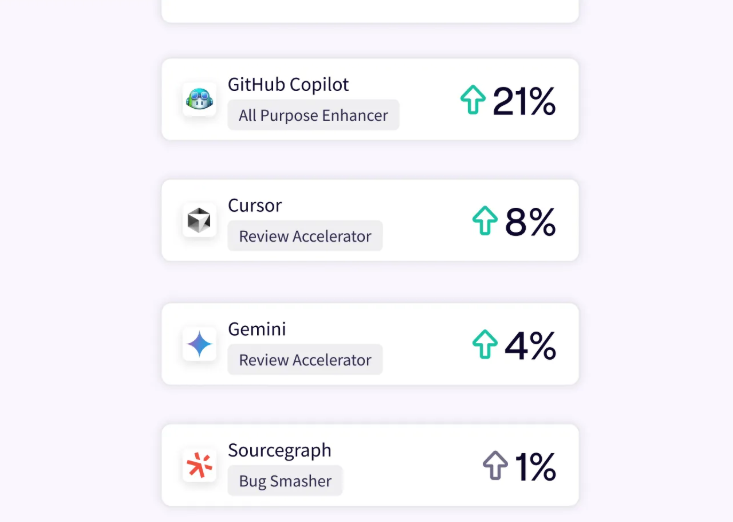

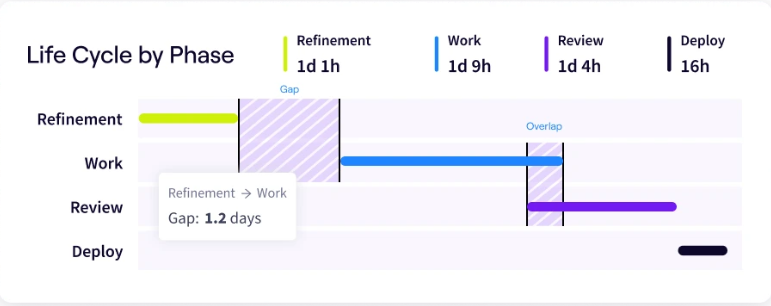

PRO TIP 💡: Faster test data helps only if it removes delays from your software development process. Jellyfish’s Life Cycle Explorer tracks where work stalls in your pipeline, so you can see whether QA improvements lead to quicker releases or if problems just shift elsewhere.

Automate Your Regression Suite

The old way: Manual regression test creation is so time-consuming that teams can’t keep up with development speed. Engineers are actively hunting for AI alternatives to escape this cycle, as this Reddit user posted:

The AI way: AI scans your entire application and builds regression tests based on actual user flows and code dependencies. The tests auto-update when you change features, so there’s no need for constant rewriting.

First steps you can take:

- Analyze your current regression suite to outline gaps in coverage and document which user paths cause the most production issues

- Import existing test cases if you have them, so the AI can learn your testing patterns and coverage priorities

- Configure the AI to monitor both code changes and production errors to see what needs regression testing

- Start with one module or feature area, let AI generate tests, verify accuracy, then expand to other areas

- Create feedback loops where failed tests and escaped bugs train the AI to streamline test generation

Example: An e-commerce platform with 500 manual regression tests switched to AI generation and doubled its coverage in two weeks. The AI created tests for abandoned cart recovery, guest checkout flows, and payment edge cases that the team had never documented. Now, when developers change the checkout process, the regression suite updates instantly.

Use AI for Defect Analysis

The old way: QA teams read through bug reports individually and try to figure out what went wrong with limited clues. Each engineer investigates their assigned bugs in isolation, so they miss that five different issues all come from the same broken service.

The AI way: AI connects the dots between related bugs, finds common root causes, and shows you which fixes worked before for similar issues. One engineer on Reddit explained the impact of this:

The intention isn’t to replace QA/QC teams here, but to essentially give them more cognitive bandwidth. Currently, all quality checks are done manually, and the intent here is to make it easier for these teams.

First steps you can take:

- Export your bug tracking history from Jira, Azure DevOps, or whatever system you use to create a training dataset

- Configure the AI to analyze bug descriptions, stack traces, and code changes to outline patterns and commonalities

- Set up automated bug classification so similar issues get grouped together for faster resolution

- Train the system on your resolved bugs so it learns which fixes worked for specific types of defects

Example: A SaaS platform might receive 500 bug reports per week that all look different to support teams. AI defect analysis could find out that 60% trace back to just three database connection issues, so engineers can take care of the real-time problems instead of patching symptoms endlessly.

Adopt Low-Code or No-Code Test Creation

The old way: Only engineers who know Selenium and programming languages can write automated tests, so most of the team sits on the sidelines. Business analysts and manual testers understand the product better than anyone, but can’t help with automation because they don’t code.

The AI way: Visual test builders let anyone create automated tests by recording actions or describing test steps in plain English. AI converts these natural language instructions or recorded workflows into maintainable test scripts that run as reliably as coded tests.

First steps you can take:

- Identify team members who understand your application deeply but don’t write code, like business analysts or manual QA testers

- Start with simple, stable workflows like login sequences before tackling complex multi-step processes

- Train non-technical team members on the tool with hands-on sessions based on real test scenarios they already know

- Measure how much faster tests get created and how many more team members contribute to test automation

Example: An insurance company’s manual QA team knew every edge case in their claims system, but couldn’t write automated tests. After adopting a no-code platform, these testers created 200 automated scenarios in two weeks by simply describing the steps in English. The AI translated phrases like “verify claim status updates after approval” into executable test code that runs nightly.

An Overview of Popular AI-Based Software Testing Tools

An Overview of Popular AI-Based Software Testing Tools

Today’s AI testing market offers solutions for agile teams of every size and technical skill level. While some tools focus on specific problems like visual bugs or test maintenance, others provide complete testing platforms.

Here are some of the market leaders worth evaluating:

| Tool | Primary use case | Key AI features | Best for |

| Testim | End-to-end web testing | Self-healing tests, smart element locators, and AI-based test authoring | Teams transitioning from manual to automated testing |

| Applitools | Visual and cross-browser testing | Visual AI for pixel comparisons, automatic baseline maintenance, and layout testing | Applications where UI consistency is critical |

| mabl | Continuous testing process in CI/CD | Auto-healing tests, intelligent failure analysis, and performance regression detection | DevOps teams that need integrated testing |

| Functionize | Autonomous test creation | Natural language test creation, ML-powered maintenance, predictive test selection | Enterprise teams with complex applications |

| Katalon | Multi-platform test automation | AI object recognition, smart failure analysis, automatic test generation | Teams testing web, mobile, and API testing together |

| Sauce Labs | Cross-browser and device testing | Failure pattern analysis, smart test orchestration, and visual regression detection | Organizations that need massive device coverage |

| TestCraft | Codeless Selenium automation | AI-based element binding, test flow optimization, and smart data management | Business testers without coding skills |

| Launchable | Test intelligence and optimization | Predictive test selection, flakiness detection, ML-based prioritization | Large codebases with slow test suites |

Before committing to any platform, evaluate it against your specific needs and constraints. You should consider these best practices:

- Check compatibility: Verify the tool integrates with your tech stack, CI/CD pipeline, and existing test frameworks.

- Map out the total cost of ownership: Include training, maintenance, and scalable costs apart from the base license.

- Test with your worst legacy code: See how it handles your messiest, most brittle existing tests.

- Validate vendor support quality: Submit a complex question during the trial to gauge metrics like response time and expertise.

- Check the escape hatch: Export some tests to make sure that you’re not locked into proprietary formats.

- Involve the whole team: Have developers, QA, and business analysts try the tool to spot adoption barriers.

- Monitor resource consumption: Check if the tool slows down your CI/CD pipeline or needs expensive infrastructure.

The right tool for another company might be wrong for yours. Base your decision on the actual improvements you see when your team uses the tool on real-world projects.

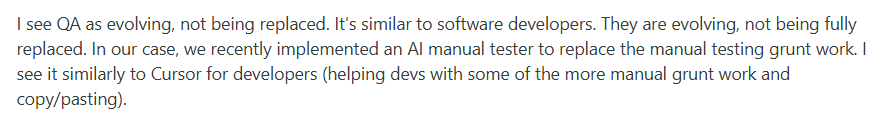

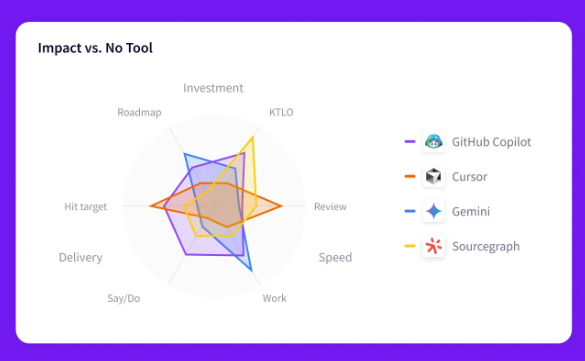

PRO TIP 💡: That expensive AI testing platform might only benefit two teams while others ignore it completely. Jellyfish shows you adoption rates and productivity metrics by tool and team, revealing whether to train more teams or admit the tool doesn’t fit your needs.

See the Big Picture with Jellyfish

See the Big Picture with Jellyfish

Your team might have the best AI testing tools available, yet still miss the benefits because you can’t see who uses them or how they help.

Without proper insights into usage and impact, AI investments pretty much become expensive experiments.

That’s where Jellyfish comes in.

Jellyfish provides the engineering intelligence platform that helps you get the most value from AI tools across your entire development organization:

- Track AI tool adoption and usage patterns: You can see exactly which teams use AI testing tools, how often they use them, and find barriers that are preventing wider adoption across your QA organization.

- Measure the real impact of AI on delivery: Track how AI testing affects deployment speed, defect rates, and quality metrics with concrete numbers you can share with leadership.

- Compare AI tool performance: Evaluate which AI testing platforms deliver the best test results for different team types and testing scenarios to optimize your tool selection.

- Identify where AI brings the most value: Discover which testing phases benefit most from AI automation and where human testers still provide irreplaceable value.

- Benchmark against industry peers: Compare your testing metrics with similar companies to see where you lead and where you lag behind.

- Forecast capacity with AI-enhanced productivity: Build accurate roadmaps based on actual time savings from AI testing and keep workloads sustainable for your teams.

With Jellyfish, you finally get clear answers about which AI tools work for your teams and which don’t.

Schedule an AI impact demo to see what your engineering data shows.

FAQs About AI in Software Testing

FAQs About AI in Software Testing

What features should I look for in an AI software testing tool?

Different teams need different AI features, so prioritize what fixes your current bottlenecks. These features typically deliver the most value:

- Self-healing capabilities: Tests should automatically adapt when UI elements change instead of breaking every time developers update the interface.

- Natural language test creation: Look for tools that let non-technical team members run tests in plain English or through visual recording.

- Automatic test prioritization: The tool should analyze code changes and run only relevant tests to speed up feedback loops.

- Root cause analysis: Choose platforms that explain why tests fail with detailed context, screenshots, and recommended fixes.

- Cross-browser and device coverage: Make sure the tool tests across multiple environments simultaneously without extra configuration.

- Integration with your CI/CD pipeline: The tool must work with your existing Jenkins, GitLab, or GitHub Actions setup without major changes.

Will AI completely replace manual testers?

No, AI makes testers more valuable, not obsolete.

While AI runs thousands of repetitive checks, human testers focus on creative problem-solving, user empathy, and understanding business context that machines can’t grasp.

How do I get my team started with AI testing?

Choose the test suite that breaks most often or takes the longest to run, and implement AI there first.

Let your team learn the tool on familiar tests where they’ll immediately see the benefits, then gradually expand to other areas.

Learn More About AI in Software Development

Learn More About AI in Software Development

- What is the Impact of AI on Software Development?

- Benefits of AI in Software Development

- The Risks of Using AI in Software Development

- How to Measure the ROI of AI Code Assistants in Software Development

- How to Use AI in Software Development: 7 Best Practices & Examples for Engineering Teams

- Will AI Replace Software Engineers? No and Here’s Why

- What’s The Future of Software Engineering with AI?

- What is the Responsibility of Developers Using Generative AI? Key Ethical Considerations & Best Practices

About the author

Lauren is Senior Product Marketing Director at Jellyfish where she works closely with the product team to bring software engineering intelligence solutions to market. Prior to Jellyfish, Lauren served as Director of Product Marketing at Pluralsight.