In this article

You started small. Five engineers, one deployment pipeline, everyone knew how everything worked. Then you scaled to 30 employees, then 50, and the simple setup that felt elegant now can’t seem to keep up.

Today, you have seven different ways to deploy services, inconsistent environments across teams, and infrastructure engineers who spend their days troubleshooting one-off problems. Teams are building their own workarounds because the official path is just too slow.

You know something needs to change. Now you need to figure out if platform engineering is the solution, or if your team isn’t quite ready for that level of investment yet.

This guide breaks down eight clear signs your organization needs platform engineering now. And if you recognize most of them, you’ve probably outgrown your current infrastructure.

The 8 Signs You're Ready to Adopt Platform Engineering

The 8 Signs You’re Ready to Adopt Platform Engineering

These eight patterns help you understand whether you’ve moved from “platform engineering would be nice” to “platform engineering solves the exact problems we have right now.”

If most of these describe your organization, you’ve reached the tipping point.

Sign 1: Your Developer Onboarding Takes Weeks, Not Days

The problem: A new developer joins your team and spends weeks asking for access, reading outdated docs, and asking how things “really work.” Their first meaningful code contribution happens in week four, if they’re lucky.

Why this happens: No one has built standardized environments or self-service tools. Each team cobbled together its own infrastructure, documentation exists in random Slack messages, and getting access means waiting for three different people to approve requests manually. The lack of standardization creates what one developer described as a nightmare scenario:

How platform engineering helps:

- New developers get instant access to pre-configured development environments through self-service portals

- Golden paths walk them from setup to first deployment without senior engineer intervention

- Standardized processes let developers move between teams without relearning everything

- Automated provisioning removes the multi-day wait for tool and environment access

- Internal developer portals provide a single source of truth for documentation, APIs, and deployment processes

Traps to avoid:

- Building a self-service portal that’s harder to use than the manual process it replaces, with complex forms and confusing workflows that only frustrate developers

- Creating golden paths that are too rigid and block developers who need flexibility for legitimate edge cases

- Documenting everything in your internal portal, but making it unsearchable or poorly organized

- Standardizing tools because they seem like the best practice, without validating that they fit your context

Example: Say your new developers take 15-18 days to ship their first meaningful change because they’re waiting on access and figuring out custom processes. Platform engineering could shrink that window to 2-3 days through self-service portals and documented golden paths, which then also frees senior engineers from repetitive onboarding support.

Sign 2: You Have Rampant Tool and Process Sprawl

The problem: Your frontend team uses CircleCI, your backend team swears by GitHub, and your data team built custom Jenkins pipelines. Each team has its own monitoring stack, deployment process, and way of managing secrets. When someone moves between teams or projects, they face a completely different toolchain and have to learn everything from scratch.

Why this happens: Teams chose tools independently based on their immediate needs without coordination across the organization. What made sense for one team at one point in time became locked in, and nobody had the authority or bandwidth to standardize across groups. The accumulation creates what one engineer described as a self-defeating cycle:

Every company I have worked with has a bloated DevOps stack. Terraform, Kubernetes, Jenkins, ArgoCD, GitHub Actions, Helm, Microsoft Azure—you name it.

It’s like every tool fixes one thing but breaks another, and somehow, the entire setup is still fragile as hell. There’s no scalability. Instead of simplifying DevEx, we’re just stacking more complexity on top of complexity.

How platform engineering helps:

- Narrows down to a focused set of approved tools that handle common needs

- Creates uniform workflows across teams so developers can deploy services, check logs, and manage secrets using the same patterns regardless of the underlying tech

- Teams can adopt cloud-native architectures consistently, with standardized approaches to containerization, service mesh, and microservices deployment

- Drops the mental overhead of remembering which commands work where, since deployment and configuration management work the same across all projects

- Makes cross-team collaboration easier because developers share a common vocabulary and toolset

- Makes internal transfers and cross-team projects seamless since developers bring their platform knowledge with them

Traps to avoid:

- Choosing enterprise-grade tools for everything when simpler solutions would work just fine

- Mandating internal developer platform (IDP) adoption through a top-down decree without getting buy-in from teams

- Forcing everyone onto a single rigid tool when legitimate differences between teams justify different approaches

- Standardizing too early, before you understand the common patterns (usually leads to premature decisions that don’t fit most teams and need constant exceptions)

Example: Consider an org where tool sprawl means teams collectively maintain eight CI/CD pipelines and three monitoring stacks. Platform engineering narrows this to two deployment options (one for containers, one for serverless) and a single observability platform. Support burden drops from eleven tools to three, and cross-team collaboration is now possible because everyone speaks the same infrastructure language.

PRO TIP 💡: Jellyfish’s resource allocation tracks how much time teams spend wrestling with different CI/CD tools and monitoring systems. After you consolidate to a standard platform, you can show the exact time savings by comparing before and after, which gives you hard numbers to prove the investment paid off.

Sign 3: “You Build It, You Run It” is Causing Burnout

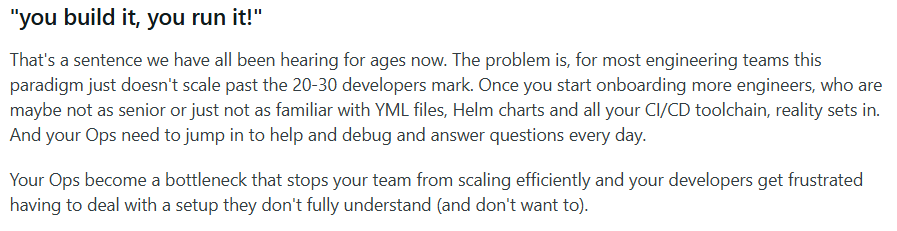

The problem: Your teams follow “you build it, you run it,” but developers now spend more time firefighting infrastructure than building features. On-call has become so brutal that talented engineers are quitting, and the ones who stay are burned out from handling alerts at 2 AM for problems they didn’t create. The phrase has become almost a joke in some organizations, as one engineer shared:

Why this happens: Full ownership sounded good in theory, but you never built the foundational platform that makes it sustainable. The cognitive load of managing everything from application code to infrastructure operations crushes developer productivity. And without shared tools or runbooks, every problem becomes a custom debugging session that exhausts whoever is on call.

How platform engineering helps:

- Takes ownership of infrastructure concerns like networking, security, and deployment pipelines so developers can focus on application logic

- Automates routine operational tasks and builds self-healing tools for known failure modes

- Draws clear lines between what the platform owns (infrastructure and deployment) and what developers own (application logic and feature behavior)

- Provides consistent operational tooling and documented procedures that work across all services

Traps to avoid:

- Swinging too far in the opposite direction and removing all operational responsibility from developers and product managers

- Building a platform that forces developers to file tickets and wait days for simple changes, so you end up with the old centralized ops model that made everyone miserable in the first place

- Taking over application-level concerns that developers should own (e.g., business logic monitoring or feature-specific alerting)

- Implementing automation that’s so complex that developers can’t understand or debug it when things go wrong

Example: Developers at a mid-sized company handle everything from container orchestration to SSL certificates, and get paged multiple times per night for infrastructure pain points they can’t fix. The company decides to use platform engineering to centralize infrastructure operations and standardize monitoring. On-call shifts have now become manageable, and developers can redirect their energy toward building products.

Sign 4: Your Software Development Teams are Constantly Blocked

The problem: Your developers need a new database instance or environment access, but they’re stuck waiting days for manual provisioning. Tickets pile up, projects stall, and what should take minutes stretches into a week because infrastructure changes need approval from a handful of gatekeepers.

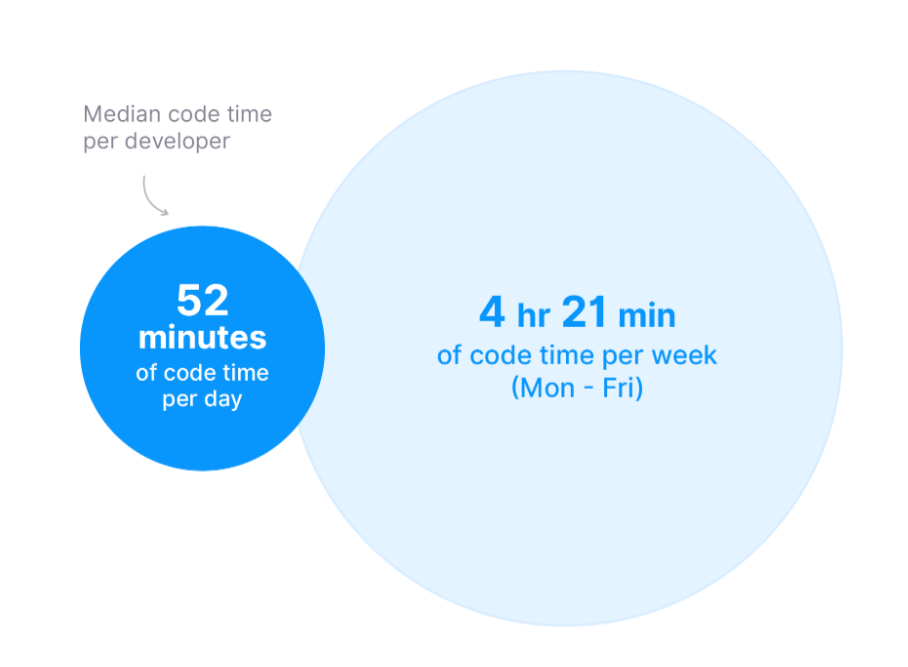

Research shows developers spend just 52 minutes per day writing code, roughly 4 hours and 20 minutes in a typical workweek. Waiting on infrastructure approvals eats directly into that already limited window.

(Source: Software.com)

Why this happens: Manual provisioning made sense when you had 10 developers, but now you have 50 and still route everything through the same three ops engineers. Without automation or self-service tools, these gatekeepers have become an organizational bottleneck that slows down everyone.

How platform engineering helps:

- Automates common infrastructure requests through APIs and internal tools that handle provisioning safely without human intervention for every change

- Defines pre-approved patterns and templates that let developers spin up resources confidently without needing to understand all the underlying security

- Reduces dependency on individual experts by making infrastructure provisioning a standardized, documented process

- Standardizes infrastructure as code (IAC) practices across teams, so infrastructure changes are reviewable, testable, and version-controlled like application code

- Creates clear ownership boundaries where developers self-serve within their domain, while the platform team maintains the base infrastructure

Traps to avoid:

- Making self-service provisioning so bureaucratic with approval chains that developers abandon it and go back to filing tickets with the ops team

- Standardizing templates that cover 60% of use cases and providing no path for the other 40%, which forces legitimate edge cases through slow manual processes

- Removing all constraints in the name of developer autonomy, so teams can provision whatever they want on AWS or other cloud providers, and create security holes or runaway costs

- Building self-service portals with complex interfaces that need training sessions to use, when the whole point was making infrastructure access simple and obvious

Example: Developers at a fintech company wait 3-5 days for the ops team to provision databases and environments, whether for transaction processing services or machine learning model training. With platform engineering, they use a self-service portal to create compliant database instances in minutes through pre-approved templates.

PRO TIP 💡: Jellyfish tracks cycle time and software delivery blockers across your engineering organization. Platform teams can measure whether self-service infrastructure improved velocity by comparing lead time and throughput before and after implementing automated provisioning.

Sign 5: Your Time-to-Market is Slowing Down

The problem: Releases that took two weeks now take two months, and everyone blames different parts of the process. Your team doubled in size, but velocity dropped, and your developers are spending more time coordinating deployments and troubleshooting infrastructure than writing code.

Why this happens: You never invested in standardizing or automating your release process as the team grew. The manual workload and custom configurations that seemed fine early on have compounded into a deployment process that’s slow, fragile, and impossible to scale. The contrast between automated and manual releases can be dramatic, as one developer shared their experience across two companies:

How platform engineering helps:

- Standardizes deployment pipelines across all teams so shipping code follows the same fast, predictable process regardless of which service or team is deploying

- Automates the repetitive tasks in the release process, like environment provisioning, testing, security scanning, and deployment, that currently need manual intervention

- Removes coordination overhead by providing self-service infrastructure that teams can use independently

- Brings faster feedback loops through consistent CI/CD practices and automated testing environments that catch issues early

Traps to avoid:

- Standardizing on slow, heavyweight deployment processes just because they’re consistent (when the main goal is to make releases both standardized and fast)

- Creating so many automated quality gates in the name of safety that deployments still take forever despite being “automated”

- Building automation that only works for simple, happy-path scenarios and fails for anything complex

- Optimizing only the deployment step while ignoring the bottlenecks in testing, environment setup, or approval workflows that eat up most of the timeline

Example: A SaaS company’s release cycle ballooned from two weeks to two months as they grew from 25 to 75 engineers. Platform engineering standardized deployment pipelines and automated environment setup. Releases dropped back to a two-week cycle, and teams shipped independently without cross-team coordination meetings.

Sign 6: You Can’t Enforce Security and Compliance Consistently

The problem: Security policies exist in documents and Slack reminders, but they’re applied inconsistently across teams because enforcement depends on manual reviews and individual vigilance. Some teams follow best practices religiously, while others skip steps under pressure.

Why this happens: Security policies exist as separate processes that developers need to remember and apply on top of their regular work. Following best practices depends entirely on developers remembering to do the right thing and having the expertise to implement it correctly (which breaks down as soon as deadlines get tight). When security is separate from the application development lifecycle, it gets deprioritized, as one Reddit user explained:

How platform engineering helps:

- Builds security and compliance controls directly into the platform so developers get secure-by-default infrastructure

- Offers pre-built secure templates for handling sensitive data, managing credentials, and configuring services that meet all compliance rules out of the box

- Creates consistent audit trails across all services automatically by logging infrastructure changes, access patterns, and deployments in a standardized way

- Removes the security expertise barrier by making secure choices the easy default path, so developers build compliant systems without becoming security specialists

Traps to avoid:

- Implementing security controls so strict that developers constantly need exceptions and workarounds

- Treating platform security as a complete solution when it only handles infrastructure concerns and can’t protect against application-layer vulnerabilities

- Implementing automated security gates that fail with vague error messages like “security violation detected” without explaining what’s wrong or how to fix it

- Relying entirely on platform controls while neglecting security training, code reviews, and threat modeling

Example: A healthcare company failed SOC 2 audits because teams handled secrets management and access controls inconsistently across 40 microservices. They use platform engineering to standardize security controls and automate compliance checks. The next audit passed cleanly, and security incidents dropped by 70%.

Sign 7: You Have No Way to Share Good Practices

The problem: Your best practices are invisible. One team has excellent API design patterns, another has solid caching strategies, and a third has robust error handling – but this knowledge exists only in their codebases.

New teams and new services start from zero every time. Posts asking for knowledge transfer advice have become common on engineering forums, like this developer facing a team departure:

Why this happens: Knowledge exists in people’s heads and individual codebases with no system to extract it. Teams don’t have time to document their solutions properly, and even when they do, nobody can find or trust that documentation six months later when it’s already obsolete.

How platform engineering helps:

- Builds golden paths that show teams the best way to solve common problems based on what’s worked well across the organization

- Creates shared libraries that make it easier to use the tested approach than to write something new (and potentially worse)

- Captures what works well and makes it available as platform features that any team can use right away

- Makes good patterns easy to find through internal catalogs that show what exists, how to use it, and who’s already using it successfully

Traps to avoid:

- Standardizing so heavily that teams can’t try new approaches when they face problems that the existing solutions don’t handle well

- Publishing reference architectures that become outdated fast because the platform engineering team can’t keep up with changes across the organization

- Making best practices so high-level and theoretical that they apply to everything vaguely, but help with nothing specifically when developers need concrete technical guidance

- Acting like the platform team has all the answers when individual teams often create better solutions that deserve wider platform adoption

Example: A company with 12 product teams found that each team built its own API rate limiting, logging structure, and error handling with wildly different quality levels. Platform engineering extracted the best implementations into shared libraries and created templates that new services could use immediately.

PRO TIP: Jellyfish shows which teams have the best deployment frequency, cycle time, and operational efficiency through its benchmarking tool. Platform teams can find these high-performing teams and study their practices to understand what works, then build those approaches into golden paths and shared templates that spread best practices across the organization.

Sign 8: Your System Reliability Depends on “Heroic Behavior”

The problem: Company production systems stay up because three specific engineers know all the workarounds, custom configs, and undocumented fixes that keep things running.

When one of them is on vacation or leaves the company, incidents that should take 20 minutes take three hours because nobody else understands how anything works. Take a look at how the worst-case scenario played out for this small DevOps team:

Why this happens: Your infrastructure grew through ad-hoc fixes and shortcuts that solved immediate problems but never got documented or standardized. The senior engineers who made these changes remember how everything fits together, but that knowledge never transferred to the rest of the team or into any system.

How platform engineering helps:

- Automates common incident responses and recovery procedures so systems can self-heal or guide any on-call engineer through resolution

- Standardizes infrastructure across services so operational knowledge transfers between systems, and engineers who understand one service can debug another

- Creates runbooks and operational documentation automatically as part of the platform, keeping troubleshooting guides current instead of relying on outdated wikis

- Distributes operational capability across the team by making systems understandable and manageable by anyone (not just the person who originally built them)

Traps to avoid:

- Writing comprehensive runbooks that nobody maintains, so they become outdated and guide engineers toward solutions that no longer work or apply

- Standardizing operations in ways that remove all flexibility, so when incidents fall outside documented procedures, teams have no tools or authority to respond

- Spreading on-call duties without spreading knowledge, so more people suffer through incidents they can’t solve

- Creating alert fatigue where engineers ignore pages because most of them are false alarms or minor issues

Example: A SaaS provider depended on one engineer who understood their custom infrastructure quirks and handled most production incidents. Successful platform engineering standardized systems and built automated diagnostics with clear runbooks. The entire team could now resolve incidents, and the hero engineer stopped getting paged at 3 AM.

Where to Start: The First Steps in Your Platform Journey

Where to Start: The First Steps in Your Platform Journey

Look at the eight signs and see how many match your situation. Don’t convince yourself you’re ready if you’re not, but also don’t pretend everything is fine when multiple signs are screaming at you.

Here’s a quick checklist to help interpret your score:

- 0-2 signs: Hold off for now. Your team is still small enough that informal development processes work fine, and you haven’t hit the complexity that makes platform engineering worth the investment. Keep things simple and check back in 6-12 months.

- 3-4 signs: You’re getting close. Don’t jump into full platform engineering yet, but start laying the groundwork. Try small standardization wins like shared CI/CD templates or unified monitoring to see what works and build support for bigger changes.

- 5-6 signs: Time to start. You have enough pain that platform engineering will pay off clearly. Pick your 2-3 worst problems and tackle those first while you build the team and get organizational buy-in for the longer journey.

- 7-8 signs: You’re overdue. Delaying platform engineering is costing you velocity, burning out engineers, and creating technical debt. Get a platform team in place fast and target the bottlenecks that are hurting you most.

Platform engineering includes many possible projects, and you need to pick your battles. Some give you quick improvements without huge effort, while others take serious time but fix the structural problems hurting your team.

Use this matrix to figure out what to start with:

| Initiative | Impact | Effort | Time to value | When to prioritize |

| Self-service environment provisioning | High | Medium | 4-8 weeks | High onboarding pain, frequent blocking |

| Standardized CI/CD pipelines | High | Medium | 6-10 weeks | Tool sprawl, inconsistent deployments |

| Golden paths and templates | Medium | Low | 2-4 weeks | Knowledge silos, inconsistent practices |

| Automated security/compliance checks | High | High | 8-12 weeks | Audit failures, security incidents |

| Internal developer portal | Medium | High | 12-16 weeks | Poor discoverability, fragmented docs |

| Observability standardization | High | Medium | 6-10 weeks | Hero dependency, long incident resolution |

Start with what hurts most. If new developers take weeks to get productive and teams wait days for infrastructure, start with self-service provisioning.

If tool chaos and inconsistent deployments slow you down, standardize your CI/CD pipelines first. Choose the 2-3 issues causing the worst problems and work on those before expanding to other areas.

How to Measure the Success of Your Platform Initiative

How to Measure the Success of Your Platform Initiative

So you’re ready to start platform engineering. That’s great. But in six months, someone will ask if it’s working. If you can’t answer with numbers that show faster deployments, happier developers, or fewer incidents, your platform initiative is in trouble.

This is where Jellyfish comes in.

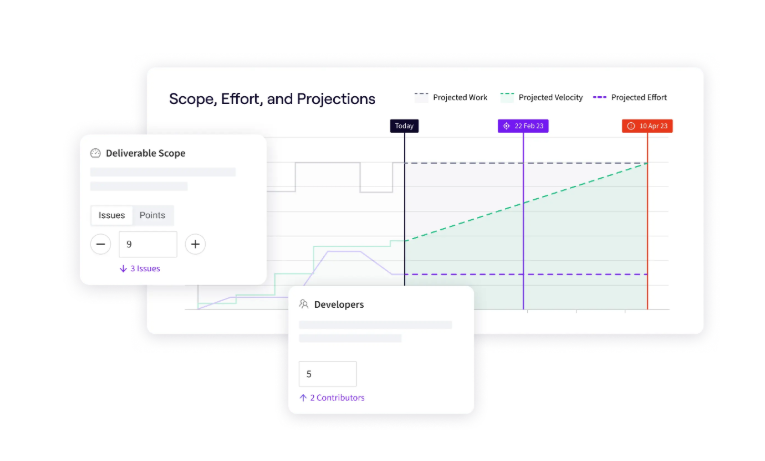

Jellyfish is a software engineering management platform that breaks down how your engineering organization operates by tracking work patterns, team performance, and resource distribution.

To be specific, here’s exactly what Jellyfish brings to the table:

- Resource allocation visibility: Jellyfish tracks how engineers spend their time across feature work, infrastructure tasks, support requests, and unexpected problems. You get clear evidence that platform engineering reduced toil by tracking how many hours were moved from infrastructure babysitting to shipping features.

- DORA metrics tracking: The platform tracks deployment frequency, lead time, change failure rate, and mean time to recovery automatically by connecting to your CI/CD and incident management tools. You can see whether your standardized pipelines and golden paths improved delivery speed compared to the chaos you had before.

- Integration with the existing set of tools: The platform integrates with Git, Jira, CI/CD systems, incident tools, and Slack to collect metrics automatically without changing how developers work. Platform teams can start measuring impact immediately instead of asking developers to adopt new tracking systems on top of everything else.

- Engineering investment analysis: Jellyfish maps engineering time to projects and objectives without manual tracking or timesheet bureaucracy. You can show executives that standardization worked by displaying how much capacity moved from keeping lights on to shipping customer value.

- Benchmarking against industry standards: Jellyfish uses data from 1,000+ engineering organizations to show where your team stands on key metrics. You can compare your deployment speed and cycle time against top performers to understand if your platform engineering results are good or just good enough.

You can’t streamline what you don’t measure, and platform engineering is no exception. Jellyfish gives you the metrics to validate your platform investments, prove ROI to executives, and outline bottlenecks that still need attention.

Schedule a demo and see if your platform is working as well as you think it is.

FAQs

FAQs

Is platform engineering just a new name for DevOps?

No. They’re related but different. DevOps introduced the idea that developers should handle operations for what they build. Platform engineering creates the infrastructure that makes this possible without chaos.

You can practice DevOps without platform engineering when you’re small, but as you scale, platform engineering becomes the thing that keeps DevOps from collapsing under its own weight.

What’s the difference between a platform team and an SRE team?

Platform teams build internal products that developers use to ship code faster – things like deployment pipelines, self-service infrastructure, and developer portals.

On the other hand, SRE teams focus on reliability, observability, and incident response for production systems.

There’s overlap, and some organizations combine them, but the core difference is that platform engineering leans toward developer experience while SRE leans toward operational reliability.

Do I need a platform team if I’m a small startup?

Platform engineering makes sense when you have enough engineers and complexity that ad-hoc solutions break down. If you have fewer than 20 engineers and your current setup isn’t actively blocking you, skip platform engineering and focus on building your product.

What are some common use cases for a Thinnest Viable Platform (TVP)?

The most effective TVPs tackle these three areas first:

- Self-service infrastructure that lets developers provision environments and databases through simple interfaces instead of waiting on ops

- Standard deployment pipelines that work out of the box for most services, so teams don’t reinvent CI/CD

- A basic internal portal where developers can find documentation, discover existing services, and understand what infrastructure is available

How does platform engineering fit into our company’s digital transformation?

It’s the engine that powers digital transformation. Stakeholders talk about moving faster and becoming more agile, but that only happens when engineering teams can ship code quickly and reliably.

Platform engineering creates the foundation your transformation needs by fixing the infrastructure problems that slow teams down.

Learn More About Platform Engineering

Learn More About Platform Engineering

- The Complete Guide to Platform Engineering

- Platform as a Product: The Foundation for Successful Platform Engineering

- Platform Engineering vs. DevOps: What’s the Difference?

- How to Build a Platform Engineering Team: Key Roles, Structure, and Strategy

- Understanding The Platform Engineering Maturity Model

- How to Build Golden Paths Your Developers Will Actually Use

- Best 14 Platform Engineering Tools Heading Into 2026

- 8 Benefits of Platform Engineering for Software Development Teams

- 17 Platform Engineering Metrics to Measure Success and ROI

- 9 Platform Engineering Anti-Patterns That Kill Adoption

- The Top Platform Engineering Skills Every Engineering Leader Must Master

- 11 Platform Engineering Best Practices for Building a Scalable IDP

- What is an Internal Developer Platform (IDP)?

About the author

Lauren is Senior Product Marketing Director at Jellyfish where she works closely with the product team to bring software engineering intelligence solutions to market. Prior to Jellyfish, Lauren served as Director of Product Marketing at Pluralsight.